from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import StandardScaler

from sklearn.tree import DecisionTreeClassifier

pipe_tree = make_pipeline(

(StandardScaler()),

(DecisionTreeClassifier(random_state=123))

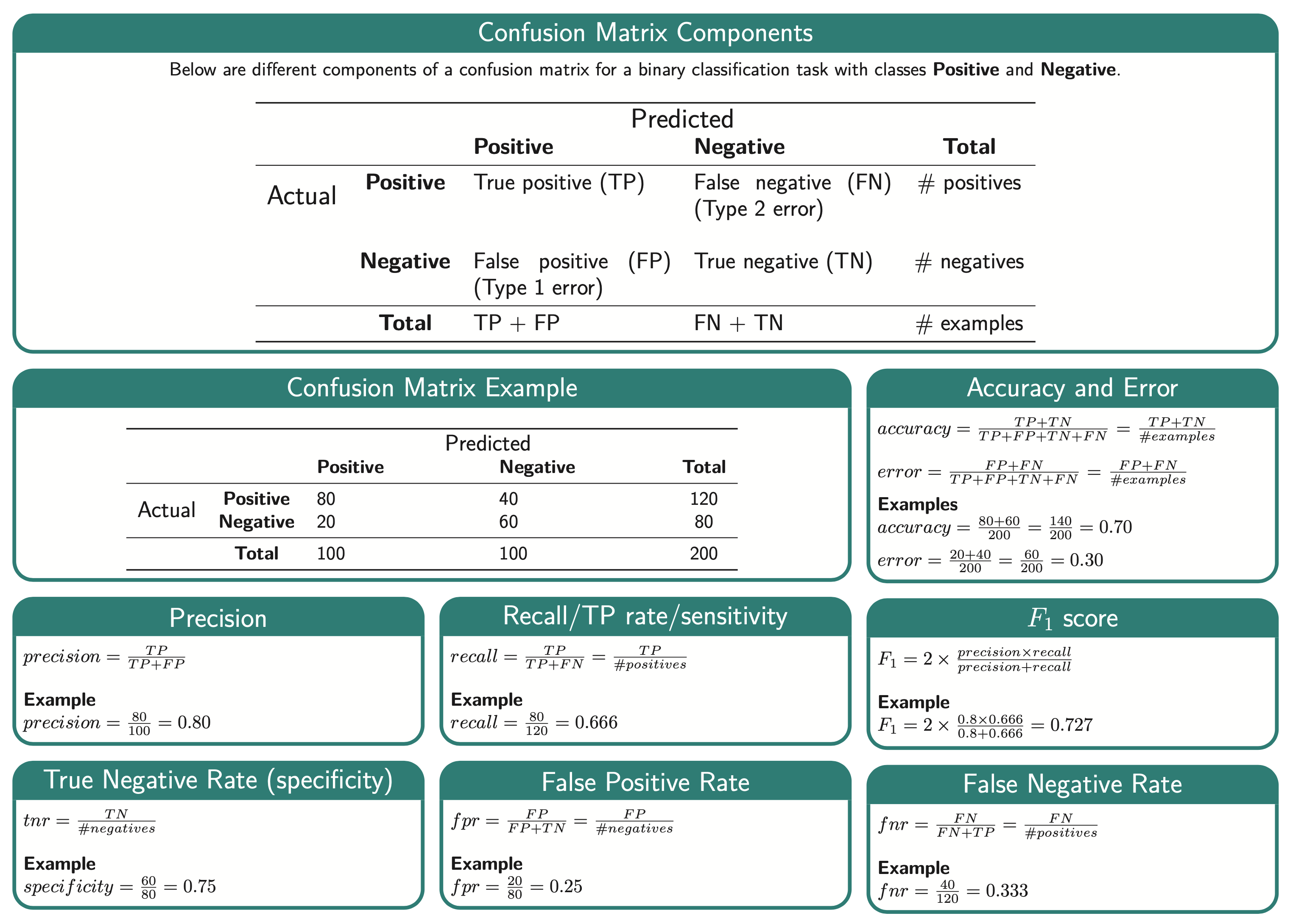

)Precision, Recall and F1 Score

Accuracy is only part of the story…
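To see why accuracy alone can mislead, here is a minimal sketch on synthetic, heavily imbalanced labels. The names `X_demo`, `y_demo` and `dummy` are illustrative assumptions and not part of the original data. A baseline that always predicts the majority class scores very high accuracy while finding none of the positive examples.

```python
import numpy as np
from sklearn.dummy import DummyClassifier
from sklearn.metrics import accuracy_score, recall_score

# Synthetic, illustrative data: only 10 positives among 10,000 examples
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(10_000, 3))
y_demo = np.zeros(10_000, dtype=int)
y_demo[:10] = 1

# Baseline that ignores the features and always predicts the most frequent class
dummy = DummyClassifier(strategy="most_frequent")
dummy.fit(X_demo, y_demo)
dummy_pred = dummy.predict(X_demo)

demo_accuracy = accuracy_score(y_demo, dummy_pred)  # 9,990 of 10,000 correct
demo_recall = recall_score(y_demo, dummy_pred)      # no positive example is found

print(f"accuracy = {demo_accuracy:.3f}, recall = {demo_recall:.3f}")
```

The baseline is right on 99.9% of the examples, yet its recall is 0: it never identifies a positive.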

from sklearn.model_selection import cross_validate

pd.DataFrame(cross_validate(pipe_tree, X_train, y_train, return_train_score=True)).mean()fit_time 9.988710

score_time 0.005139

test_score 0.999119

train_score 1.000000

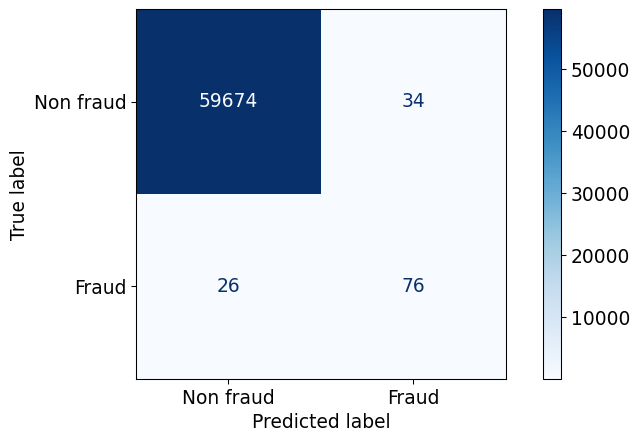

dtype: float64from sklearn.metrics import confusion_matrix

pipe_tree.fit(X_train,y_train);

predictions = pipe_tree.predict(X_valid)

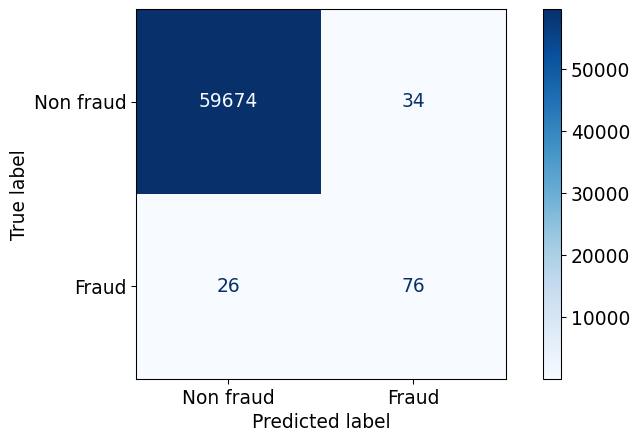

confusion_matrix(y_valid, predictions)

array([[59674,    34],
       [   26,    76]])

Recall

Among all the examples that are actually positive, how many did the model identify? Recall = TP / (TP + FN)

Precision

Among the examples the model identified as positive, how many were actually positive? Precision = TP / (TP + FP)

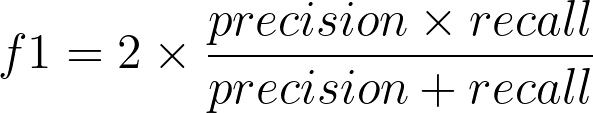
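The two questions above map directly onto the confusion matrix: recall divides by a row total (all actual positives) and precision divides by a column total (all predicted positives). The sketch below re-enters the counts from the matrix shown earlier. The labels and variable names (`cm`, `recall_demo`, `precision_demo`) are assumptions added for illustration.

```python
import numpy as np
import pandas as pd

# Counts copied from the confusion matrix shown earlier
# (rows: actual class, columns: predicted class)
cm = pd.DataFrame(
    np.array([[59674, 34], [26, 76]]),
    index=["actual negative", "actual positive"],
    columns=["predicted negative", "predicted positive"],
)

true_positives = cm.loc["actual positive", "predicted positive"]

# Recall: of all actual positives (the row total), how many were found?
recall_demo = true_positives / cm.loc["actual positive"].sum()

# Precision: of all predicted positives (the column total), how many were right?
precision_demo = true_positives / cm["predicted positive"].sum()

print(cm)
print(f"recall = {recall_demo:.6f}, precision = {precision_demo:.6f}")
```

With these counts, recall is 76 / 102 and precision is 76 / 110.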

F1 score

The F1 score combines precision and recall into a single number: their harmonic mean, which is high only when both precision and recall are high.
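A small sketch of how the combination behaves, using made-up precision and recall values. The helper `f1_from` is a hypothetical name, not an sklearn function. Two models with the same arithmetic mean of precision and recall can have very different F1 scores, because the harmonic mean is pulled toward the smaller value.

```python
def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Illustrative values only: both pairs have an arithmetic mean of 0.6
balanced = f1_from(0.6, 0.6)   # precision and recall agree
lopsided = f1_from(1.0, 0.2)   # perfect precision, poor recall
arithmetic_mean = (1.0 + 0.2) / 2

print(f"balanced F1 = {balanced:.3f}")
print(f"lopsided F1 = {lopsided:.3f} (arithmetic mean = {arithmetic_mean:.3f})")
```

The balanced pair keeps an F1 of 0.6, while the lopsided pair drops to about 0.333 even though its arithmetic mean is also 0.6.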

Calculate evaluation metrics by ourselves and with sklearn

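from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score

# Unpack the confusion matrix from above (rows: true labels, columns: predictions)
TN, FP, FN, TP = confusion_matrix(y_valid, predictions).ravel()

# Compute precision and recall first; the F1 score is built from them
precision = TP / (TP + FP)
recall = TP / (TP + FN)

# Metrics calculated by hand from the confusion matrix counts
data = {}
data["accuracy"] = [(TP + TN) / (TN + FP + FN + TP)]
data["error"] = [(FP + FN) / (TN + FP + FN + TP)]
data["precision"] = [precision]
data["recall"] = [recall]
data["f1 score"] = [(2 * precision * recall) / (precision + recall)]
measures_df = pd.DataFrame(data, index=['ourselves'])

# The same metrics from sklearn, appended as a second row
pred_cv = pipe_tree.predict(X_valid)
data["accuracy"].append(accuracy_score(y_valid, pred_cv))
data["error"].append(1 - accuracy_score(y_valid, pred_cv))
data["precision"].append(precision_score(y_valid, pred_cv, zero_division=1))
data["recall"].append(recall_score(y_valid, pred_cv))
data["f1 score"].append(f1_score(y_valid, pred_cv))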

pd.DataFrame(data, index=['ourselves', 'sklearn'])

| | accuracy | error | precision | recall | f1 score |
|---|---|---|---|---|---|
| ourselves | 0.998997 | 0.001003 | 0.690909 | 0.745098 | 0.716981 |
| sklearn | 0.998997 | 0.001003 | 0.690909 | 0.745098 | 0.716981 |

Classification report