Lecture 6: Common Distribution Families and Conditioning

Learning Goals

By the end of this lecture, you should be able to:

Identify and apply common continuous distribution families.

Identify what makes a function a bivariate probability density function.

Compute probabilities from bivariate probability density functions.

Compute conditional distributions for continuous random variables.

Important

Let us make a note on the Greek alphabet. Throughout MDS, we will use a variety of Greek letters across statistical topics. Usually, these letters represent distributional parameters. That said, it is not necessary to memorize them right away; with practice, you will become familiar with the alphabet. You can find the whole alphabet in Greek Alphabet.

1. Common Continuous Distribution Families

As with discrete distributions, there are parametric families of continuous distributions. Recall the chart of univariate distributions, which gives key information about each family, such as its probability density function (PDF). The chart also illustrates how these distributions are related via random variable transformations.

1.1. Uniform

Process

A Uniform distribution has equal density between two points \(a\) and \(b\) (for \(a < b\)), and is usually denoted by

\[
X \sim \operatorname{Uniform}(a, b).
\]

That means that there are two parameters: one for each end-point. A reference to a “Uniform distribution” usually implies continuous uniform, as opposed to discrete uniform.

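PDF

The density is

\[
f_X(x) =
\begin{cases}
\dfrac{1}{b - a} & \text{for } a \leq x \leq b, \\
0 & \text{otherwise.}
\end{cases}
\]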

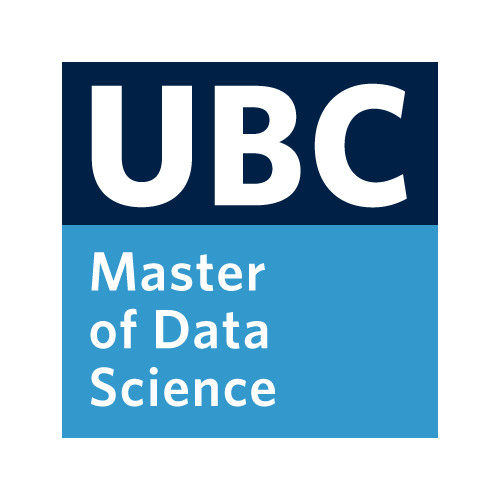

Here are some densities from members of this family:

Mean

The mean of a Uniform random variable is given by

\[
\mathbb{E}(X) = \frac{a + b}{2}.
\]

Variance

The variance of a Uniform random variable is given by

\[
\operatorname{Var}(X) = \frac{(b - a)^2}{12}.
\]
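As a quick sanity check of the closed-form Uniform mean \((a + b)/2\) and variance \((b - a)^2/12\), the sketch below integrates \(x f_X(x)\) and \((x - \mathbb{E}(X))^2 f_X(x)\) numerically with R's integrate() and dunif(). The end-points \(a = 1\) and \(b = 4\) are arbitrary choices for illustration, not values from the lecture.

```r
# Arbitrary end-points chosen for illustration
a <- 1
b <- 4

# Mean: integrate x * f_X(x) over the support [a, b]
unif_mean <- integrate(function(x) x * dunif(x, min = a, max = b),
                       lower = a, upper = b)$value

# Variance: integrate (x - mean)^2 * f_X(x) over the support
unif_var <- integrate(function(x) (x - unif_mean)^2 * dunif(x, min = a, max = b),
                      lower = a, upper = b)$value

# Compare with the closed-form expressions
c(numeric = unif_mean, formula = (a + b) / 2)     # both 2.5
c(numeric = unif_var, formula = (b - a)^2 / 12)   # both 0.75
```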

1.2. Gaussian or Normal

Process

This is probably the most famous family of distributions. Its density follows a “bell-shaped” curve. It is parameterized by its mean \(-\infty < \mu < \infty\) and variance \(\sigma^2 > 0\). A Normal distribution is usually denoted as

\[
X \sim \mathcal N(\mu, \sigma^2).
\]

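PDF

The density is

\[
f_X(x) = \frac{1}{\sqrt{2 \pi \sigma^2}} \exp\left[-\frac{(x - \mu)^2}{2 \sigma^2}\right] \quad \text{for } -\infty < x < \infty.
\]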

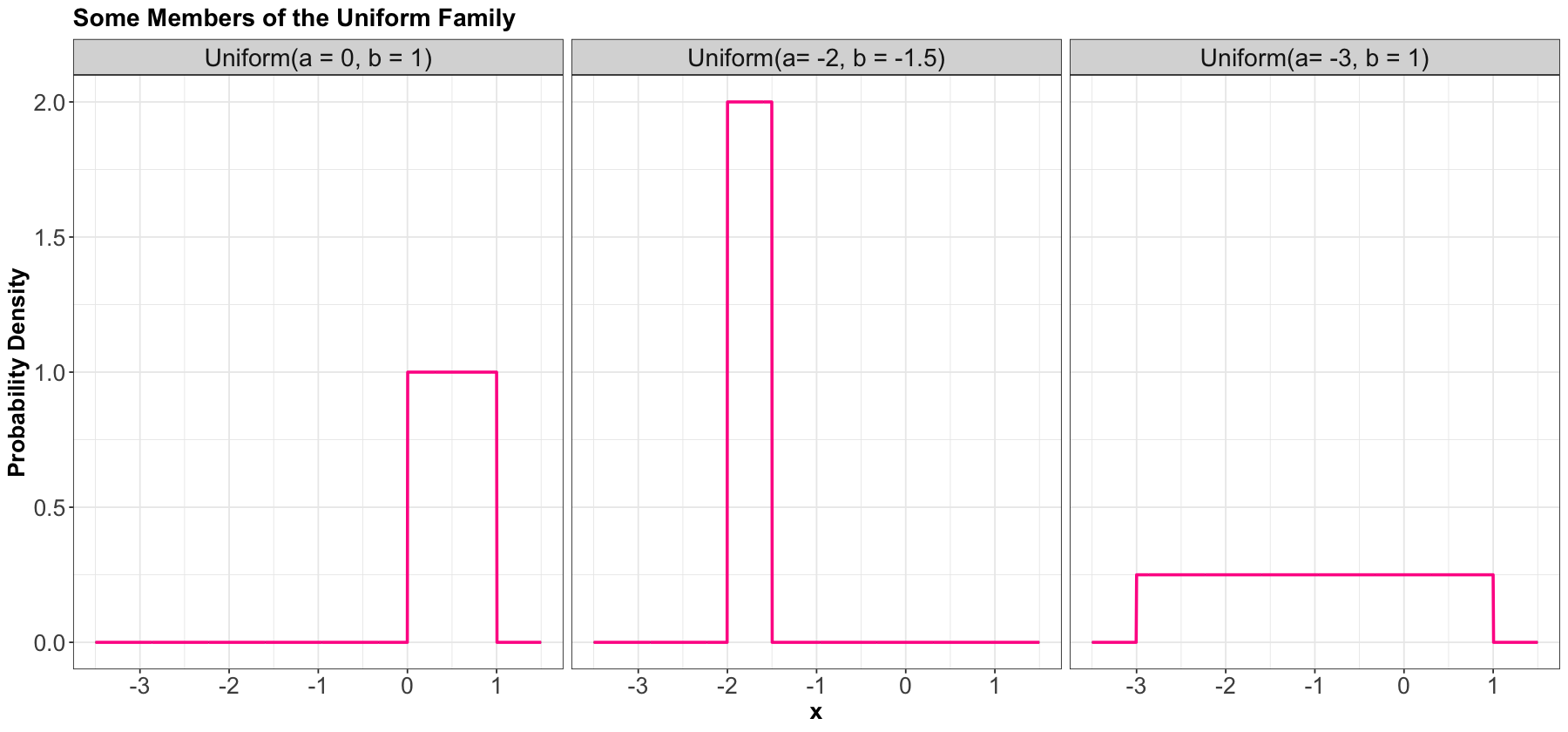

Here are some densities from members of this family:

Mean

The mean of a Normal random variable is given by

\[
\mathbb{E}(X) = \mu.
\]

Variance

The variance of a Normal random variable is given by

\[
\operatorname{Var}(X) = \sigma^2.
\]
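The Normal mean \(\mu\) and variance \(\sigma^2\) can be checked numerically in the same spirit. This sketch assumes the arbitrary values \(\mu = 2\) and \(\sigma^2 = 9\). Note that R's dnorm() is parameterized by the standard deviation sd = \(\sigma\), so we pass sqrt(sigma2).

```r
mu <- 2
sigma2 <- 9            # variance (arbitrary illustrative value)
sigma <- sqrt(sigma2)  # dnorm() expects the standard deviation, not the variance

# Mean and variance by integrating over the whole real line
norm_mean <- integrate(function(x) x * dnorm(x, mean = mu, sd = sigma),
                       lower = -Inf, upper = Inf)$value
norm_var <- integrate(function(x) (x - mu)^2 * dnorm(x, mean = mu, sd = sigma),
                      lower = -Inf, upper = Inf)$value

c(mean = norm_mean, variance = norm_var)  # approximately 2 and 9
```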

1.3. Log-Normal

Process

A random variable \(X\) follows a Log-Normal distribution if the transformation \(\log(X)\) is Normal. This family is often parameterized by the mean \(-\infty < \mu < \infty\) and variance \(\sigma^2 > 0\) of \(\log X\). The Log-Normal family is denoted as

\[
X \sim \operatorname{LogNormal}(\mu, \sigma^2).
\]

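PDF

The density is

\[
f_X(x) = \frac{1}{x \sqrt{2 \pi \sigma^2}} \exp\left[-\frac{(\log x - \mu)^2}{2 \sigma^2}\right] \quad \text{for } x > 0.
\]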

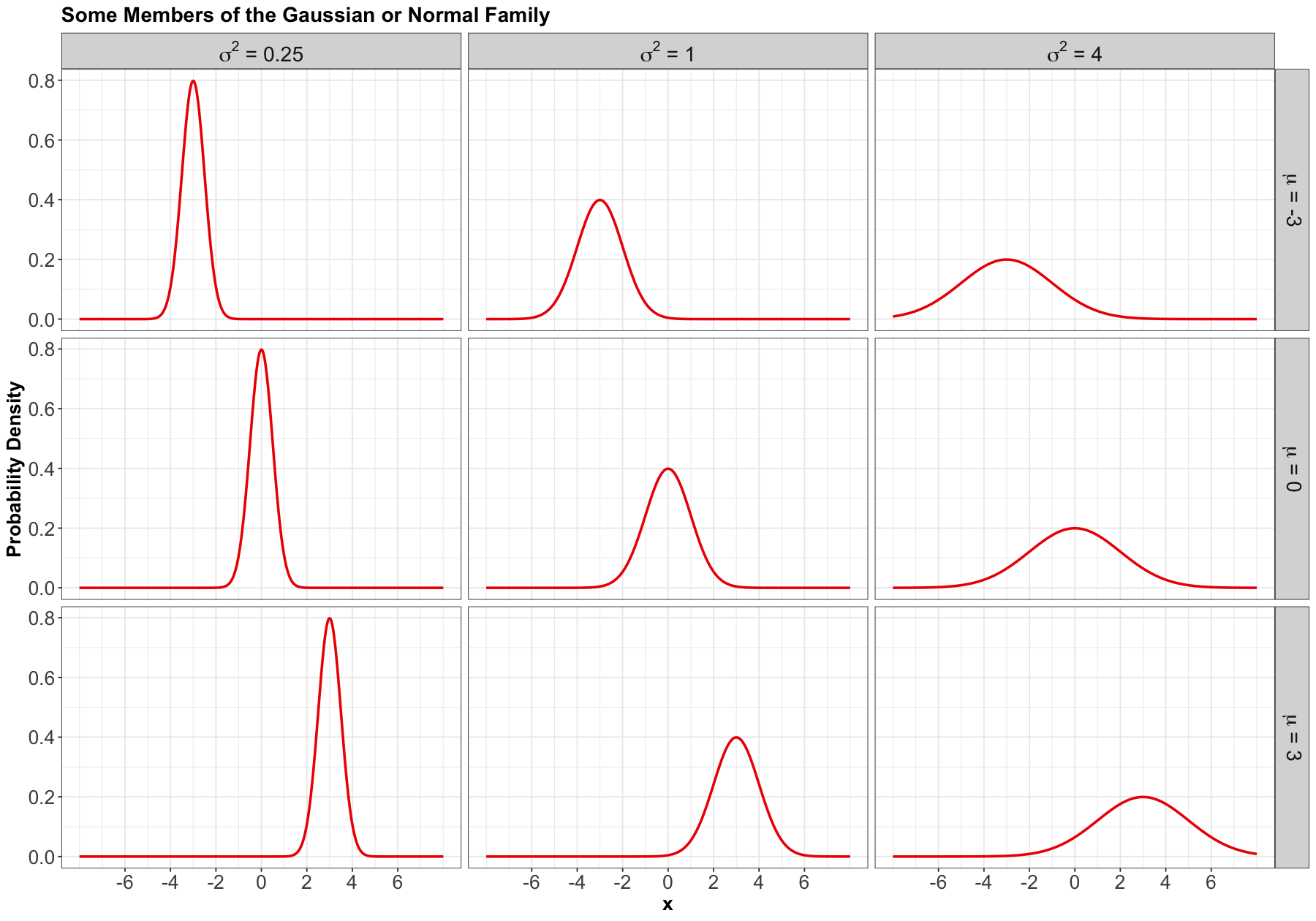

Here are some densities from members of this family:

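Mean

The mean of a Log-Normal random variable is given by

\[
\mathbb{E}(X) = \exp\left(\mu + \frac{\sigma^2}{2}\right).
\]

Variance

The variance of a Log-Normal random variable is given by

\[
\operatorname{Var}(X) = \left[\exp(\sigma^2) - 1\right] \exp(2 \mu + \sigma^2).
\]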

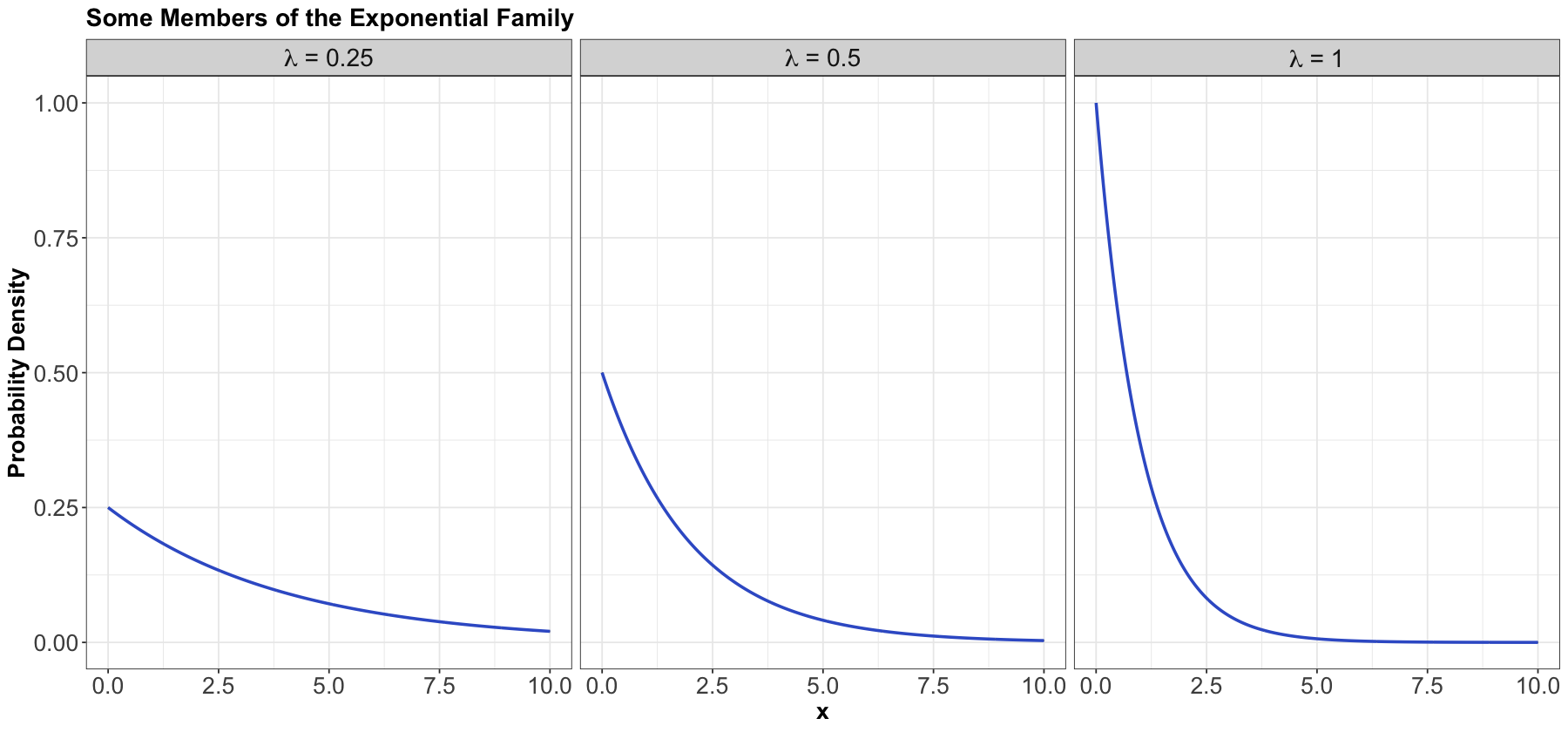
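A minimal sketch of the Log-Normal definition and moments, assuming the illustrative values \(\mu = 0.5\) and \(\sigma^2 = 0.25\) for the mean and variance of \(\log X\). It exponentiates Normal draws to get Log-Normal draws, and compares numerical integration with dlnorm() against the closed forms \(\exp(\mu + \sigma^2/2)\) and \([\exp(\sigma^2) - 1]\exp(2\mu + \sigma^2)\). R's dlnorm() takes meanlog = \(\mu\) and sdlog = \(\sigma\), i.e., parameters on the log scale.

```r
mu <- 0.5       # mean of log(X) (illustrative value)
sigma2 <- 0.25  # variance of log(X) (illustrative value)

# The definition in action: exponentiating Normal draws gives Log-Normal draws
set.seed(551)
draws <- exp(rnorm(100000, mean = mu, sd = sqrt(sigma2)))

# dlnorm() uses meanlog = mu and sdlog = sigma (both on the log scale)
lnorm_mean <- integrate(function(x) x * dlnorm(x, meanlog = mu, sdlog = sqrt(sigma2)),
                        lower = 0, upper = Inf)$value
lnorm_var <- integrate(function(x) (x - lnorm_mean)^2 *
                         dlnorm(x, meanlog = mu, sdlog = sqrt(sigma2)),
                       lower = 0, upper = Inf)$value

# Closed-form mean and variance
mean_formula <- exp(mu + sigma2 / 2)
var_formula <- (exp(sigma2) - 1) * exp(2 * mu + sigma2)

c(numeric = lnorm_mean, formula = mean_formula, simulated = mean(draws))
c(numeric = lnorm_var, formula = var_formula, simulated = var(draws))
```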

1.4. Exponential

Process

The Exponential family is for positive random variables, often interpreted as the wait time for some event to happen. It is characterized by the memoryless property: after waiting for any period of time, the remaining wait time has the same distribution as the original wait time. Formally, \(P(X > s + t \mid X > s) = P(X > t)\) for all \(s, t \geq 0\).

The family is characterized by a single parameter, usually either the mean wait time \(\beta > 0\), or its reciprocal, the average rate \(\lambda > 0\) at which events happen.

The Exponential family is denoted as

\[
X \sim \operatorname{Exponential}(\beta)
\]

or

\[
X \sim \operatorname{Exponential}(\lambda).
\]

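PDF

The density can be parameterized as

\[
f_X(x) = \frac{1}{\beta} \exp\left(-\frac{x}{\beta}\right) \quad \text{for } x \geq 0
\]

or

\[
f_X(x) = \lambda \exp(-\lambda x) \quad \text{for } x \geq 0.
\]

For any rate \(\lambda\), the density takes its maximum value \(\lambda\) at \(x = 0\) and decays from there: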

Mean

Using the \(\beta\) parameterization, the mean of an Exponential random variable is given by

\[
\mathbb{E}(X) = \beta.
\]

On the other hand, using the \(\lambda\) parameterization, the mean of an Exponential random variable is given by

\[
\mathbb{E}(X) = \frac{1}{\lambda}.
\]

Variance

Using the \(\beta\) parameterization, the variance of an Exponential random variable is given by

\[
\operatorname{Var}(X) = \beta^2.
\]

On the other hand, using the \(\lambda\) parameterization, the variance of an Exponential random variable is given by

\[
\operatorname{Var}(X) = \frac{1}{\lambda^2}.
\]

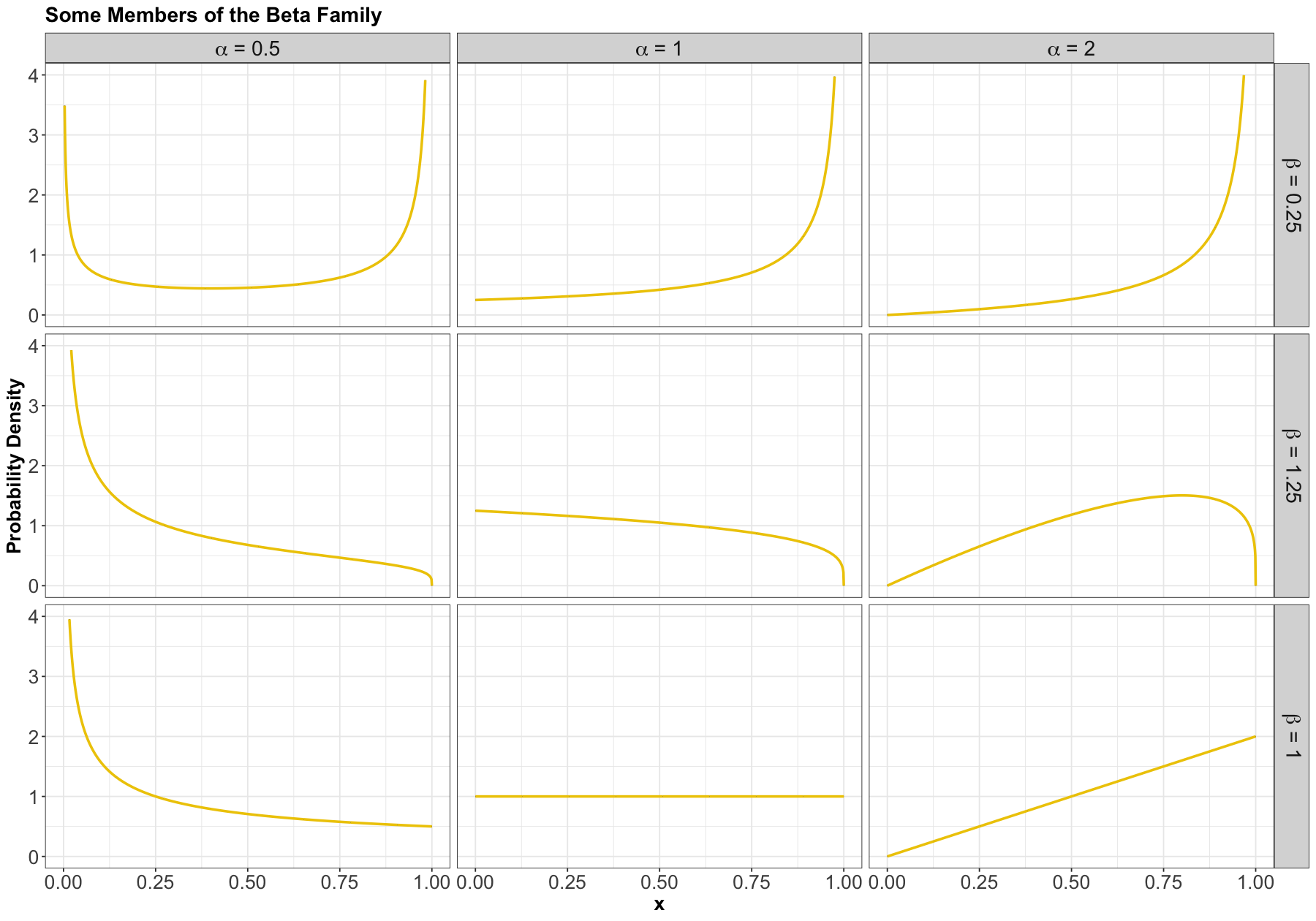
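The two Exponential parameterizations and the memoryless property can be checked in R. This is a sketch assuming an arbitrary mean wait time \(\beta = 3\) (so \(\lambda = 1/3\)). R's dexp() and pexp() are parameterized by rate = \(\lambda\), so a \(\beta\) parameterization has to be converted first. The helper surv() is a hypothetical name we introduce for \(P(X > q)\).

```r
scale_beta <- 3                # mean wait time beta (illustrative value)
rate_lambda <- 1 / scale_beta  # R's *exp() functions are parameterized by the rate

# Both parameterizations give the same density
x <- c(0.5, 1, 2, 5)
dens_beta <- (1 / scale_beta) * exp(-x / scale_beta)
dens_r <- dexp(x, rate = rate_lambda)

# Mean beta and variance beta^2 (equivalently 1 / lambda and 1 / lambda^2)
exp_mean <- integrate(function(x) x * dexp(x, rate = rate_lambda), 0, Inf)$value
exp_var <- integrate(function(x) (x - scale_beta)^2 * dexp(x, rate = rate_lambda),
                     0, Inf)$value

# Memoryless property: P(X > s + t | X > s) equals P(X > t)
surv <- function(q) pexp(q, rate = rate_lambda, lower.tail = FALSE)  # P(X > q)
s <- 2
t_more <- 1.5
c(conditional = surv(s + t_more) / surv(s), unconditional = surv(t_more))
```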

1.5. Beta

Process

The Beta family of distributions is defined for random variables taking values between \(0\) and \(1\), so it is useful for modelling the distribution of proportions. It is characterized by two positive shape parameters, \(\alpha > 0\) and \(\beta > 0\). This family is quite flexible and has the Uniform distribution as a special case: \(\alpha = \beta = 1\) gives \(\operatorname{Uniform}(0, 1)\).

The Beta family is denoted as

\[
X \sim \operatorname{Beta}(\alpha, \beta).
\]

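PDF

The density is parameterized as

\[
f_X(x) = \frac{\Gamma(\alpha + \beta)}{\Gamma(\alpha) \Gamma(\beta)} x^{\alpha - 1} (1 - x)^{\beta - 1} \quad \text{for } 0 < x < 1,
\]

where \(\Gamma(\cdot)\) is the Gamma function.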

Here are some examples of densities:

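Mean

The mean of a Beta random variable is given by

\[
\mathbb{E}(X) = \frac{\alpha}{\alpha + \beta}.
\]

Variance

The variance of a Beta random variable is given by

\[
\operatorname{Var}(X) = \frac{\alpha \beta}{(\alpha + \beta)^2 (\alpha + \beta + 1)}.
\]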

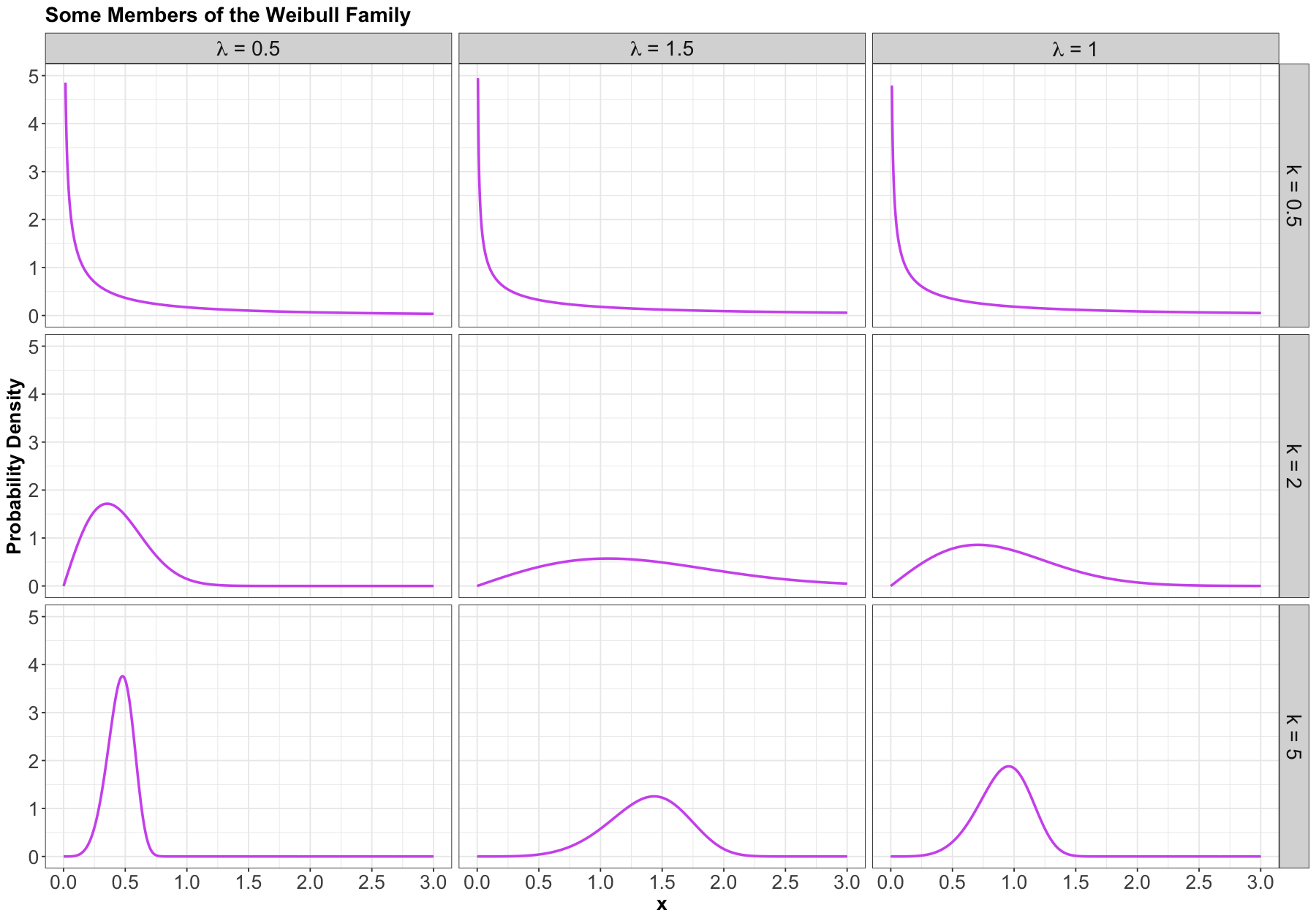
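A sketch checking the Beta mean \(\alpha/(\alpha + \beta)\) and variance \(\alpha\beta/[(\alpha + \beta)^2(\alpha + \beta + 1)]\) with dbeta(), plus the Uniform special case. The values \(\alpha = 2\) and \(\beta = 5\) are arbitrary illustrative choices, and the variable is named beta_par only to avoid confusion with R's built-in beta() function.

```r
alpha <- 2
beta_par <- 5   # the Beta parameter beta (named to avoid confusion with beta())

# dbeta() takes the shape parameters as shape1 = alpha and shape2 = beta
beta_mean <- integrate(function(x) x * dbeta(x, shape1 = alpha, shape2 = beta_par),
                       0, 1)$value
beta_var <- integrate(function(x) (x - beta_mean)^2 * dbeta(x, shape1 = alpha, shape2 = beta_par),
                      0, 1)$value

c(numeric = beta_mean, formula = alpha / (alpha + beta_par))
c(numeric = beta_var,
  formula = alpha * beta_par / ((alpha + beta_par)^2 * (alpha + beta_par + 1)))

# Special case: Beta(1, 1) coincides with Uniform(0, 1) (checked at interior points)
x_grid <- seq(0.05, 0.95, by = 0.1)
all.equal(dbeta(x_grid, shape1 = 1, shape2 = 1), dunif(x_grid, min = 0, max = 1))
```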

1.6. Weibull

Process

The Weibull family is a generalization of the Exponential family that allows an event to become more likely the longer you wait. Because of this flexibility and interpretation, this family is used heavily in survival analysis when modelling the time until an event.

This family is characterized by two parameters, a scale parameter \(\lambda > 0\) and a shape parameter \(k > 0\) (where \(k = 1\) results in the Exponential family).

The Weibull family is denoted as

\[
X \sim \operatorname{Weibull}(\lambda, k).
\]

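PDF

The density is parameterized as

\[
f_X(x) = \frac{k}{\lambda} \left(\frac{x}{\lambda}\right)^{k - 1} \exp\left[-\left(\frac{x}{\lambda}\right)^k\right] \quad \text{for } x \geq 0.
\]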

Here are some examples of densities:

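Mean

The mean of a Weibull random variable is given by

\[
\mathbb{E}(X) = \lambda \Gamma\left(1 + \frac{1}{k}\right).
\]

Variance

The variance of a Weibull random variable is given by

\[
\operatorname{Var}(X) = \lambda^2 \left[\Gamma\left(1 + \frac{2}{k}\right) - \Gamma^2\left(1 + \frac{1}{k}\right)\right].
\]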

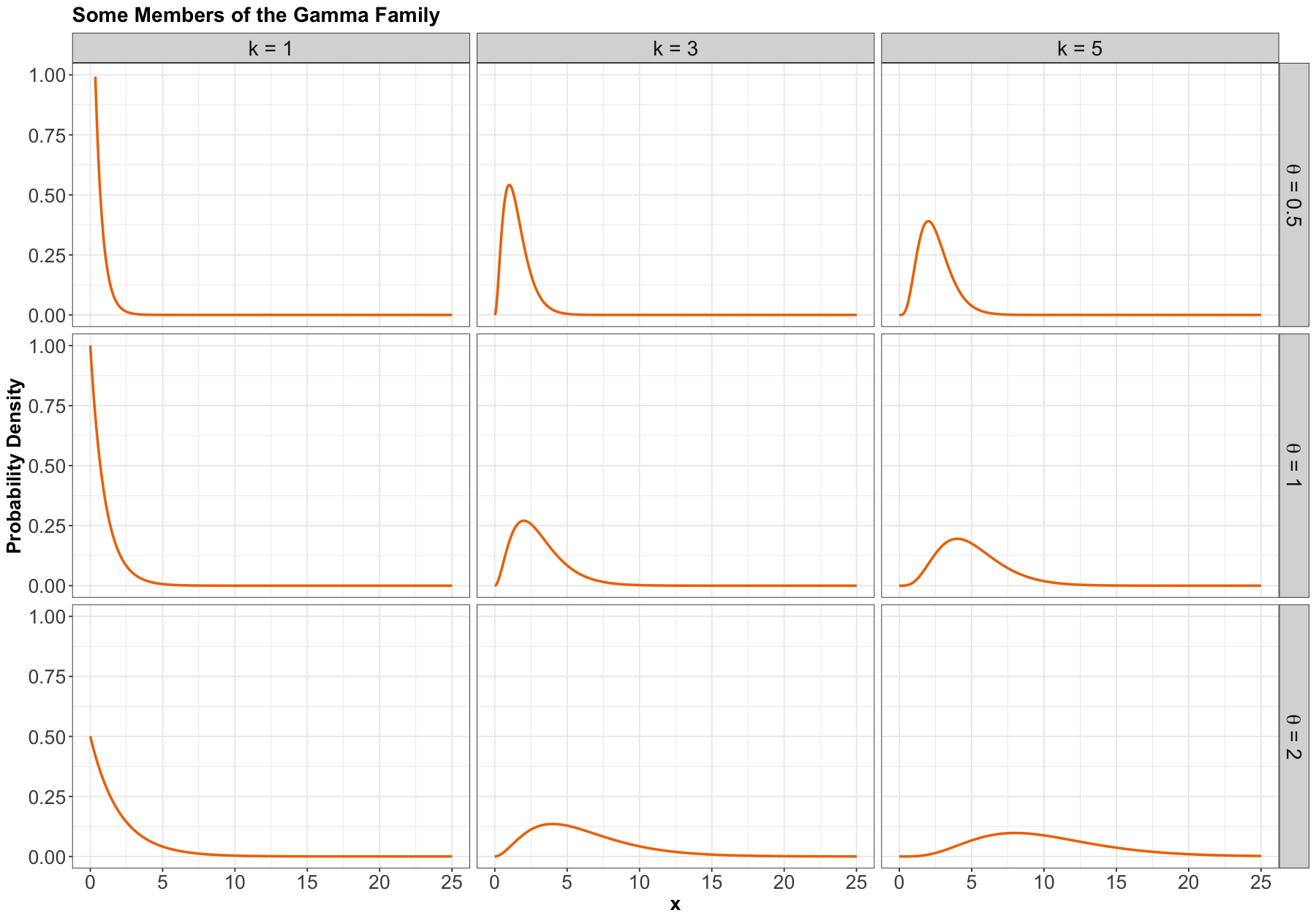
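The sketch below checks the Weibull mean \(\lambda \Gamma(1 + 1/k)\) and variance \(\lambda^2[\Gamma(1 + 2/k) - \Gamma^2(1 + 1/k)]\), assuming the illustrative values \(\lambda = 2\) and \(k = 1.5\). To make the “more likely the longer you wait” interpretation concrete, it also computes the ratio \(f_X(x)/P(X > x)\) (often called the hazard rate), which grows with \(x\) when \(k > 1\), and confirms that \(k = 1\) recovers an Exponential with rate \(1/\lambda\). Note that R's dweibull() takes shape = \(k\) and scale = \(\lambda\).

```r
lambda_w <- 2  # scale (illustrative value)
k <- 1.5       # shape (illustrative value, k > 1)

# Mean and variance: numerical integration versus the closed forms
weib_mean <- integrate(function(x) x * dweibull(x, shape = k, scale = lambda_w),
                       0, Inf)$value
mean_formula <- lambda_w * gamma(1 + 1 / k)
var_formula <- lambda_w^2 * (gamma(1 + 2 / k) - gamma(1 + 1 / k)^2)
weib_var <- integrate(function(x) (x - mean_formula)^2 * dweibull(x, shape = k, scale = lambda_w),
                      0, Inf)$value

# Hazard rate f(x) / P(X > x): increases with the waiting time when k > 1
times <- c(0.5, 1, 2, 4)
hazard <- dweibull(times, shape = k, scale = lambda_w) /
  pweibull(times, shape = k, scale = lambda_w, lower.tail = FALSE)
hazard

# With k = 1, the Weibull reduces to an Exponential with rate 1 / lambda
all.equal(dweibull(times, shape = 1, scale = lambda_w), dexp(times, rate = 1 / lambda_w))
```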

1.7. Gamma

Process

The Gamma family is another useful two-parameter family with support on the non-negative real numbers. One common parameterization is with a shape parameter \(k > 0\) and a scale parameter \(\theta > 0\).

The Gamma family can be denoted as

\[
X \sim \operatorname{Gamma}(k, \theta).
\]

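PDF

The density is parameterized as

\[
f_X(x) = \frac{1}{\Gamma(k) \theta^k} x^{k - 1} \exp\left(-\frac{x}{\theta}\right) \quad \text{for } x \geq 0,
\]

where \(\Gamma(\cdot)\) is the Gamma function.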

Here are some densities:

Mean

The mean of a Gamma random variable is given by

\[
\mathbb{E}(X) = k \theta.
\]

Variance

The variance of a Gamma random variable is given by

\[
\operatorname{Var}(X) = k \theta^2.
\]
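A sketch comparing the Gamma density formula with R's dgamma(), and checking the mean \(k\theta\) and variance \(k\theta^2\) numerically. The values \(k = 3\) and \(\theta = 2\) are arbitrary. dgamma() accepts either a rate or a scale argument; we pass scale = \(\theta\) to match the parameterization above.

```r
k <- 3       # shape (illustrative value)
theta <- 2   # scale (illustrative value)

# The density formula written out by hand versus dgamma() with scale = theta
x <- c(0.5, 2, 6)
dens_manual <- x^(k - 1) * exp(-x / theta) / (gamma(k) * theta^k)
dens_r <- dgamma(x, shape = k, scale = theta)

# Mean and variance by numerical integration
gam_mean <- integrate(function(x) x * dgamma(x, shape = k, scale = theta), 0, Inf)$value
gam_var <- integrate(function(x) (x - k * theta)^2 * dgamma(x, shape = k, scale = theta),
                     0, Inf)$value

c(numeric = gam_mean, formula = k * theta)     # both about 6
c(numeric = gam_var, formula = k * theta^2)    # both about 12
```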

1.8. Relevant R Functions

R has functions for many distribution families. We have seen a few already in the case of discrete families, but here is a more complete overview.

The functions are of the form <x><dist>, where <dist> is an abbreviation of a distribution family, and <x> is one of d, p, q, or r, depending on exactly what about the distribution you would like to calculate.

The possible prefixes for <x> indicate the following:

- d: density function, which we call \(f_X(x)\).
- p: cumulative distribution function (CDF), which we call \(F_X(x)\).
- q: quantile function (inverse CDF).
- r: random number generator.

Here are some abbreviations for <dist>:

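- unif: Uniform (continuous).
- norm: Normal (continuous).
- lnorm: Log-Normal (continuous).
- exp: Exponential (continuous).
- beta: Beta (continuous).
- weibull: Weibull (continuous).
- gamma: Gamma (continuous).
- geom: Geometric (discrete).
- pois: Poisson (discrete).
- binom: Binomial (discrete).
- etc.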

Let us check the following examples:

For the Uniform family, we have the following R functions: dunif(), punif(), qunif(), and runif().

For the Gaussian or Normal family, we have the following R functions: dnorm(), pnorm(), qnorm(), and rnorm().
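To see how the four prefixes fit together, here is a short sketch using the standard Normal \(\mathcal N(0, 1)\) and the arbitrary point \(x = 1.3\): the p function is the area under the d function, the q function inverts the p function, and the r function draws random values.

```r
# Standard Normal N(0, 1) and an arbitrary point, for illustration
x <- 1.3

dens <- dnorm(x)    # d: density f_X(1.3)
p <- pnorm(x)       # p: CDF F_X(1.3) = P(X <= 1.3)
x_back <- qnorm(p)  # q: quantile function (inverse CDF), recovers 1.3

# The CDF is the area under the density up to x
area <- integrate(dnorm, lower = -Inf, upper = x)$value

set.seed(551)
draws <- rnorm(5)   # r: five random draws from N(0, 1)

c(cdf = p, area = area, recovered_x = x_back)
```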

Important

For any R function, you can get syntax help via the RStudio console. For instance, if we want help with the function dunif(), we type ?dunif in the console. More ways to get help are listed here.

Now, let us proceed with some in-class questions via iClicker.

Exercise 28

What R function do we need to obtain the density of \(X \sim \mathcal N(\mu = 2, \sigma^2 = 4)\) at the point \(x = 3\)?

Select the correct option:

A. pnorm(q = 3, mean = 2, sd = 2)

B. dnorm(x = 3, mean = 2, sd = 4)

C. pnorm(q = 3, mean = 2, sd = 4)

D. dnorm(x = 3, mean = 2, sd = 2)

Exercise 29

What R function do we need to obtain the CDF for \(X \sim \operatorname{Uniform}(a = 0, b = 2)\) evaluated at points \(x = 0.25, 0.5, 0.75\)?

Select the correct option:

A. qunif(p = c(0.25, 0.5, 0.75), min = 0, max = 2, lower.tail = TRUE)

B. punif(q = c(0.25, 0.5, 0.75), min = 0, max = 2, lower.tail = TRUE)

C. dunif(x = c(0.25, 0.5, 0.75), min = 0, max = 2)

D. punif(q = c(0.25, 0.5, 0.75), min = 0, max = 2, lower.tail = FALSE)

Exercise 30

What R function do we need to obtain the median of \(X \sim \operatorname{Uniform}(a = 0, b = 2)\)?

Select the correct option:

A. qunif(p = 0.5, min = 0, max = 2, lower.tail = TRUE)

B. punif(q = 0.5, min = 0, max = 2, lower.tail = TRUE)

C. dunif(x = 0.5, min = 0, max = 2)

D. punif(q = 0.5, min = 0, max = 2, lower.tail = FALSE)

Exercise 31

What R function do we need to generate a random sample of size \(10\) from the distribution \(\mathcal N(\mu = 0, \sigma^2 = 25)\)?

Select the correct option:

A. runif(n = 10, min = 0, max = 25)

B. rnorm(n = 10, mean = 0, sd = 25)

C. rnorm(n = 10, mean = 0, sd = 5)

D. runif(n = 10, min = 0, max = 5)

2. Continuous Multivariate Distributions

In the discrete case, we already saw joint distributions, univariate conditional distributions, multivariate conditional distributions, marginal distributions, etc. All these concepts are carried over to continuous distributions. Let us start with two continuous random variables (i.e., a bivariate case).

Important

Let us make a note on depictions of distributions. There is such a thing as a multivariate CDF. It comes in handy in Copula Theory (a more advanced field in Statistics). Otherwise, it is not as useful as a multivariate density, so we will not cover it in this course. Moreover, there is no such thing as a multivariate quantile function.

2.1. Multivariate Probability Density Functions

Recall the joint probability mass function (PMF) from Lecture 3: Joint Probability between \(\text{Gang}\) demand and length of stay (\(\text{LOS}\)):

|  | Gang = 1 | Gang = 2 | Gang = 3 | Gang = 4 |
|---|---|---|---|---|
| LOS = 1 | 0.00170 | 0.04253 | 0.12471 | 0.08106 |
| LOS = 2 | 0.02664 | 0.16981 | 0.13598 | 0.01757 |
| LOS = 3 | 0.05109 | 0.11563 | 0.03203 | 0.00125 |
| LOS = 4 | 0.04653 | 0.04744 | 0.00593 | 0.00010 |
| LOS = 5 | 0.07404 | 0.02459 | 0.00135 | 0.00002 |

Each entry in the table corresponds to the probability of that unique row (\(\text{LOS}\) value) and column (\(\text{Gang}\) value). These probabilities add up to 1.
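The table can be stored as an R matrix to confirm that the probabilities add up to 1. As a sketch, we also recover the marginal PMFs by summing rows and columns, and the conditional PMF of \(\text{Gang}\) given \(\text{LOS} = 1\) by re-normalizing the first row. The object names are our own choices.

```r
# Joint PMF of LOS (rows) and Gang demand (columns), copied from the table
joint_pmf <- matrix(
  c(0.00170, 0.04253, 0.12471, 0.08106,
    0.02664, 0.16981, 0.13598, 0.01757,
    0.05109, 0.11563, 0.03203, 0.00125,
    0.04653, 0.04744, 0.00593, 0.00010,
    0.07404, 0.02459, 0.00135, 0.00002),
  nrow = 5, byrow = TRUE,
  dimnames = list(LOS = 1:5, Gang = 1:4)
)

sum(joint_pmf)      # total probability: 1
rowSums(joint_pmf)  # marginal PMF of LOS: 0.25, 0.35, 0.2, 0.1, 0.1
colSums(joint_pmf)  # marginal PMF of Gang: 0.2, 0.4, 0.3, 0.1

# Conditional PMF of Gang given LOS = 1: the first row, re-normalized to sum to 1
gang_given_los1 <- joint_pmf["1", ] / sum(joint_pmf["1", ])
gang_given_los1
```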

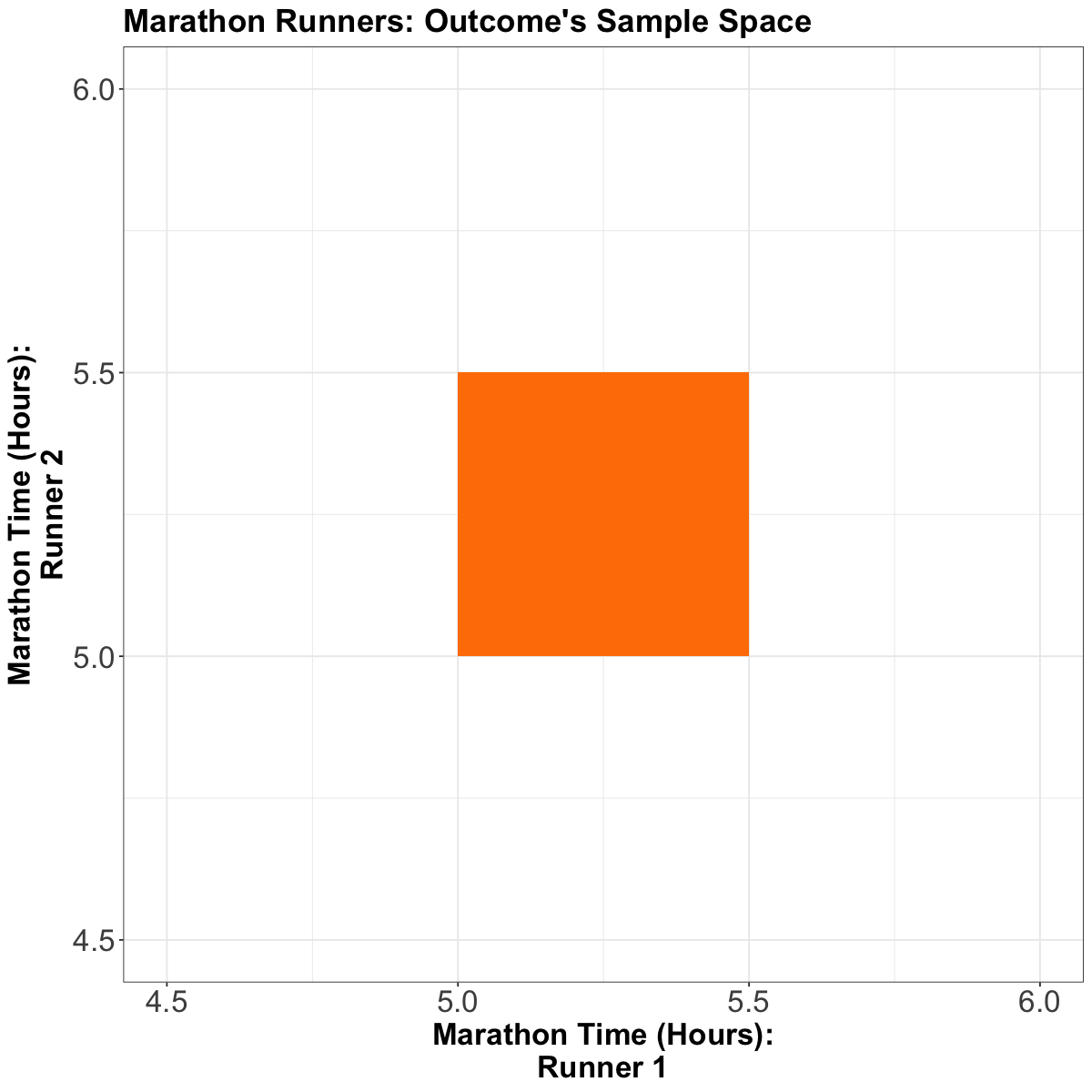

For the continuous case, instead of rows and columns, we have an \(x\) and \(y\)-axis for our two random variables, defining a region of possible values. For example, suppose two marathon runners can only finish a marathon between \(5.0\) and \(5.5\) hours each, and their end times are totally random. In that case, the possible values are indicated by the orange square in the following plot:

Each point in the square is like an entry in the joint probability mass function (PMF) table in the discrete case, except that now, instead of holding a probability, it holds a density. The density function is then a surface over this square (or, more generally, over the sample space of the outcome). It is a function that takes two values (the marathon times of Runner 1 and Runner 2) and returns a single density value. This function is called a bivariate density function.

We can extend the above plot to a 3D setting as below. Note that the height of the orange surface along the \(z\)-axis gives the joint density of the two runners’ times.

2.2. Calculating Probabilities

Recall that, in the univariate continuous case, we calculated probabilities as areas under the density curve. Now, let us check the specifics of the bivariate density function.

Definition of the Bivariate Probability Density Function

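Let \(X\) and \(Y\) be two continuous random variables. Their joint PDF evaluated at the point \((x, y)\) is usually denoted

\[
f_{X, Y}(x, y).
\]

With this, we have a density surface and can calculate probabilities as volumes under it. For a region \(R\) of the \(xy\)-plane,

\[
P[(X, Y) \in R] = \iint_R f_{X, Y}(x, y) \, \mathrm{d}x \, \mathrm{d}y.
\]

A valid joint PDF must be non-negative everywhere, and the total volume under it must equal 1. Formally, this may be written as

\[
f_{X, Y}(x, y) \geq 0 \quad \text{for all } x, y, \qquad \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} f_{X, Y}(x, y) \, \mathrm{d}x \, \mathrm{d}y = 1.
\]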

Note what this implies about the units of \(f_{X, Y}(x,y)\). Since the double integral must equal the dimensionless number 1, if \(x\) is measured in metres and \(y\) is measured in seconds, then the units of \(f_{X, Y}(x,y)\) are \(\text{m}^{-1} \text{s}^{-1}\).
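These ideas can be checked numerically in R. Since the marathon example is the subject of the exercises below, this sketch uses a different, hypothetical joint density, \(f_{X, Y}(x, y) = x + y\) on the unit square \([0, 1] \times [0, 1]\) (and \(0\) elsewhere). It computes volumes as nested one-dimensional integrals with integrate(); the helper volume() is a name we introduce for illustration, and it only handles regions of the form \(x_{\text{low}} \leq x \leq x_{\text{high}}\), \(y_{\text{low}}(x) \leq y \leq y_{\text{high}}(x)\).

```r
# Hypothetical joint density (not from the lecture): f(x, y) = x + y on the unit square
f_xy <- function(x, y) ifelse(x >= 0 & x <= 1 & y >= 0 & y <= 1, x + y, 0)

# Non-negativity, checked on a grid of points
pts <- expand.grid(x = seq(0, 1, by = 0.1), y = seq(0, 1, by = 0.1))
all(f_xy(pts$x, pts$y) >= 0)

# Volume over {x_low <= x <= x_high, y_low(x) <= y <= y_high(x)} via nested integrals
volume <- function(x_low, x_high, y_low, y_high) {
  inner <- function(x) sapply(x, function(xx) {
    integrate(function(y) f_xy(xx, y), lower = y_low(xx), upper = y_high(xx))$value
  })
  integrate(inner, lower = x_low, upper = x_high)$value
}

total <- volume(0, 1, function(x) 0, function(x) 1)           # total volume: 1
p_x_half <- volume(0, 0.5, function(x) 0, function(x) 1)      # P(X <= 0.5) = 0.375
p_sum_le_1 <- volume(0, 1, function(x) 0, function(x) 1 - x)  # P(X + Y <= 1) = 1/3
```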

Now, let us answer the following questions:

Exercise 32

If the density is equal/flat across the entire sample space, what is the height of this surface? That is, what does the density evaluate to? What does it evaluate to outside of the sample space?

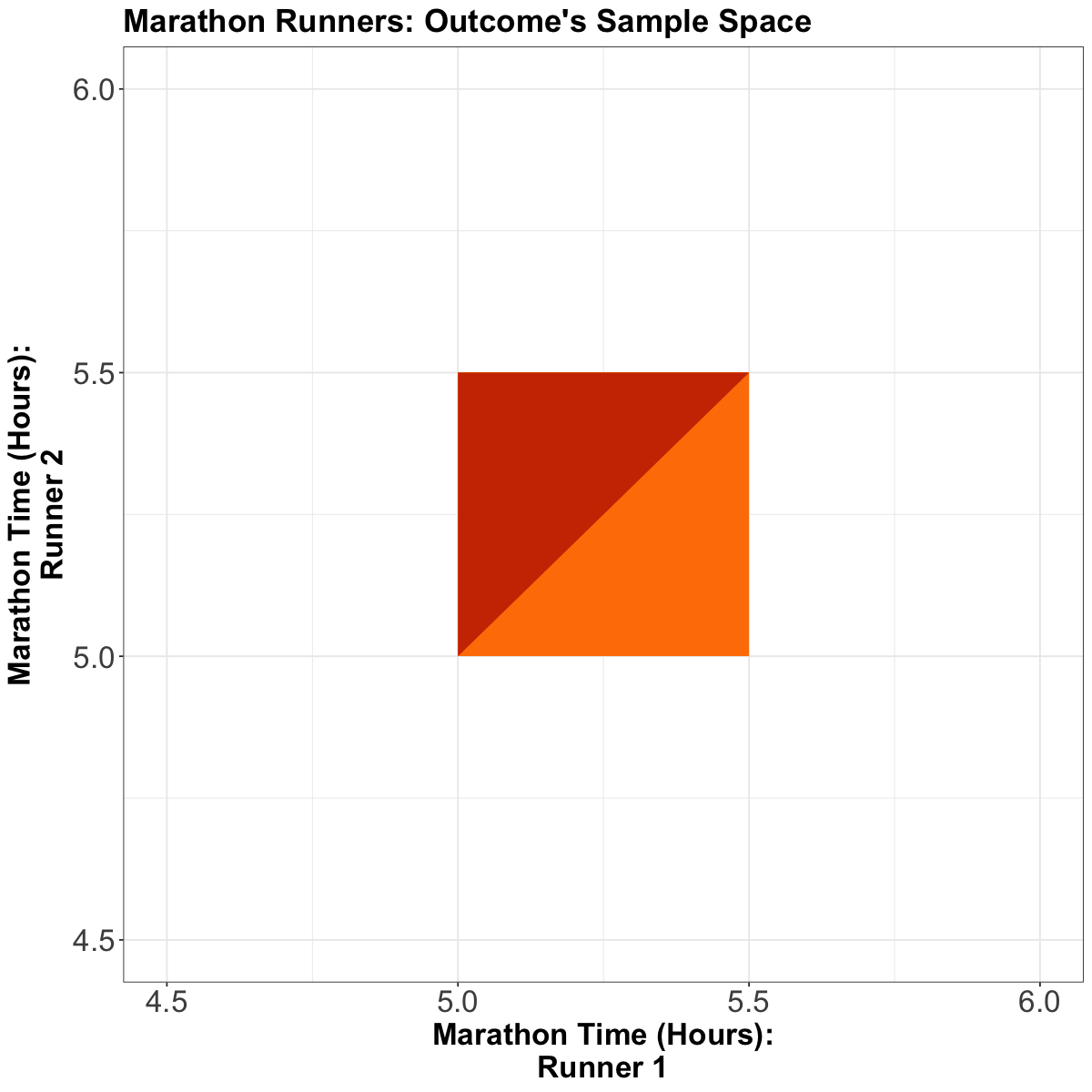

Exercise 33

Let \(X\) be the marathon time of Runner 1 in hours, and \(Y\) be the marathon time of Runner 2 in hours. What is the probability that Runner 1 will finish the marathon before Runner 2, i.e., \(P(X < Y)\)? The plot below might be helpful to visualize this.

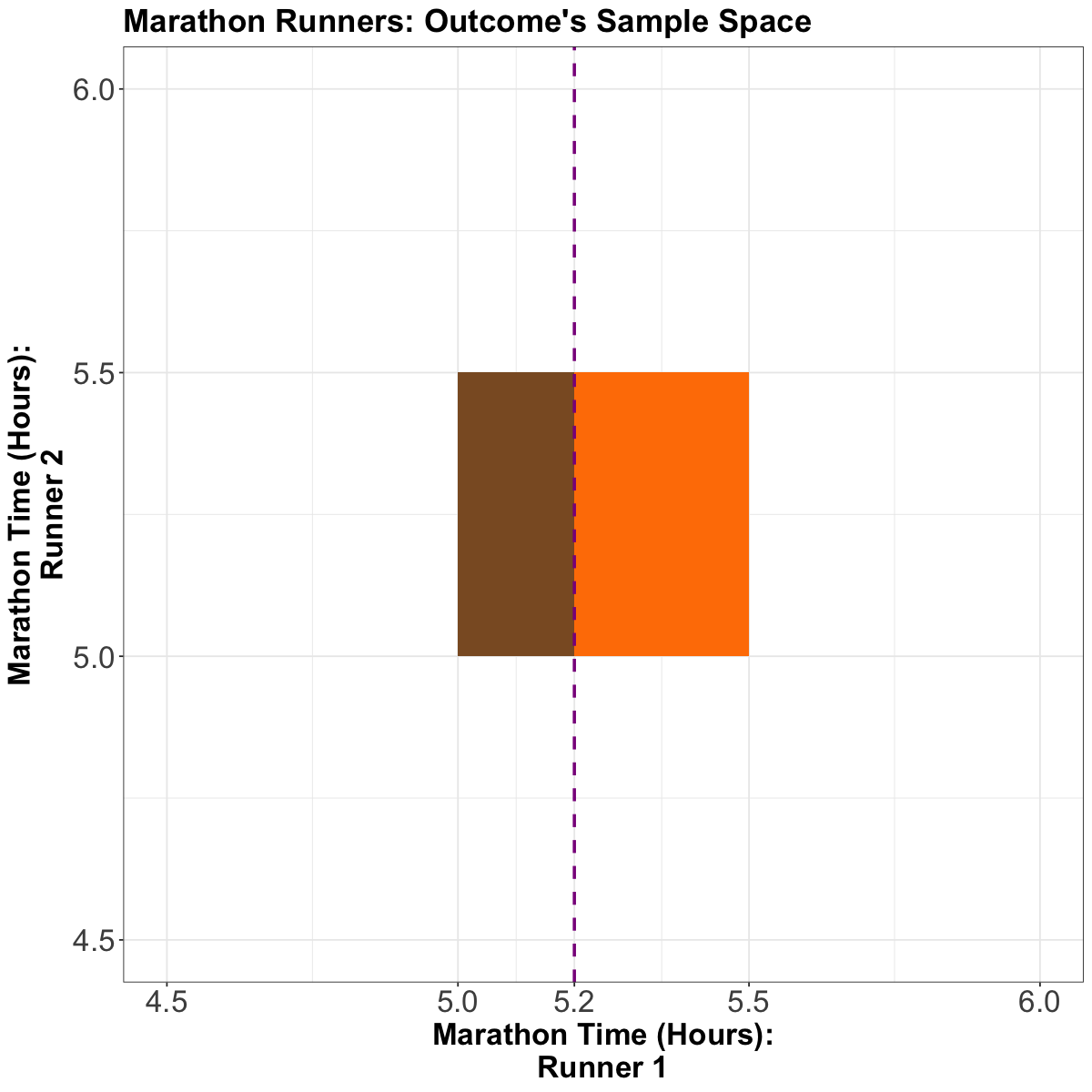

Exercise 34

Now, what is the probability that Runner 1 finishes in \(5.2\) hours or less, i.e., \(P(X \leq 5.2)\)? The plot below might be helpful to visualize this.

3. Conditional Distributions (Revisited)

Recall the basic formula for conditional probabilities for events \(A\) and \(B\):

\[
P(A \mid B) = \frac{P(A \cap B)}{P(B)}. \tag{33}
\]

However, this formula only holds when \(P(B) \neq 0\), and it is not useful when \(P(A) = 0\). We face both situations in the continuous world.

3.1. When \(P(A) = 0\)#

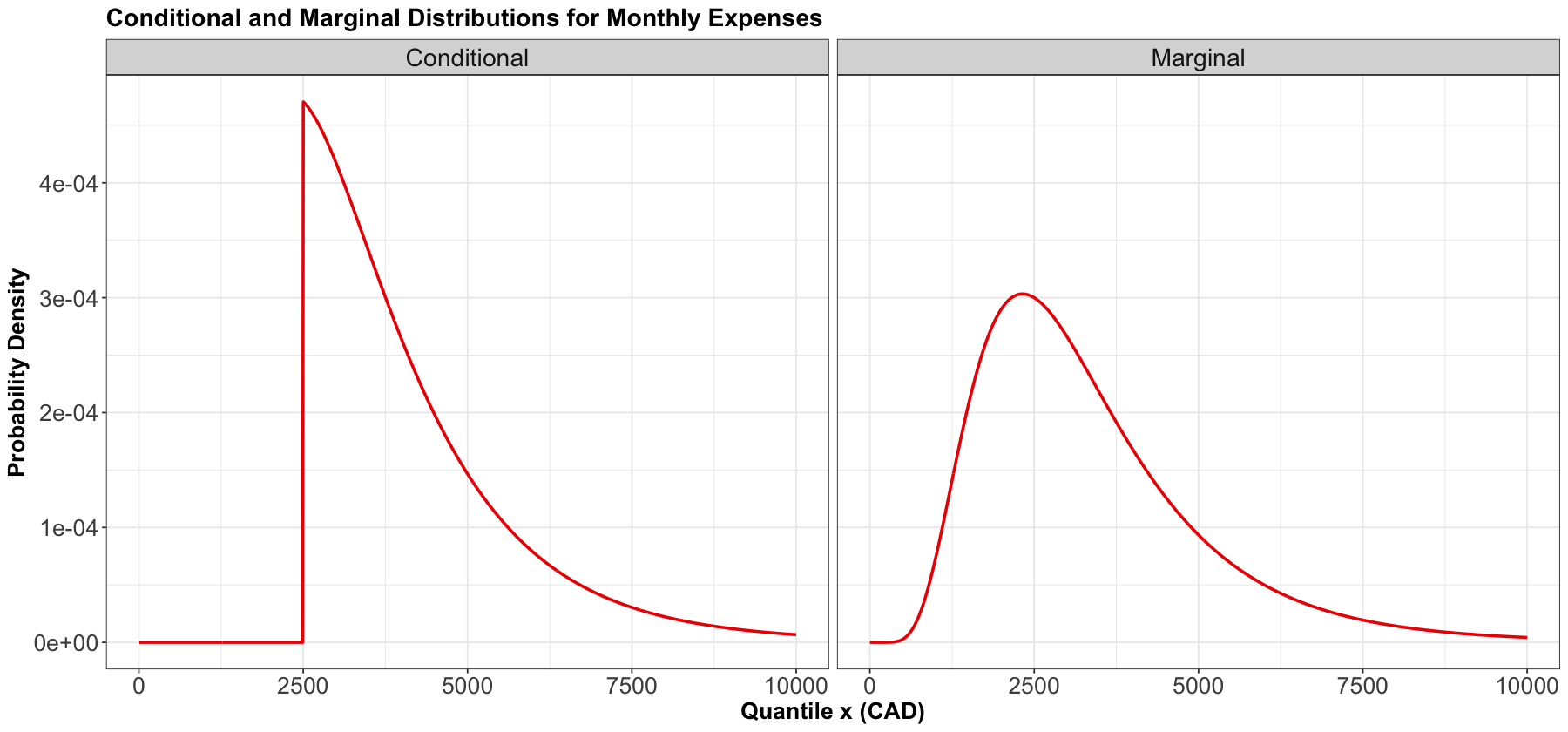

To describe this situation, let us use a univariate continuous example: the monthly expenses.

Suppose the month is halfway over, and you only have \(\$2500\) worth of expenses so far! Given this information, what is the distribution of this month’s total expenditures now?

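If we use the Law of Conditional Probability (33), we get a formula that is not useful. Let \(X\) be this month’s total expenditures, and let

\[
A = \{X = x\} \quad \text{and} \quad B = \{X \geq 2500\}.
\]

Using Equation (33), the conditional probability would be given by

\[
P(X = x \mid X \geq 2500) = \frac{P(X = x, X \geq 2500)}{P(X \geq 2500)} = \frac{P(X = x)}{P(X \geq 2500)} \quad \text{for } x \geq 2500.
\]

This is not useful because, in the continuous case, any single outcome \(x\) has probability \(0\). Hence, this expression equals \(0\) for every \(x\) and tells us nothing about the distribution.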

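Instead, in general, we replace probabilities with densities. In this case, what we actually have is

\[
f_{X \mid X \geq 2500}(x) = \frac{f_X(x)}{P(X \geq 2500)} \quad \text{for } x \geq 2500
\]

and

\[
f_{X \mid X \geq 2500}(x) = 0 \quad \text{for } x < 2500.
\]

The resulting conditional PDF is just the original PDF confined to \(x \geq 2500\) and re-normalized to have area \(1\).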

Below, we plot the marginal distribution \(f_X(x)\) and the conditional distribution \(f_{X \mid X \geq 2500}(x)\). Notice the conditional distribution is just a segment of the marginal, and then re-normalized to have an area under the curve equal to \(1\).
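A sketch of the confine-and-renormalize recipe in R. The lecture’s model for monthly expenses is not reproduced here, so this example assumes a hypothetical Log-Normal model with expenses measured in thousands of dollars (so the \(\$2500\) threshold becomes 2.5). The conditional probability at the end uses the fact that, for \(x \geq 2500\), \(P(X > x \mid X \geq 2500) = P(X > x)/P(X \geq 2500)\).

```r
# Hypothetical expense model (an assumption, not the lecture's model):
# X = monthly expenses in thousands of dollars, Log-Normal with meanlog = log(3), sdlog = 0.3
meanlog <- log(3)
sdlog <- 0.3
threshold <- 2.5  # $2500 expressed in thousands of dollars

f_x <- function(x) dlnorm(x, meanlog = meanlog, sdlog = sdlog)

# Normalizing constant P(X >= 2.5)
p_b <- plnorm(threshold, meanlog = meanlog, sdlog = sdlog, lower.tail = FALSE)

# Conditional density: the original density confined to x >= 2.5, then re-normalized
f_x_given <- function(x) ifelse(x >= threshold, f_x(x) / p_b, 0)

# The conditional density integrates to 1 again
area <- integrate(f_x_given, lower = threshold, upper = Inf)$value

# P(X > 4 | X >= 2.5), via the conditional density and via P(X > 4) / P(X >= 2.5)
p_cond_num <- integrate(f_x_given, lower = 4, upper = Inf)$value
p_cond_ratio <- plnorm(4, meanlog = meanlog, sdlog = sdlog, lower.tail = FALSE) / p_b

c(area = area, numeric = p_cond_num, ratio = p_cond_ratio)
```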

3.2. When \(P(B) = 0\)

To describe this situation, let us use the marathon runners’ example again:

If Runner 1 ended up finishing in \(5.2\) hours, what is the distribution of Runner 2’s time?

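Let \(X\) be the time for Runner 1 and \(Y\) the time for Runner 2. What we are asking for is the conditional distribution of \(Y\) given \(X = 5.2\), for example,

\[
P(Y \leq y \mid X = 5.2) = \frac{P(Y \leq y, X = 5.2)}{P(X = 5.2)}.
\]

However, we already pointed out that, for a continuous random variable,

\[
P(X = 5.2) = 0.
\]

Therefore, we need a proper workaround. In this case, the stopwatch used to measure the run time has rounded the true run time to \(5.2\) hours, even though, in reality, it would have been something like \(5.2133843789373 \dots\) hours.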

As seen earlier, plugging in the formula for conditional probabilities will not work. But, as in the case of \(P(A) = 0\), we can generally replace probabilities with densities.

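We end up with

\[
f_{Y \mid X}(y \mid x) = \frac{f_{X, Y}(x, y)}{f_X(x)},
\]

where \(f_X(x) = \int_{-\infty}^{\infty} f_{X, Y}(x, y) \, \mathrm{d}y\) is the marginal density of \(X\); in our example, \(x = 5.2\). This formula is true in general, for any \(x\) such that \(f_X(x) > 0\).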
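To see the formula at work, here is a sketch with a hypothetical joint density chosen for illustration, \(f_{X, Y}(x, y) = x + y\) on the unit square (not the marathon example). Its marginal is \(f_X(x) = x + 1/2\), so the conditional density of \(Y\) given \(X = 0.2\) should be \((0.2 + y)/0.7\) on \([0, 1]\). The names f_xy, f_x, and f_y_given_x0 are our own.

```r
# Hypothetical joint density (not from the lecture): f(x, y) = x + y on the unit square
f_xy <- function(x, y) ifelse(x >= 0 & x <= 1 & y >= 0 & y <= 1, x + y, 0)

# Marginal density of X: integrate the joint density over y
f_x <- function(x) sapply(x, function(xx) integrate(function(y) f_xy(xx, y), 0, 1)$value)

# Conditional density of Y given X = x0: f_{X,Y}(x0, y) / f_X(x0)
x0 <- 0.2
f_y_given_x0 <- function(y) f_xy(x0, y) / f_x(x0)

f_x(x0)                                      # marginal density at x0: 0.2 + 1/2 = 0.7
area <- integrate(f_y_given_x0, 0, 1)$value  # a valid density in y: area 1
f_y_given_x0(c(0, 0.5, 1))                   # equals (0.2 + y) / 0.7
```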