Load the twitterAnalysis package

library(twitterAnalysis)

#> Loading required package: dplyr

#>

#> Attaching package: 'dplyr'

#> The following objects are masked from 'package:stats':

#>

#> filter, lag

#> The following objects are masked from 'package:base':

#>

#> intersect, setdiff, setequal, union

#> Loading required package: stopwords

#> Loading required package: tm

#> Loading required package: NLP

#>

#> Attaching package: 'tm'

#> The following object is masked from 'package:stopwords':

#>

#> stopwords

#> Loading required package: wordcloud

#> Loading required package: RColorBrewer

#> Loading required package: twitteR

#>

#> Attaching package: 'twitteR'

#> The following objects are masked from 'package:dplyr':

#>

#> id, location

#> Loading required package: syuzhet

Obtain Twitter API credentials

Obtain API access credentials from https://developer.twitter.com/.
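Hard-coding keys in a script makes them easy to leak. As one hedged alternative to the literal placeholders that follow, the sketch below reads the same four values from environment variables. The helper name `read_twitter_credentials()` and the `TWITTER_*` variable names are assumptions made for illustration; they are not part of twitterAnalysis.

```r
# Hypothetical helper, not part of twitterAnalysis: reads the four
# credentials from environment variables instead of literals in a script.
read_twitter_credentials <- function() {
  # Assumed variable names; pick whatever naming fits your setup.
  vars <- c(
    consumer_key    = "TWITTER_CONSUMER_KEY",
    consumer_secret = "TWITTER_CONSUMER_SECRET",
    access_token    = "TWITTER_ACCESS_TOKEN",
    token_secret    = "TWITTER_TOKEN_SECRET"
  )
  values <- Sys.getenv(vars, unset = NA)

  # Fail early and name every variable that is not set.
  missing <- vars[is.na(values)]
  if (length(missing) > 0) {
    stop("Missing environment variables: ", paste(missing, collapse = ", "))
  }

  # Return a list keyed by the names used in the rest of this example.
  as.list(stats::setNames(values, names(vars)))
}
```

The returned list could then be unpacked into the `consumer_key`, `consumer_secret`, `access_token`, and `token_secret` variables used in the rest of this example.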

consumer_key <- 'xxx'

consumer_secret <- 'xxx'

access_token <- 'xxx'

token_secret <- 'xxx'

Quick use example

The package's functions are meant to be used in tandem: each step below takes the output of an earlier one.
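As a rough sketch of what "in tandem" means here, the calls from Steps 2 to 5 below can be wrapped into a single function. The wrapper name `analyse_user()` and the shape of its return value are illustrative assumptions; only the inner function calls and their arguments come from this walkthrough. Running it requires the package to be loaded and the `api_user` object from Step 1.

```r
# Sketch only: chains the calls from Steps 2 to 5 in the order the
# walkthrough uses them. The wrapper itself is hypothetical.
analyse_user <- function(screen_name, n_tweets, api_user) {
  tweets   <- load_twitter_by_user(screen_name, n_tweets, api_user)  # Step 2
  cleaned  <- generalPreprocessing(tweets)                           # Step 3
  labelled <- sentiment_labeler(cleaned, 'text')                     # Step 4

  # Step 5: prepare word counts and draw the word cloud.
  plot_ready  <- clean_tweets(labelled)
  word_counts <- count_words(plot_ready)
  create_wordcloud(word_counts)

  # Optional step: proportion of each sentiment label.
  list(
    tweets      = labelled,
    word_counts = word_counts,
    proportions = count_tweets(labelled)
  )
}
```

A call such as `analyse_user('elonmusk', 100, api_user)` would then correspond to the step-by-step example that follows.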

Step 1: Set up Twitter API credentials

api_user <- user_info(consumer_key, consumer_secret, access_token, token_secret)

Step 2: Get the 100 most recent tweets of a user (for example, @elonmusk)

elon_df <- load_twitter_by_user('elonmusk', 100, api_user)

#> [1] "Using direct authentication"

head(elon_df)

#> screenName id created favoriteCount retweetCount

#> 1 elonmusk 1621886041245237253 2023-02-04 14:59:00 2242 84

#> 2 elonmusk 1621881994375299073 2023-02-04 14:42:55 3146 142

#> 3 elonmusk 1621881617978429440 2023-02-04 14:41:25 1444 87

#> 4 elonmusk 1621793704175362050 2023-02-04 08:52:05 13767 426

#> 5 elonmusk 1621719831249567744 2023-02-04 03:58:32 6621 301

#> 6 elonmusk 1621719712429129728 2023-02-04 03:58:04 23223 653

#> text

#> 1 @cb_doge 🤣

#> 2 @d4t4wr4ngl3r 🤣🤣

#> 3 @BillyM2k @cb_doge 🤣 it’s a fair cop

#> 4 @TheJackForge 🤣

#> 5 @tobi !

#> 6 @TrungTPhan 🤣

Step 3: Perform pre-processing
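What generalPreprocessing() does internally is not described here. Judging only from the text_clean column in the output below, leading @mentions appear to be removed. A base R sketch of that kind of cleaning, using a hypothetical helper `strip_mentions()` that is not the package's implementation, might look like this:

```r
# Hypothetical helper, not part of twitterAnalysis: removes @mentions
# and trims the whitespace they leave at either end of the text.
strip_mentions <- function(text) {
  no_mentions <- gsub("@[[:alnum:]_]+", "", text)
  trimws(no_mentions)
}

cleaned <- strip_mentions(c("@tobi !", "@BillyM2k @cb_doge it's a fair cop"))
print(cleaned)
```

Here the two inputs become "!" and "it's a fair cop". A mention in the middle of a sentence would leave a double space behind, which this sketch does not collapse.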

elon_clean_df <- generalPreprocessing(elon_df)

head(elon_clean_df)

#> screenName id created favoriteCount retweetCount

#> 1 elonmusk 1621886041245237253 2023-02-04 14:59:00 2242 84

#> 2 elonmusk 1621881994375299073 2023-02-04 14:42:55 3146 142

#> 3 elonmusk 1621881617978429440 2023-02-04 14:41:25 1444 87

#> 4 elonmusk 1621793704175362050 2023-02-04 08:52:05 13767 426

#> 5 elonmusk 1621719831249567744 2023-02-04 03:58:32 6621 301

#> 6 elonmusk 1621719712429129728 2023-02-04 03:58:04 23223 653

#> text text_clean

#> 1 @cb_doge 🤣 🤣

#> 2 @d4t4wr4ngl3r 🤣🤣 🤣🤣

#> 3 @BillyM2k @cb_doge 🤣 it’s a fair cop 🤣 it’s a fair cop

#> 4 @TheJackForge 🤣 🤣

#> 5 @tobi ! !

#> 6 @TrungTPhan 🤣 🤣

Step 4: Perform Sentiment Analysis

elon_sentiment_df <- sentiment_labeler(elon_clean_df, 'text')

#> Warning: `spread_()` was deprecated in tidyr 1.2.0.

#> ℹ Please use `spread()` instead.

#> ℹ The deprecated feature was likely used in the syuzhet package.

#> Please report the issue to the authors.

head(elon_sentiment_df)

#> screenName id created favoriteCount retweetCount

#> 1 elonmusk 1621886041245237253 2023-02-04 14:59:00 2242 84

#> 2 elonmusk 1621881994375299073 2023-02-04 14:42:55 3146 142

#> 3 elonmusk 1621881617978429440 2023-02-04 14:41:25 1444 87

#> 4 elonmusk 1621793704175362050 2023-02-04 08:52:05 13767 426

#> 5 elonmusk 1621719831249567744 2023-02-04 03:58:32 6621 301

#> 6 elonmusk 1621719712429129728 2023-02-04 03:58:04 23223 653

#> text text_clean sentiment

#> 1 @cb_doge 🤣 🤣 neutral

#> 2 @d4t4wr4ngl3r 🤣🤣 🤣🤣 neutral

#> 3 @BillyM2k @cb_doge 🤣 it’s a fair cop 🤣 it’s a fair cop positive

#> 4 @TheJackForge 🤣 🤣 neutral

#> 5 @tobi ! ! neutral

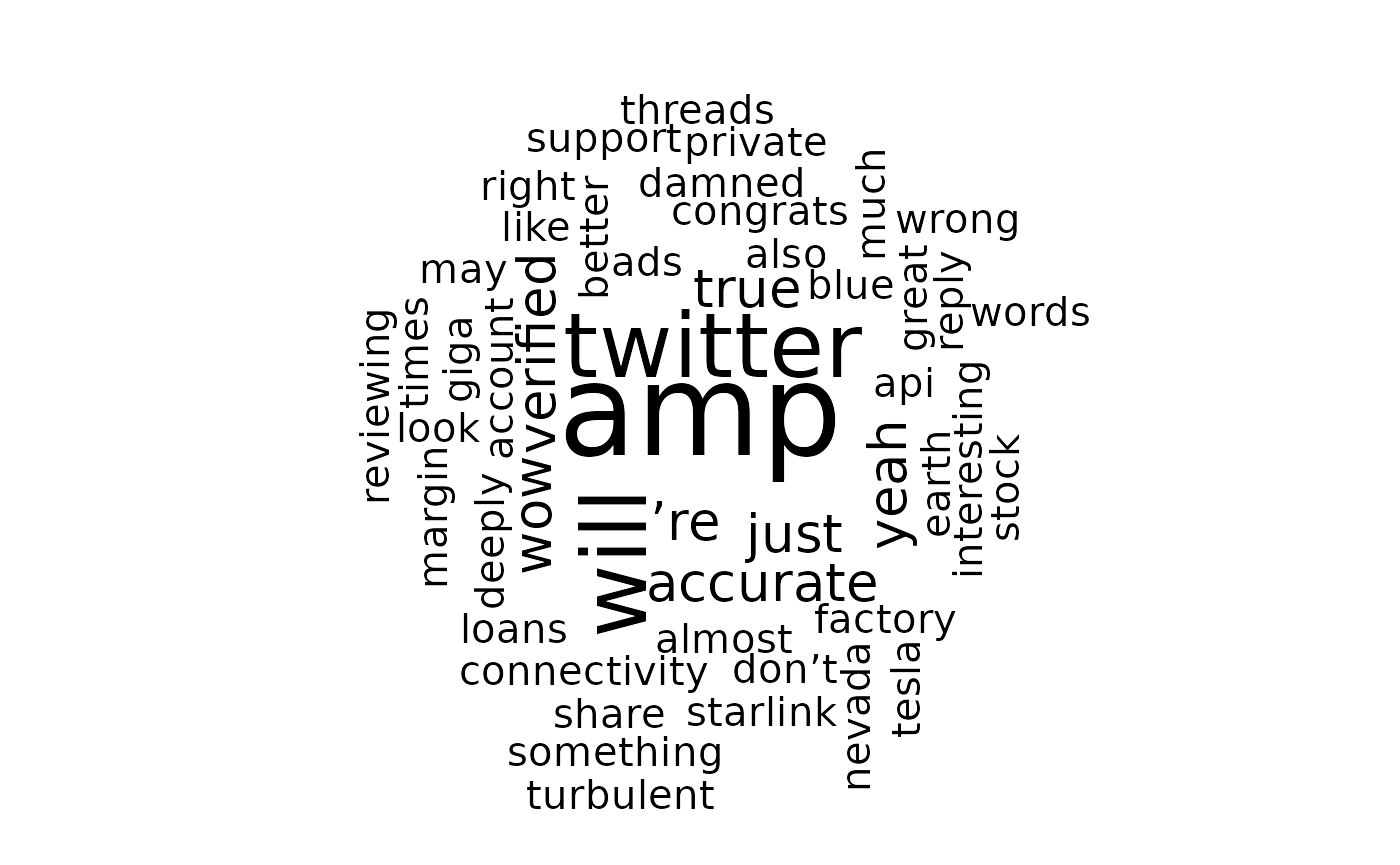

#> 6 @TrungTPhan 🤣 🤣 neutralStep 5: Generate a WordCloud

elon_plt_clean_df <- clean_tweets(elon_sentiment_df)

elon_count_df <- count_words(elon_plt_clean_df)

plt <- create_wordcloud(elon_count_df)

Optional: We can also determine the proportion of neutral, positive, and negative tweets with count_tweets().

count_tweets(elon_sentiment_df)

#> $negative

#> [1] 0.1041667

#>

#> $neutral

#> [1] 0.6458333

#>

#> $positive

#> [1] 0.25
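
For readers curious how such proportions can be derived, here is a minimal base R sketch. It is not the source of count_tweets(); the helper name `label_proportions()` and the toy labels are made up for illustration, and the sketch assumes a character vector of sentiment labels like the sentiment column shown in Step 4.

```r
# Hypothetical helper, not part of twitterAnalysis: share of each
# sentiment label in a character vector, returned as a named list.
label_proportions <- function(labels,
                              classes = c("negative", "neutral", "positive")) {
  # mean() of a logical vector is the fraction of TRUE values.
  props <- sapply(classes, function(cls) mean(labels == cls))
  as.list(props)
}

# Toy input, not real tweet data.
toy_labels <- c("neutral", "positive", "neutral", "negative")
result <- label_proportions(toy_labels)
print(result)
```

With these four toy labels, the negative, neutral, and positive shares are 0.25, 0.5, and 0.25. Fixing the set of classes up front means a label that never occurs still shows up with a proportion of 0.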