Lecture 7: Introduction to self-attention and transformers#

UBC Master of Data Science program, 2023-24

Instructor: Varada Kolhatkar

Imports and LO#

Imports#

import IPython

from IPython.display import HTML, display

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import torch

import torch.nn as nn

import torch.optim as optim

pd.set_option("display.max_colwidth", 0)

Intel MKL WARNING: Support of Intel(R) Streaming SIMD Extensions 4.2 (Intel(R) SSE4.2) enabled only processors has been deprecated. Intel oneAPI Math Kernel Library 2025.0 will require Intel(R) Advanced Vector Extensions (Intel(R) AVX) instructions.

Intel MKL WARNING: Support of Intel(R) Streaming SIMD Extensions 4.2 (Intel(R) SSE4.2) enabled only processors has been deprecated. Intel oneAPI Math Kernel Library 2025.0 will require Intel(R) Advanced Vector Extensions (Intel(R) AVX) instructions.

Learning outcomes#

From this lecture you will be able to

Broadly explain the problem of vanishing gradients.

Broadly explain the limitations of RNNs.

Explain the idea of self-attention.

Describe the three core operations in self-attention.

Explain the query, key, and value roles in self-attention.

Explain the role of linear projections for query, key, and value in self-attention.

Explain transformer blocks.

Explain the advantages of using transformers over LSTMs.

Broadly explain the idea of multihead attention.

Broadly explain the idea of positional embeddings.

Attributions#

This material is heavily based on Jurafsky and Martin, Chapter 10.

❓❓ Questions for you#

Suppose you are training a vanilla RNN with one hidden layer.

input representation is of size 200

output layer is of size 200

the hidden size is 100

Exercise 7.1: Select all of the following statements which are True (iClicker)#

iClicker join link: https://join.iclicker.com/ZTLY

(A) The shape of matrix \(U\) between hidden layers in consecutive time steps is going to be \(200 \times 200\).

(B) The output of the hidden layer is going to be a \(100\) dimensional vector.

(C) In bidirectional RNNs, if we want to combine the outputs of two RNNs with element-wise addition, the hidden sizes of the two RNNs have to be the same.

(D) Word2vec skipgram model is likely to suffer from the problem of vanishing gradients.

(E) In the forward pass, in each time step in RNNs, you calculate the output of the hidden layer by multiplying the input \(x\) by the weight matrix \(W\) or \(W_{xh}\) and applying non-linearity.

Exercise 7.1: V’s Solutions!

B, C

1. Motivation#

What kind of neural network models are at the core of all state-of-the-art Generative AI models (e.g., BERT, GPT3, GPT4, Gemini, DALL-E, Llama, Github Copilot)?

Source: GPT-4 Technical Report

Source: BERT paper

Recall the properties we want when we model sequences.

[ ] Order matters

[ ] Variable sequence lengths

[ ] Capture long distance dependencies

What are some reasonable predictions for the missing words in the following sentences?

The students in the exam where the fire alarm is ringing __ really stressed.

The flies munching on the banana that is lying under the tree which is in full bloom __ really happy.

Markov models are able to represent time and handle variable sequence lengths. But they are unable to represent long-distance dependencies in text.

Are RNNs able to capture long-distance dependencies in text?

1.2 Problems with RNNs#

Conceptually, RNNs are supposed to capture long-distance dependencies in text. But in practice, you’ll hardly see people using vanilla RNNs because they are quite hard to train for tasks that require access to distant information. There are three main problems:

Hard to remember relevant information

Hard to optimize

Hard to parallelize

Problem 1: Hard to remember relevant information

In RNNs, the hidden layer and the weights that determine the values in the hidden layer are asked to perform two tasks simultaneously:

Providing information useful for current decision

Updating and carrying forward information required for future decisions

Despite having access to the entire previous sequence, the information encoded in hidden states of RNNs is fairly local.

In the following example:

The students in the exam where the fire alarm is ringing are really stressed.

Assigning high probability to is following alarm is straightforward since it provides a local context for singular agreement.

However, assigning a high probability to are following ringing is quite difficult because not only the plural students is distant, but also the intervening context involves singular constituents.

Ideally, we want the network to retain the distant information about the plural students until it’s needed while still processing the intermediate parts of the sequence correctly.

Problem 2: Hard to optimize

Another difficulty with training RNNs arises from the need to backpropagate the error signal back through time.

Recall that we learn RNNs with

Forward pass

Backward pass (backprop through time)

Computing new states and output in RNNs

Recall: Backpropagation through time

When we backprop with feedforward neural networks

Take the gradient (derivative) of the loss with respect to the parameters.

Change parameters to minimize the loss.

In RNNs we use a generalized version of backprop called Backpropogation Through Time (BPTT)

Calculating gradient at each output depends upon the current time step as well as the previous time steps.

So in the backward pass of RNNs, we have to multiply many derivatives together, which very often results in

vanishing gradients (gradients becoming very small and eventually driven to zero) in case of long sequences

If we have a vanishing gradient, we might not be able to update our weights reliably.

So we are not able to capture long-term dependencies, which kind of defeats the whole purpose of using RNNs.

To address these issues more complex network architectures have been designed with the goal of maintaining relevant context over time by enabling the network to learn to forget the information that is no longer needed and to remember information required for decisions still to come.

Most commonly used models are

The Long short-term memory network (LSTM) (See this appendix on LSTMs.)

Gated Recurrent Units (GRU)

That said, even with these models, for long sequences, there is still a loss of relevant information and difficulties in training.

Also, inherently sequential nature of RNNs/LSTMs make them hard to parallelize. So they are slow to train.

Problem 3: Hard to parallelize

Due to their inherently sequential nature

1.3 Transformers: the intuition#

Transformers provide an approach to sequence processing but they eliminate recurrent connections in RNNs and LSTMs.

The idea is to build up richer and richer contextual representations of the meaning of input words or tokens across a series of transformer layers.

GPT-3 large, for instance, has 96 transformer layers.

They are easy to parallelize and much better at capturing long distance dependencies.

They are at the core of all state-of-the-art generative AI models such as BERT, GPT2, GPT3, DALL-E 2, DALL-E 3, SORA, Meta’s Llama, Google Bard, and GitHub Copilot.

There are two main innovations which make these models work so well.

Self-attention

Positional embeddings/encodings

2. Self-attention#

2.1 Intuition#

Biological motivation for self-attention

Count how many times the players wearing the white pass the basketball?

### An example of a state-of-the-art language model

url = "https://www.youtube.com/embed/vJG698U2Mvo"

IPython.display.IFrame(url, width=500, height=500)

When we process information, we often selectively focus on specific parts of the input, giving more attention to relevant information and less attention to irrelevant information. This is the core idea of attention.

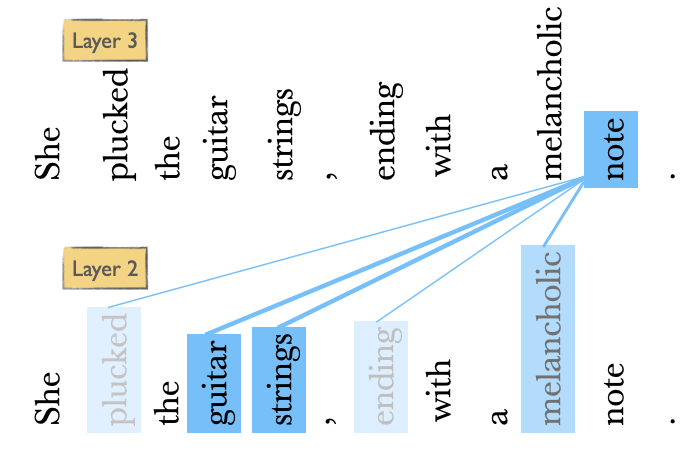

Consider the examples below:

Example 1: She left a brief note on the kitchen table, reminding him to pick up groceries.

Example 2: The diplomat’s speech struck a positive note in the peace negotiations.

Example 3: She plucked the guitar strings, ending with a melancholic note.

The word note in these examples serves quite distinct meanings, each tied to different contexts.

To capture varying word meanings across different contexts, we need a mechanism that considers the wider context to compute each word’s contextual representation.

Self-attention is just that mechanism!

It allows us to look broadly into the context and tells us how to integrate the representations of context words to build representation of a word.

A question for you: why not use traditional word embeddings we have seen so far (like word2vec or GloVe)?

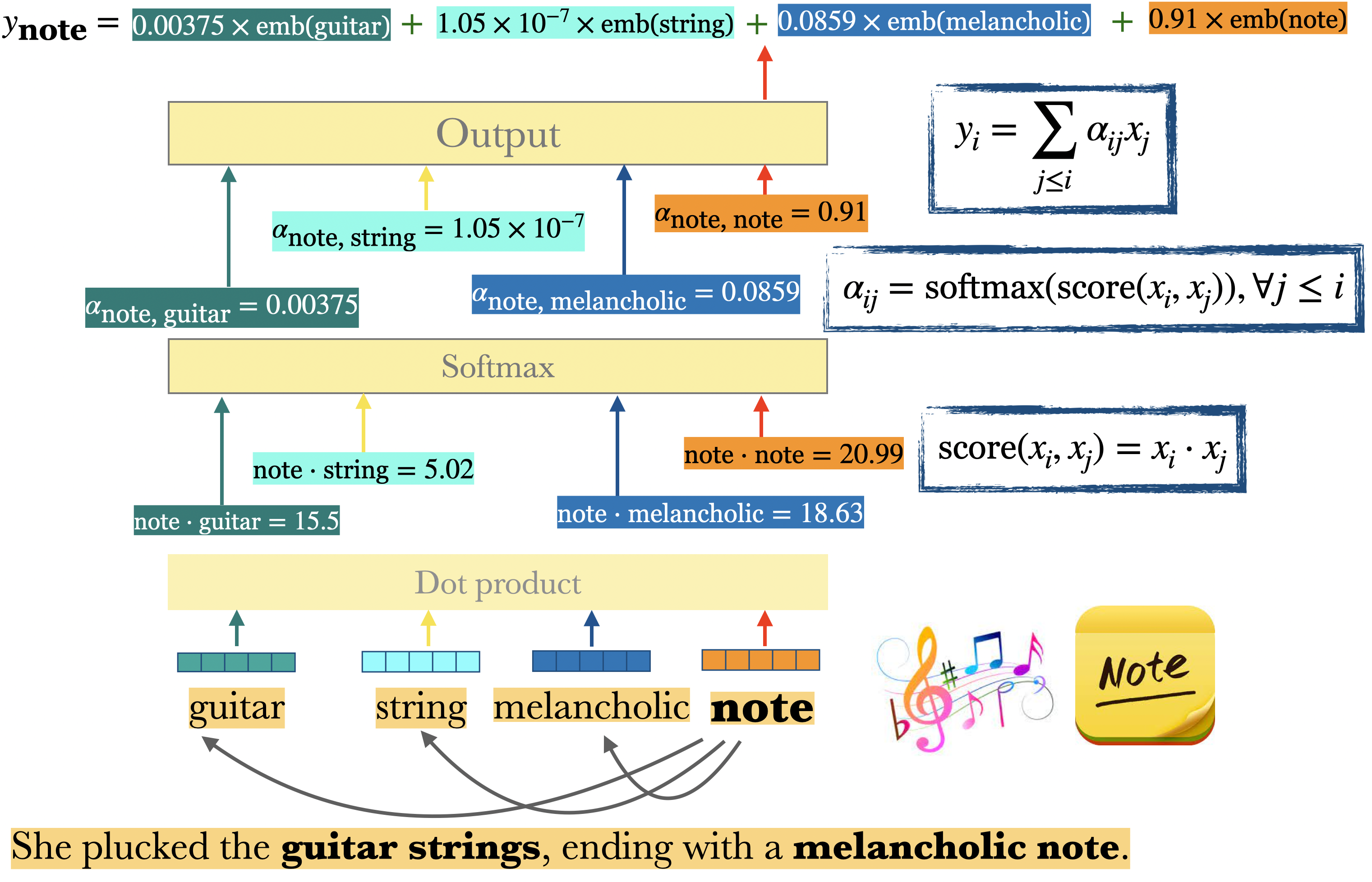

Let’s visualize self-attention, we’ll focus on how the word note is interpreted within its sequence.

She plucked the guitar strings , ending with a melancholic note .

Which words in the context are most relevant to note? Assign a qualitative weight (high or low) to each context word below.

note attending to She: low weight

note attending to plucked:

note attending to ending:

note attending to guitar:

note attending to strings:

note attending to melancholic:

Self-attention mechanism allows a neural network model to ‘attend’ to different parts of the input data with varying degrees of focus.

We compare a token of our interest to a collection of other tokens in the context that reveal their relevance in the current context. (The relevance is denoted with the colour intensity in the diagram above.)

For each token in the sequence, we assign a weight based on how relevant it is to the token under consideration.

Calculate the output for the current token based on these weights.

It allows a network to directly extract and use information from arbitrarily large contexts without the need to pass it through intermediate recurrent connections as in RNNs.

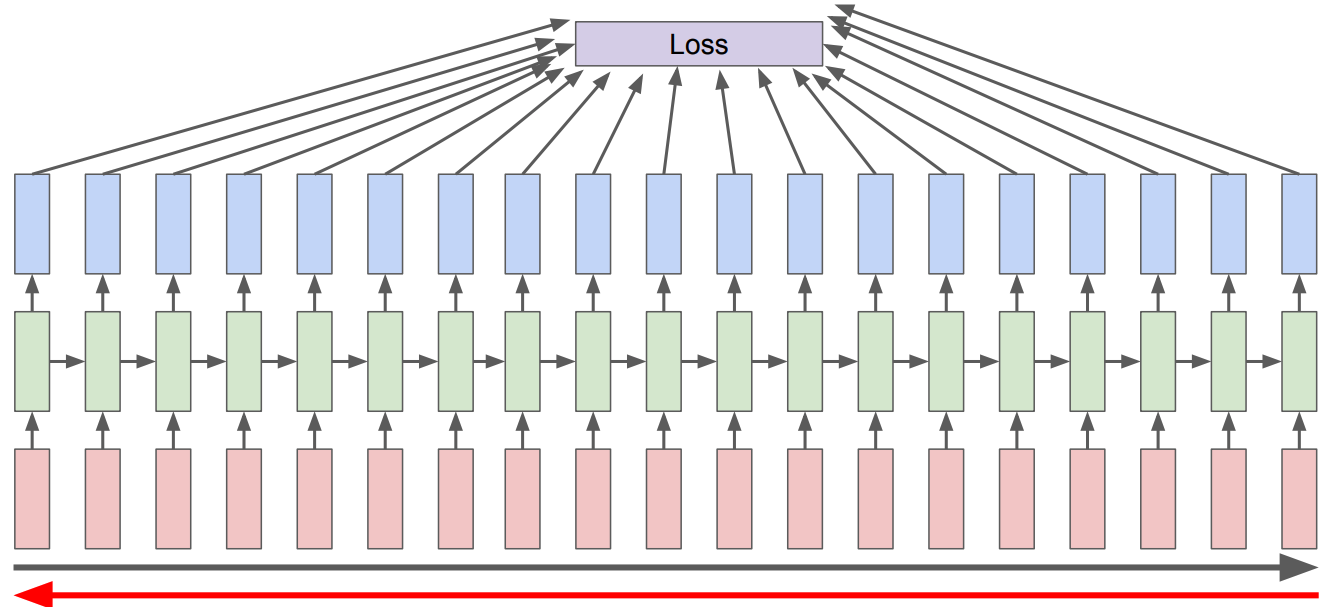

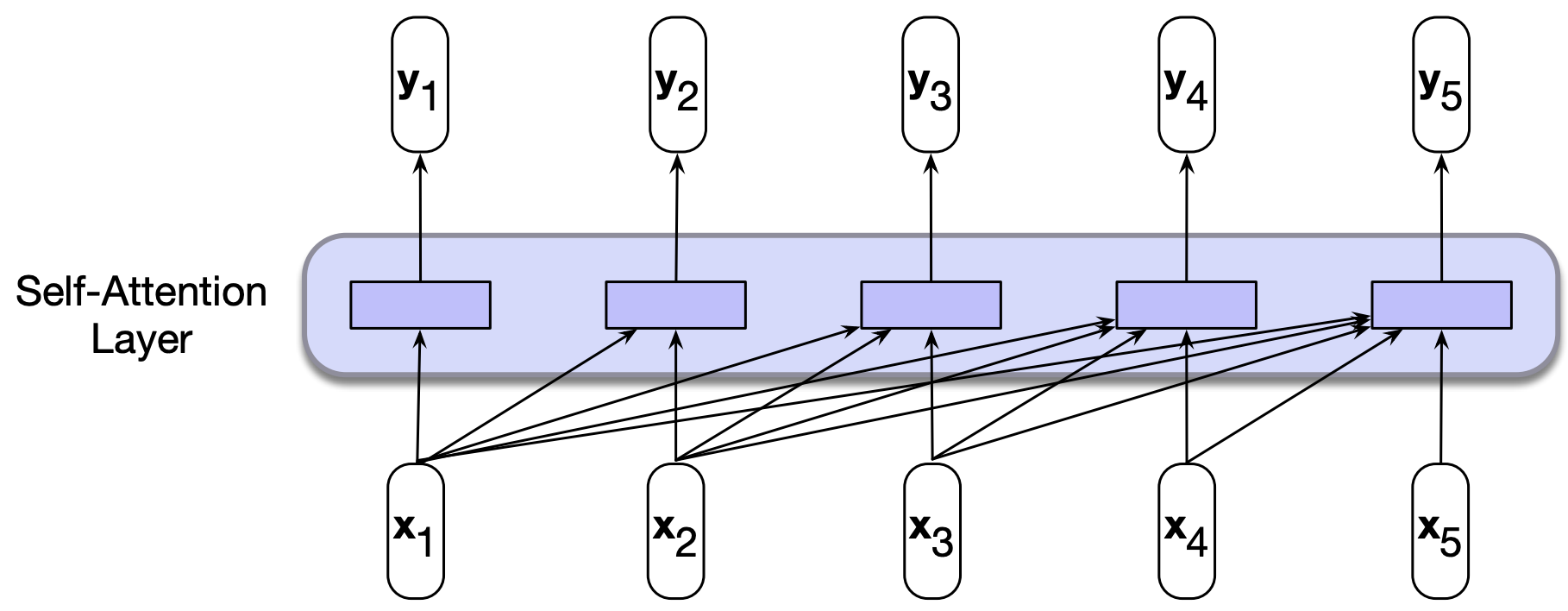

Below is a single backward looking self-attention layer which maps sequences of input vectors \((x_1, \dots, x_n)\) to sequences of output vectors \((y_1, \dots, y_n)\) of the same length.

When processing an item at time \(t\), the model has access to all of the inputs up to and including the one under consideration.

It does not have access to the input beyond the current one.

Note that unlike RNNs or LSTMs, each computation can be done independently; it does not depend upon the previous computation which allows us to easily parallelize forward pass and the training of such models.

2.2 The key operations in self-attention#

To determine the weights of various tokens in the context during self-attention’s output calculation for a specific token, we follow three key operations

Calculate scores with dot products between tokens

Apply softmax to derive a probability distribution

Compute a weighted sum based on the scores for all observed inputs

Now, let’s break down these operations step by step.

Example: Calculating the output \(y\) for the token note in the given context

To generalize, in order to calculate the output \(y_i\)

We score token \(x_i\) with all previous tokens \(x_j\) by taking the dot product between them. $\(\text{score}(x_i, x_j) = x_i \cdot x_j\)$

We apply \(\text{softmax}\) on these scores to get probability distribution over these scores. $\(\alpha_{ij} = \text{softmax}(\text{score}(x_i \cdot x_j)), \forall j \leq i\)$

The output is the weighted sum of the inputs seen so far, where the weights correspond to the \(\alpha\) values calculated above. $\(y_i = \sum_{j \leq i} \alpha_{ij}x_j\)$

The final output vector incorporates the information from all relevant parts of the sentence, weighted by their relevance to the target word “note”.

These three operations outline the core of an attention-based approach. These operations can be carried out independently for each input allowing easy parallelism. Now, let’s introduce additional bells and whistles to make it trainable.

Query, Key, and Value roles

Note that in the process of calculating outputs corresponding to each input, each input embedding plays three kinds of roles: Query, Key, and Value.

Let’s consider at an analogy to understand this better. Imagine you’re on a quest to answer a profound question: “What’s the purpose of life?”. To find an answer, you decide to visit a library.

In the context of self-attention:

Query: the current focus of attention when being compared to all previous inputs. This is something you are trying to understand more deeply.

Key: a preceding input being compared to the current focus of attention. These help determine which other words in the sequence are relevant for your query.

Value: used to compute the output for the current focus of attention. Once the relevant words are identified using keys, the actual content (embedding) from these words is used to construct the output

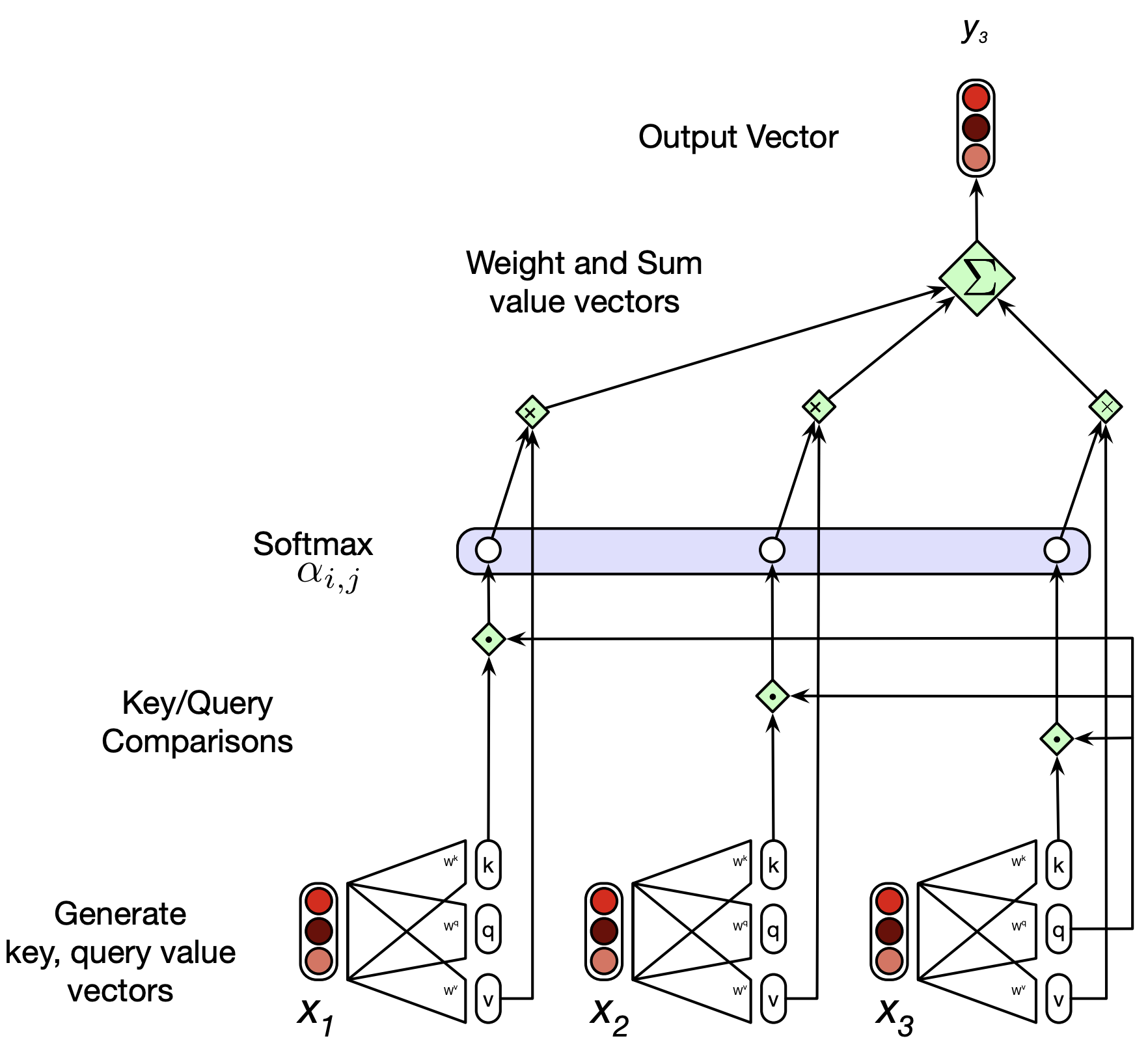

For these three roles transformer introduces three weight matrices: \(W^Q, W^K, W^V\). These weights will be used to project each input vector \(x_i\) into its role as a key, query, or value.

For now let’s assume that all these weight matrices have the same dimensionality and so the projected vectors in each case are going to be of the same size.

With these projections our equations become:

We score the \(x_i\) with all previous tokens \(x_j\) by taking the dot product between \(x_i\)’s query vector \(q_i\) and \(x_j\)’s key vector \(k_j\):

$\(\text{score}(x_i, x_j) = q_i \cdot k_j\)$The softmax calculation remains the same but the output calculation for \(y_i\) is now based on a weighted sum over the projected vectors \(v\): $\(y_i = \sum_{j \leq i} \alpha_{ij}v_j\)$

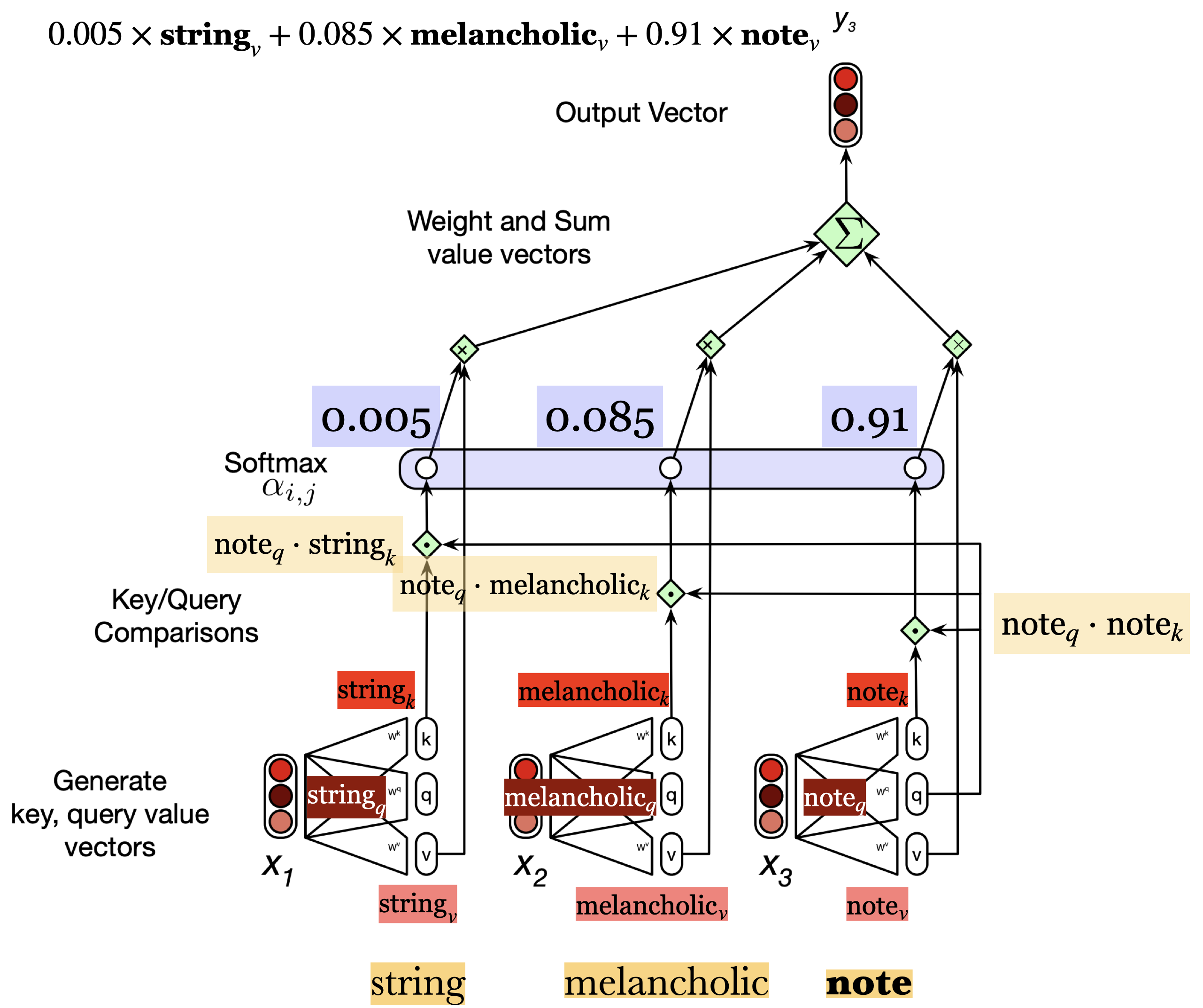

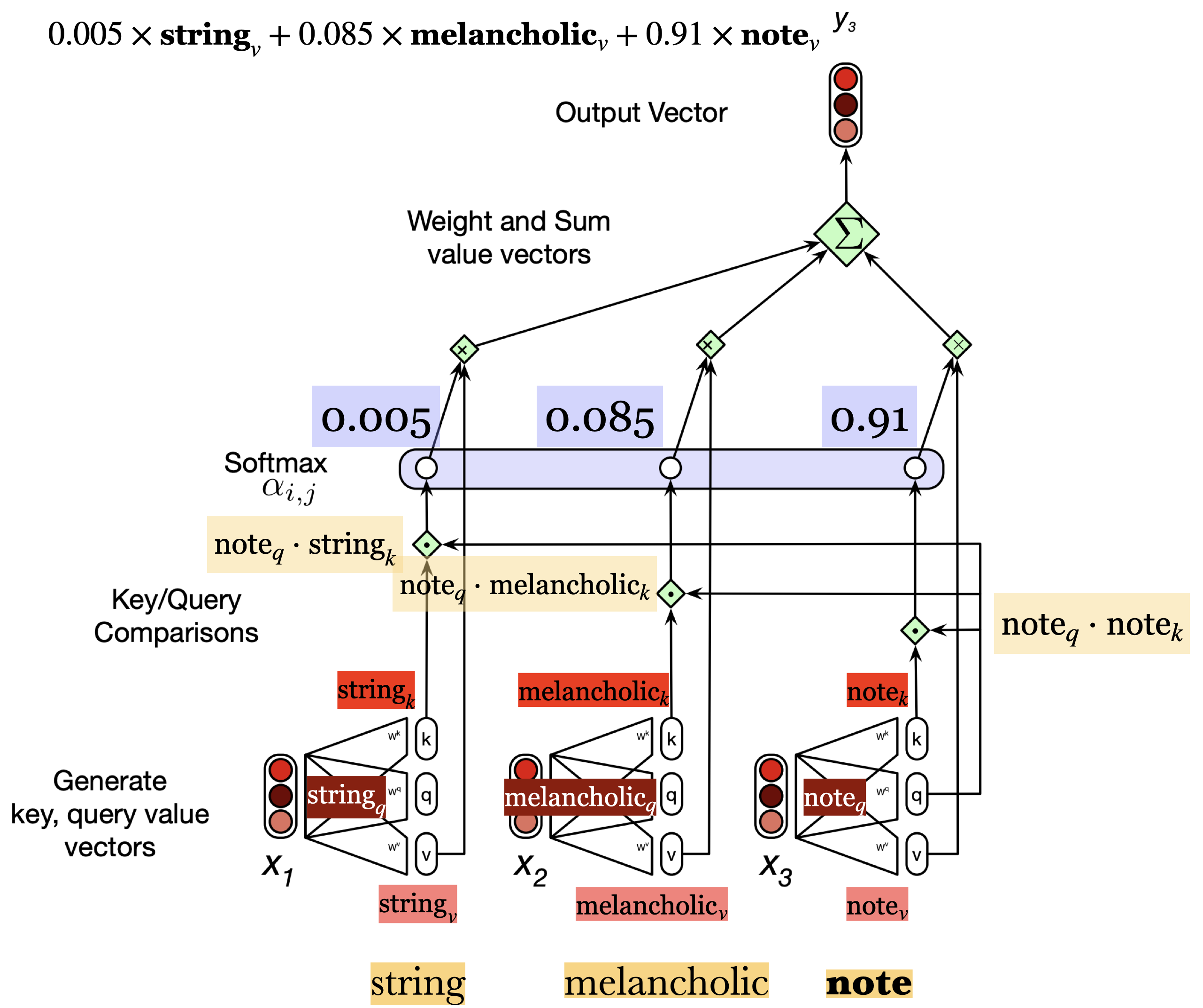

Let’s calculate the output of note in the following sequence with \(K, Q, V\) matrices.

string melancholic note

Suppose input embedding is of size 300.

Suppose the projection matrices \(W^k, W^q, W^v\) are of shape \(300 \times 100\).

So word\(_k\), word\(_q\), word\(_v\) provide 100-dimensional projections of each word corresponding to the key, query and value roles. For example, note\(_k\), note\(_q\), bite\(_v\) represent 100-dimensional projections of the word note corresponding to its key, query, and value roles, respectively.

The dot products will be calculated between the appropriate query and key projections. In this example, we will calculate the following dot products:

\(\text{note}_q \cdot \text{string}_k\)

\(\text{note}_q \cdot \text{melancholic}_k\)

\(\text{note}_q \cdot \text{note}_k\)

We apply softmax on these dot products. Suppose the softmax output in this toy example is

So we have weights associated with three input words: string (0.005), melancholic (0.085) and note (0.91)

We can calculate the output as the weighted sum of the inputs. Here we will use the value projections of the inputs: \(0.005 \times \text{string}_v + 0.085 \times \text{melancholic}_v + 0.91 \times \text{note}_v\)

Since we will be adding 100 dimensional vectors (size of our projections), the dimensionality of the output \(y_3\) is going to be 100.

Scaling the dot products

The result of a dot product can be arbitrarily large and exponentiating such values can lead to numerical issues and problems during training.

So the dot products are usually scaled before applying the softmax.

The most common scaling is where we divide the dot product by the square root of the dimensionality of the query and the key vectors. $\(\text{score}(x_i, x_j) = \frac{q_i \cdot k_j}{\sqrt{d_k}}\)$

This is how we calculate a single output of a single time step \(i\).

Would the output calculation at different time steps be dependent upon each other?

Efficient calculations with matrix multiplication

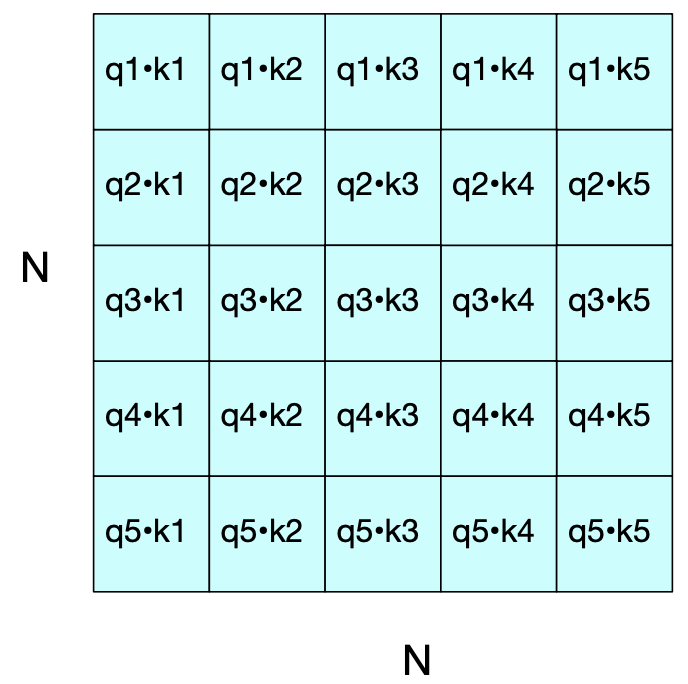

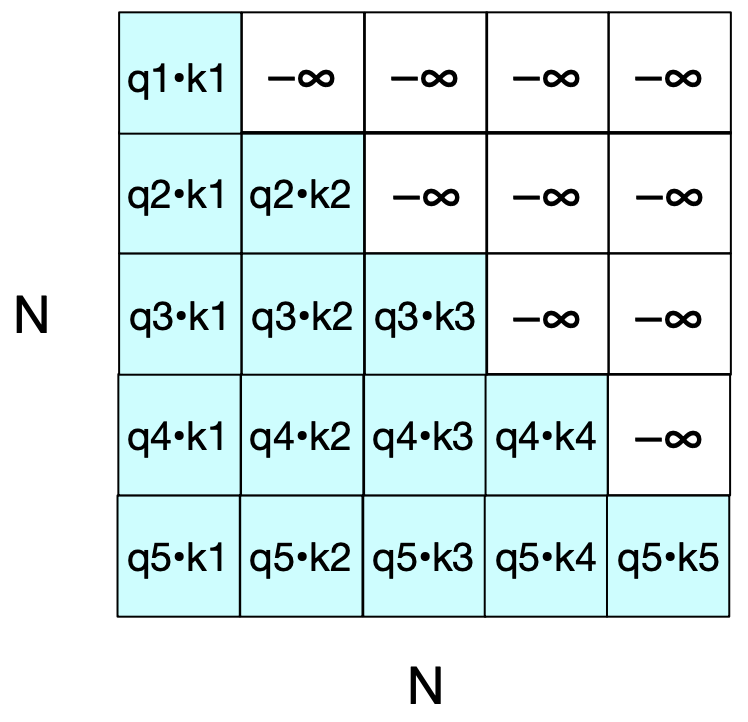

\(X_{N \times d} \rightarrow\) matrix of all tokens in a sequence of length \(N\) with each token represented with a \(d\) dimensional embedding. Each row of \(X\) is embedding representation of one token of the input. Then we can calculate \(Q, K, V\) as follows.

With these, we can now calculate all the query-key scores simultaneously as \(Q \times K\).

We can then apply softmax on all rows and multiply the resulting matrix by \(V\).

Finally, we get output sequence \(y_1, \dots, y_n\).

What’s the problem with the approach above?

This process goes a bit too far since the calculation of the comparisons in \(QK\) results in a score for each value to each key value, including those that follow the query.

Is this appropriate in the setting of language modeling?

Break#

❓❓ Questions for you#

Exercise 7.2: Select all of the following statements which are True (iClicker)#

(A) The main difference between the RNN layer and a self-attention layer is that in self-attention, we pass the information without intermediate recurrent connections.

(B) In self-attention, the output \(y_i\) of input \(x_i\) at time \(i\) is a scalar.

(C) Calculating attention weights is quadratic in the length of the input since we need to compute dot products between each pair of tokens in the input.

(D) Self-attention results in contextual embeddings.

Exercise 8.2: V’s Solutions!

A, C, D

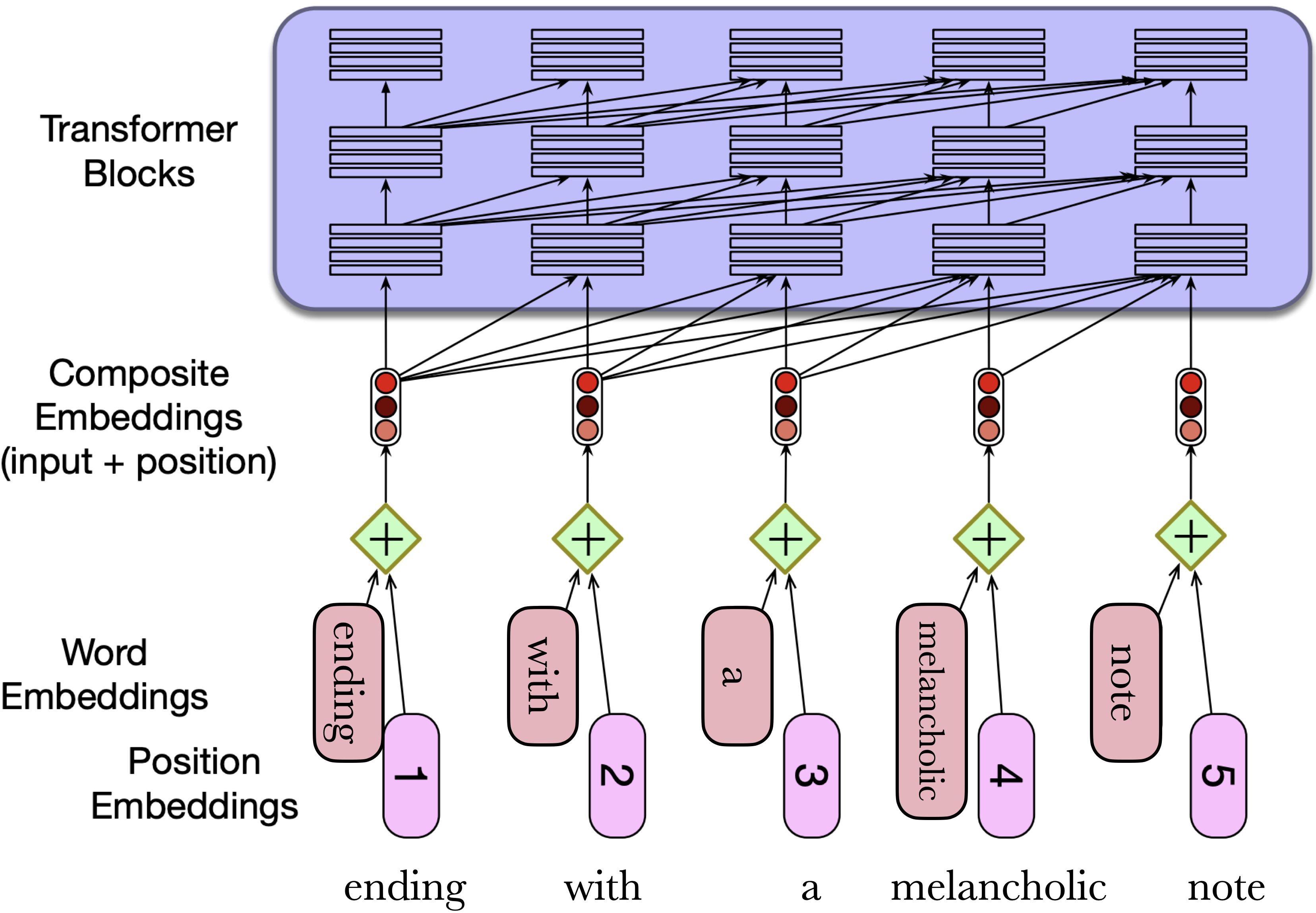

3. Positional embeddings#

Also called positional encodings.

Are we capturing word order when we calculate \(y_3\)? In other words if you scramble the order of the inputs, will you get exactly the same answer for \(y_3\)?

How can we capture word order and positional information?

A simple solution is positional embeddings!

To produce an input embedding that captures positional information,

We create positional embedding for each position (e.g., 1, 2, 3, …)

We add it to the corresponding input embedding

The resulting embedding has some information about the input along with its position in the text

Where do we get these positional embeddings? The simplest method is to start with randomly initialized embeddings corresponding to each possible input position and learn them along with other parameters during training.

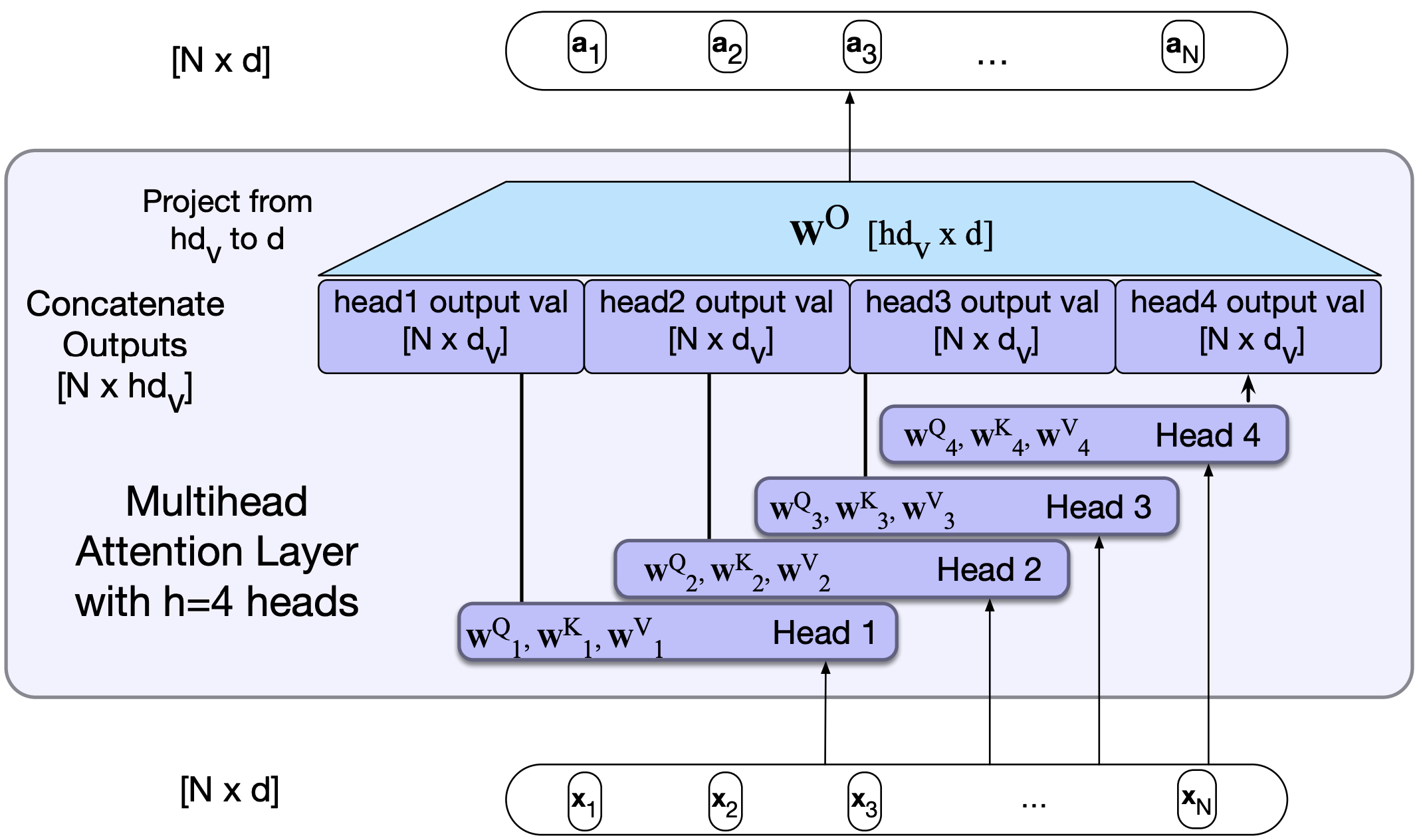

4. Multi-head attention#

Different words in a sentence can relate to each other in many different ways simultaneously.

Consider the sentence below.

The cat was scared because it didn’t recognize me in my mask.

Let’s look at all the dependencies in this sentence.

import spacy

from spacy import displacy

nlp = spacy.load("en_core_web_md")

doc = nlp("The cat was scared because it did n't recognize me in my mask .")

displacy.render(doc, style="dep")

So a single attention layer usually is not enough to capture all different kinds of parallel relations between inputs.

Transformers address this issue with multihead self-attention layers.

These self-attention layers are called heads.

They are at the same depth of the model, operate in parallel, each with a different set of parameters.

The idea is that with these different sets of parameters, each head can learn different aspects of the relationships that exist among inputs.

Visualization of multi-head attention

Similar to RNNs you can stack self-attention layers or multihead self-attention layers on the top of each other.

Let’s look at this visualization which shows where the attention of different attention heads is going in multihead attention.

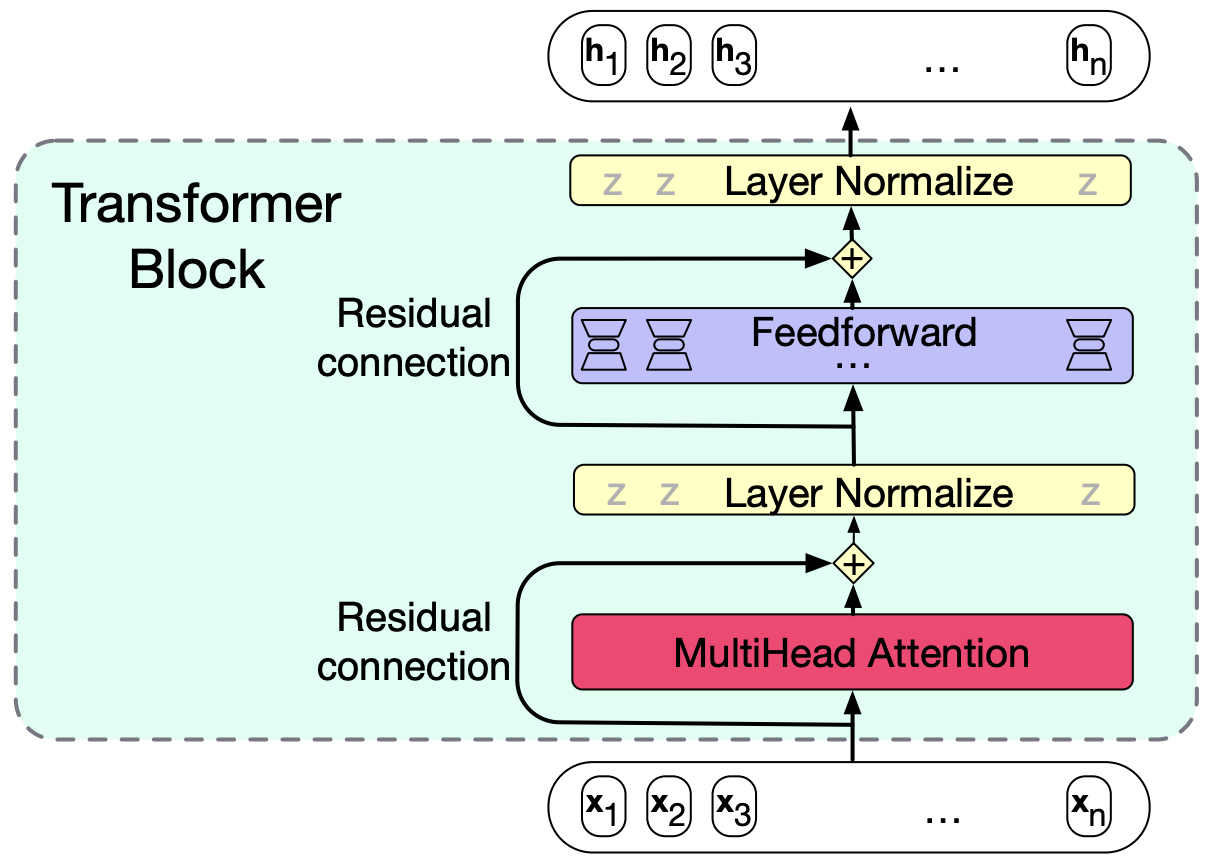

5. Transformer blocks#

In many advanced architectures, you will see transformer blocks which consists of

The multi-head self-attention layer

Feedforward layer

Residual connections

Layer Norm

Feedforward layer

\(N\) networks, one at each position.

Each network is a fully-connected network with one hidden layer, i.e., two weight matrices

The networks are independent for each position and so can be computed in parallel.

It’s common to make the the dimensionality of the hidden layer \(d_{ff}\) larger than the input dimensionality \(d_f\)

Residual connections

Connections that pass information from a lower layer to a higher layer without going through the intermediate layer.

Why? It has been shown that skipping a layer improves learning and gives higher level layers direct access to information from lower layers.

They are implemented simply by adding a layer’s input vector to its output vector before passing it forward.

Typically, residual connections are used with both attention and feedforward layers.

Layer normalization or layer norm

The summed vectors are normalized in this layer.

Layer norm applies something similar to

StandardScalerso that the mean is 0 and standard deviation is 1 in the vector.This is done to keep the values of a hidden layer in a range that facilitates gradient-based training.

For each poisition, the input is a \(d-\)dimensional vector and the output is a normalized \(d-\)dimensional vector.

Putting it all together:

Here is the function computed by a transformer.

The input and output dimensions of these layers are matched so that they can be stacked.

Each token \(x_i\) at the input to the block has dimensionality \(d\).

The input \(X\) and output \(H\) are both of shape \(N \times d\), where \(N\) is the sequence length.

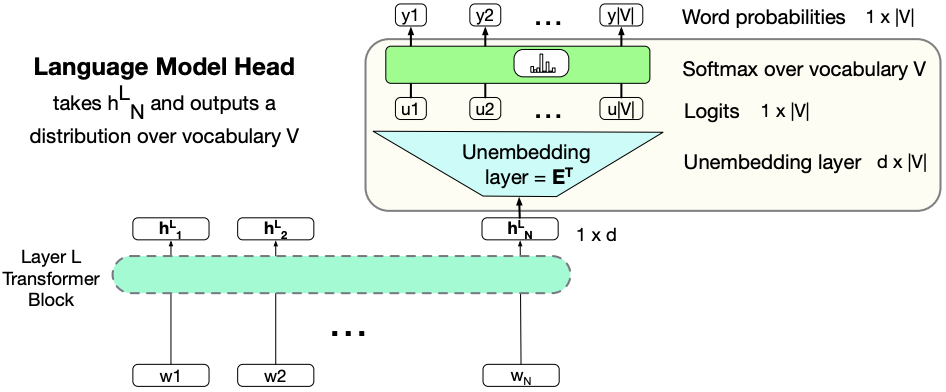

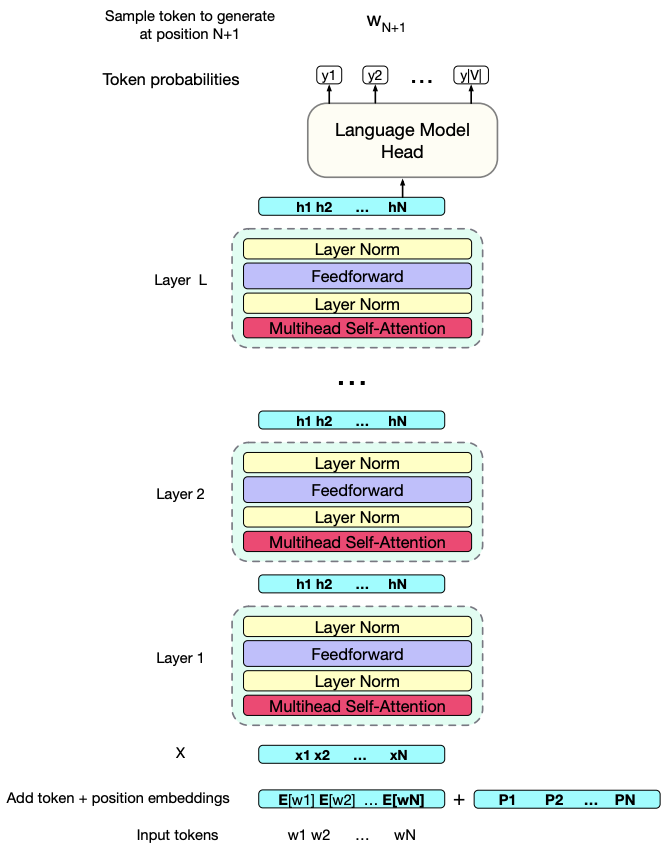

5.1 Language models with transformers#

Language models with single transformer block

The job of the language modeling head is to take the output \(h_N^{L}\), which represents the output token embedding at position \(N\) from the final block \(L\), and use it to predict the upcoming word at position \(N+1\)

The shape of \(h_N^{L}\) is \(1 \times d\).

We project it to the logit vector of shape \(1 \times V\), where \(V\) is the vocabulary size.

Recall the embedding layer at the beginning of the network. The input to our network is one-hot-encoding of tokens and the weight matrix between input and embedding layer is \(V \times d\)

Since this linear layer performs the reverse mapping from \(d\) dimensions to \(V\) dimensions, it is referred to as unembedding layer.

Language models with multiple transformer blocks

Transformers for large language models stack many of these blocks.

T5 language model or GPT-3 small language models stack 12 such blocks.

GPT-3 large stacks 96 blocks.

Final comments and summary#

Transformers are non-recurrent networks based on self-attention.

There are two main components of transformers:

A self-attention layer maps input sequences to output sequences of the same length using attention heads which model how the surrounding words are relevant for the processing of the current word.

Positional embeddings/encodings

A transformer block consists of a multi-head attention layer, followed by a feedforward layer with residual connections and layer normalizations. These blocks can be stacked together to create powerful networks.

Resources#

Attention-mechanisms and transformers are quite new. But there are many resources on transformers. I’m listing a few resources here.

3Blue1Brown has recently released some videos on transformers

Coming up: Some applications of transformers