Lecture 8: More transformers#

UBC Master of Data Science program, 2023-24

Instructor: Varada Kolhatkar

Imports, LO#

Imports#

import sys

from collections import defaultdict

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import torch

import torch.nn as nn

import torch.optim as optim

pd.set_option("display.max_colwidth", 0)

Intel MKL WARNING: Support of Intel(R) Streaming SIMD Extensions 4.2 (Intel(R) SSE4.2) enabled only processors has been deprecated. Intel oneAPI Math Kernel Library 2025.0 will require Intel(R) Advanced Vector Extensions (Intel(R) AVX) instructions.

Intel MKL WARNING: Support of Intel(R) Streaming SIMD Extensions 4.2 (Intel(R) SSE4.2) enabled only processors has been deprecated. Intel oneAPI Math Kernel Library 2025.0 will require Intel(R) Advanced Vector Extensions (Intel(R) AVX) instructions.

Learning outcomes#

From this lecture you will be able to

Broadly explain how transformers are used in a language model.

Broadly explain how transformers are used for autoregressive text generation.

Broadly explain how bi-directional attention works.

Broadly explain masking and masked language models.

Explain the difference between causal language model and bi-directional language model.

Use PyTorch’s

TransformerDecoderLayerlayer.Use basic functionalities provided in the 🤗 Transformers library.

Attributions#

This material is heavily based on Jurafsky and Martin, Chapter 10 and Chapter 11.

Recap and introduction#

❓❓ Questions for you#

Exercise 8.1: Discuss the following questions with your neighbour#

Explain how self-attention enables Transformers to model relationships between different parts of the input data.

How are query (\(Q\)), key (\(K\)), and value (\(V\)) matrices used to compute the output of a self-attention layer?

What architectural feature enables Transformers to process data in parallel, and how does this compare to the processing in RNNs?

Why are positional embeddings necessary, and what might happen if they were omitted?

What is multihead attention, and how does it benefit a Transformer model over using a single attention head?

Describe the typical components of a Transformer block.

Now you know some fundamentals of transformers. In this lesson we’ll focus on:

Three types of language models

Coding example of text generation and

TransformerDecoderLayerusing PyTorchUsing 🤗 Transformers library

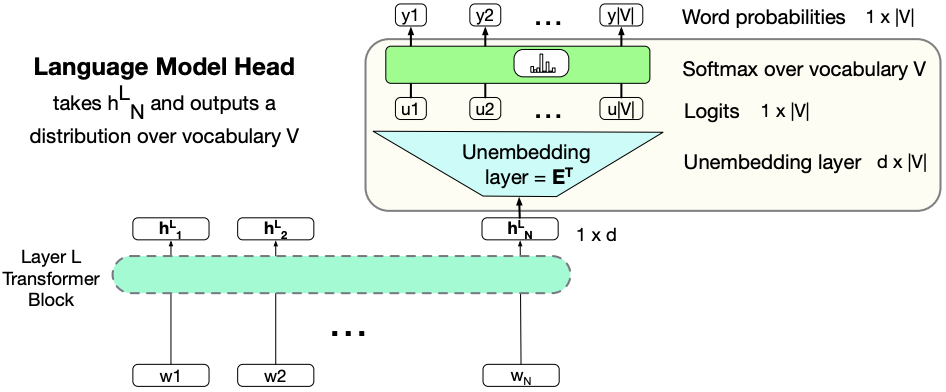

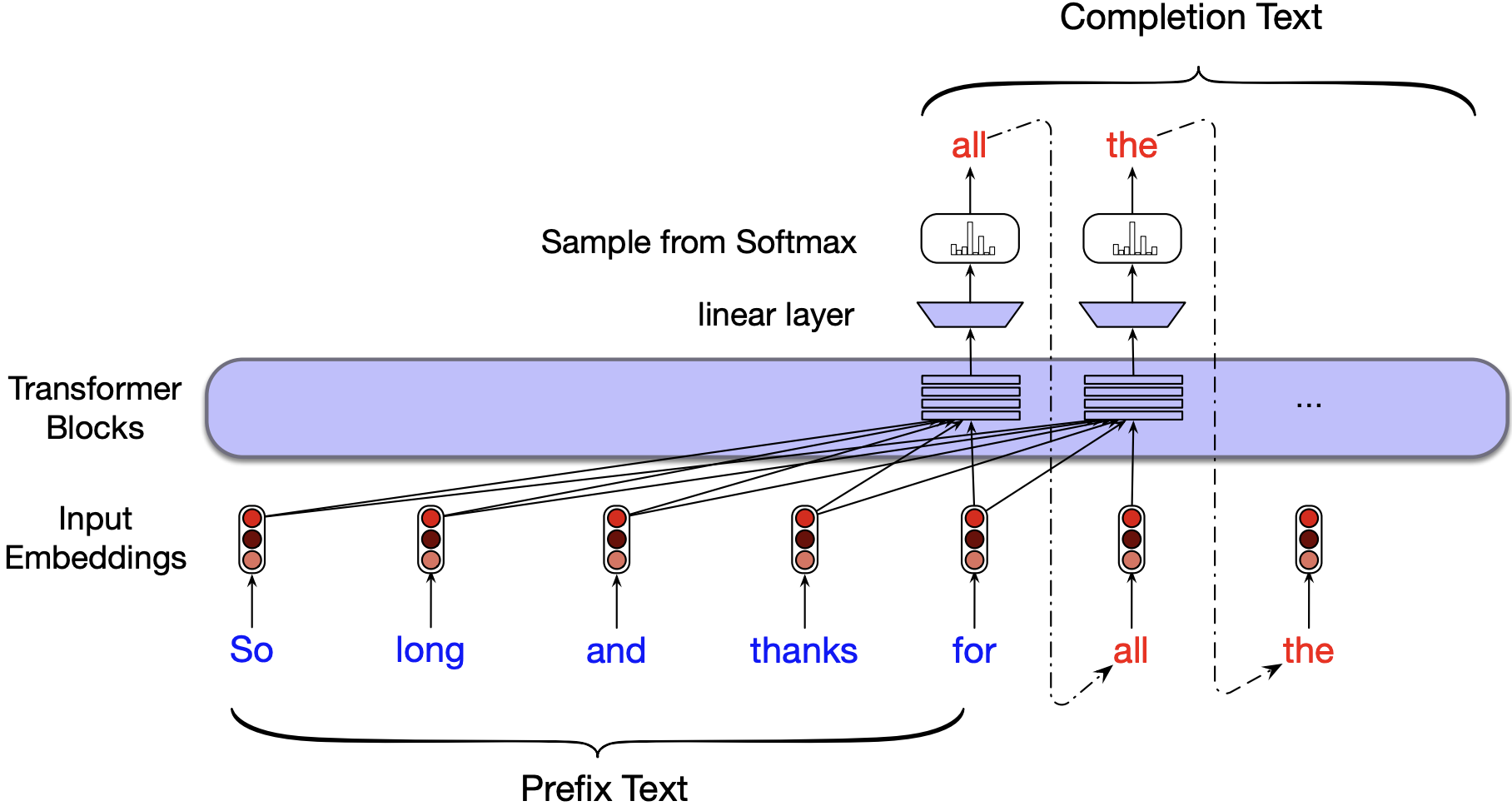

In the last lecture, we looked at this architecture for language modeling. This is a decoder-only language model.

Language models can be broadly categorized into three types:

Decoder-only models (e.g., GPT-3): These models focus on generating text based on prior input. They are typically used for tasks like text generation.

Encoder-only models (e.g., BERT, RoBERTa): These models are designed to analyze and understand input text, making them suitable for tasks such as sentiment analysis and question answering.

Encoder-decoder models (e.g., T5, BART): These models combine the functionalities of both encoder and decoder architectures to handle tasks that involve transforming an input into an output, such as translation or summarization.

Let’s try to understand the distinctions between these architectures. Later, we will look at a code example of the decoder-only architecture.

1. Decoder-only architecture#

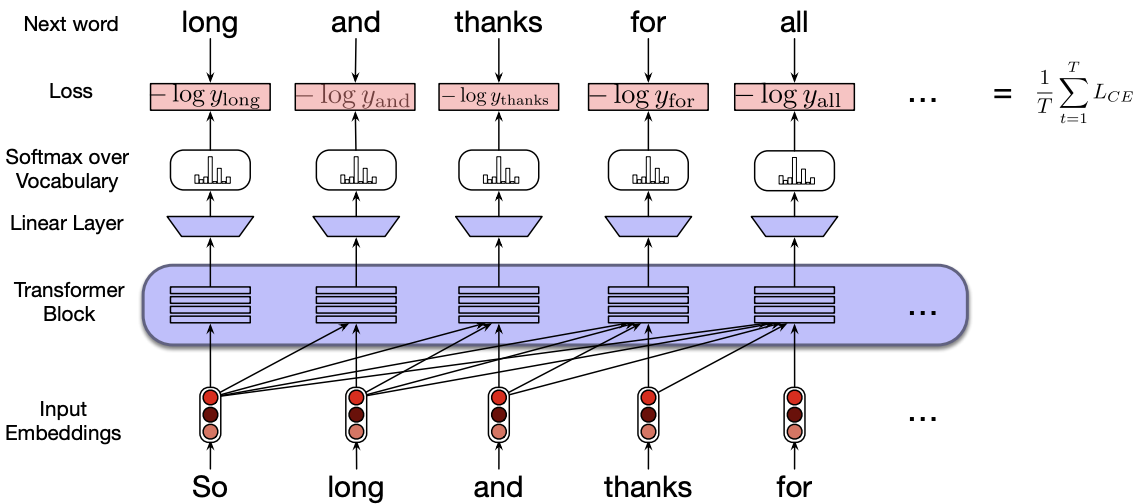

For training, we segment a large corpus of text into input/output sequence pairs with a fixed length. For example:

Input: So long and thanks for

Gold output: long and thanks for all

At each step, the final transformer layer uses all preceding words to predict a probability distribution over the entire vocabulary.

During training, we maximize the likelihood of the correct subsequent word using the cross-entropy loss for each position in the sequence.

The loss for an entire sequence is computed as the mean of the cross-entropy losses for all positions in that sequence.

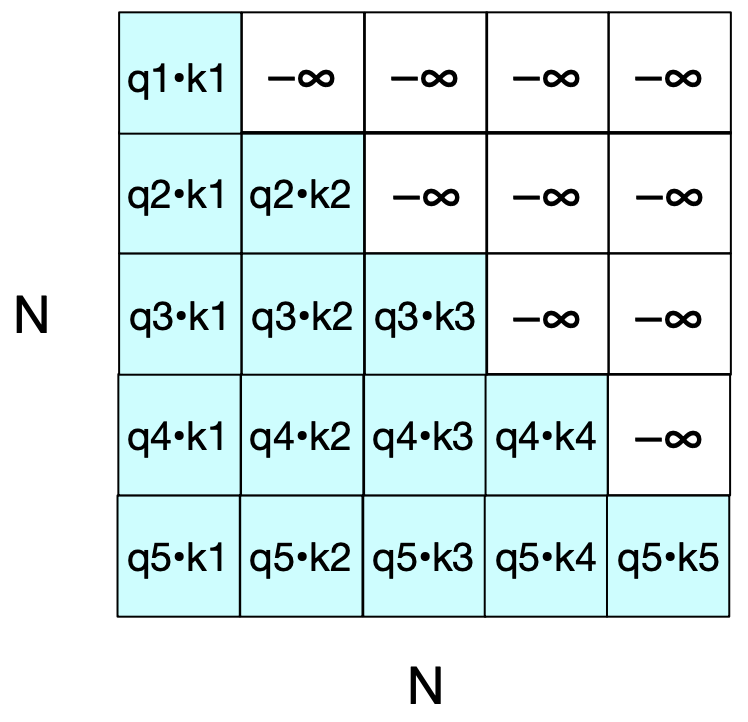

In decoder-only models, when we compute the self-attention mechanism’s dot products, we must prevent the model from ‘seeing’ future tokens.

To do this, we apply a causal mask. This mask works by setting the upper triangle of the dot product matrix, which represents future token comparisons, to a very large negative value (typically \(-\infty\)).

When passed through the softmax function, these large negative numbers effectively become zero, ensuring that future tokens do not influence the prediction of the current token.

This is how we maintain the ‘causal’ nature of the model, preserving the forward direction of text generation.

Autoregressive text generation

Once we have a trained model, we can generate new text autoregressively, similar to RNN-based models.

Incrementally generating words by repeatedly sampling the next word conditioned on our previous choices is called autoregressive generation or causal language model generation.

The sampling part in neural text generation process is similar to generation with Markov models. But overall, it considers long-distance dependencies and richer contextual information.

The models in the GPT family are autoregressive language models which are primarily designed for text generation.

2. Encoder-only architecture#

Models such as BERT and its variant RoBERTa are bidirectional transformer models. These have encoder-only architecture.

These models are primarily designed for a wide range of NLP tasks (e.g., text classification)

Remember the sentence transformers you used in DSCI 563 lab1 to get sentence embeddings? These sentence embeddings are based on BERT.

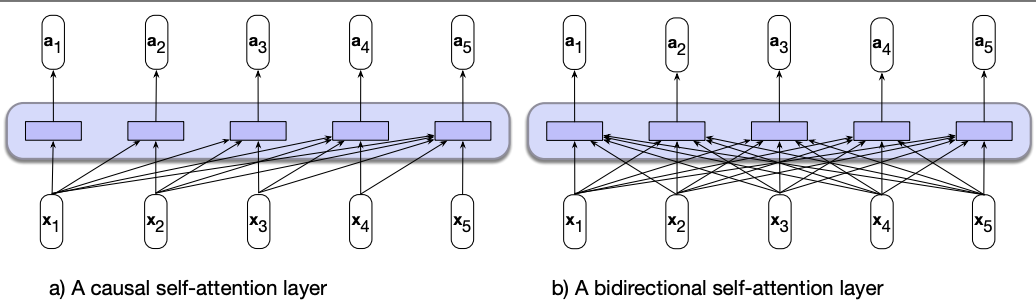

2.1 Bidirectional self-attention#

We have seen backward looking self-attention.

This is also referred to as causal (left-to-right) transformer model.

Each output is computed using only information seen earlier in the context.

This is great for autoregressive generation.

But in the context of sequence classification or sequence labeling they have an obvious shortcoming because they do not have access to the context on the right of the current token.

The hidden state computation is solely based on the current and the earlier elements in the in the sequence and it ignores the potentially useful information located to the right of each time step.

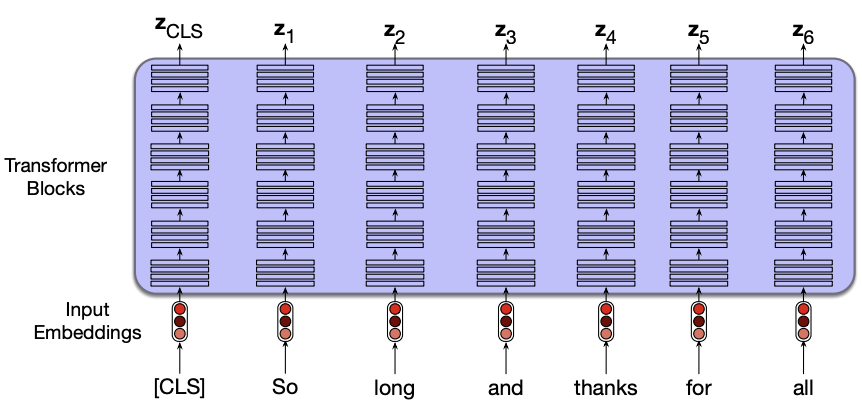

Bidirectional self-attention overcomes this limitation

Allow the self-attention mechanism to access elements from the entire input sequence.

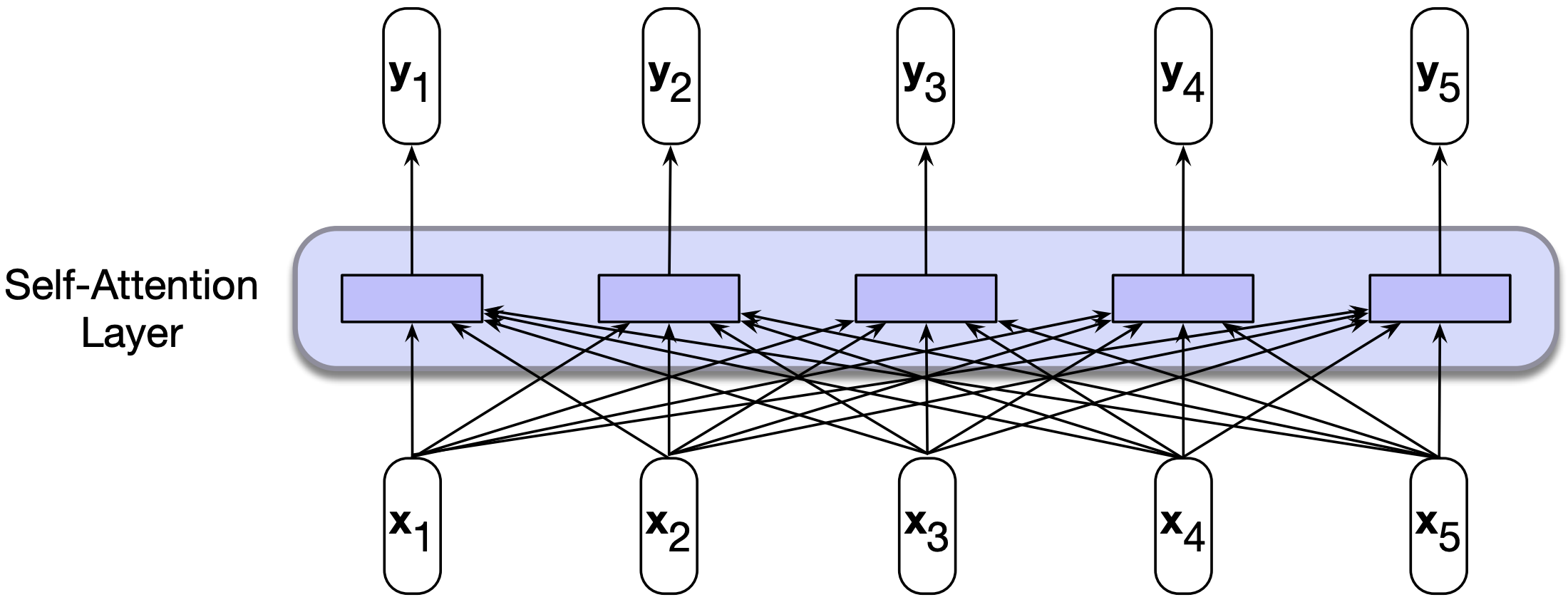

Again, it’s used to map sequences of input embeddings \((x_1, \dots, x_n)\) to sequences of output embeddings of the same length \((y_1, \dots, y_n)\).

The model attends to all inputs, both before and after the current one.

Information flows in both directions in bidirectional self-attention.

With bidirectional encoders we get contextual representations of tokens in the input sequence which are generally useful across a range of downstream applications.

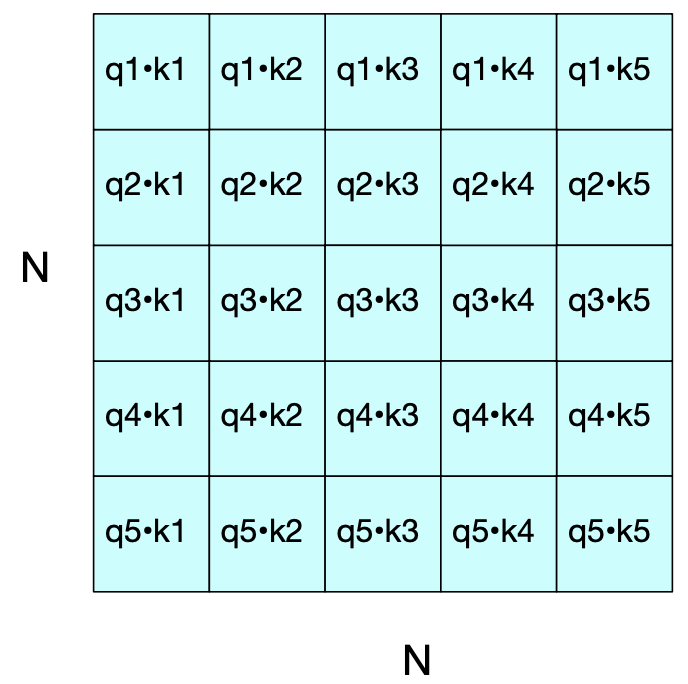

All the computations are exactly the same as before.

The matrix below shows \(q_i \cdot k_i\) comparisons. We do not set the values in the upper triangle to \(\infty\) anymore.

What’s the challenge with training bidirectional encoders?

We trained the causal transformer language model by making them iteratively predict the next word.

Can we use the same approach?

“fill-in-the-blank” task

No because that would be cheating, as we have access to the right context as well.

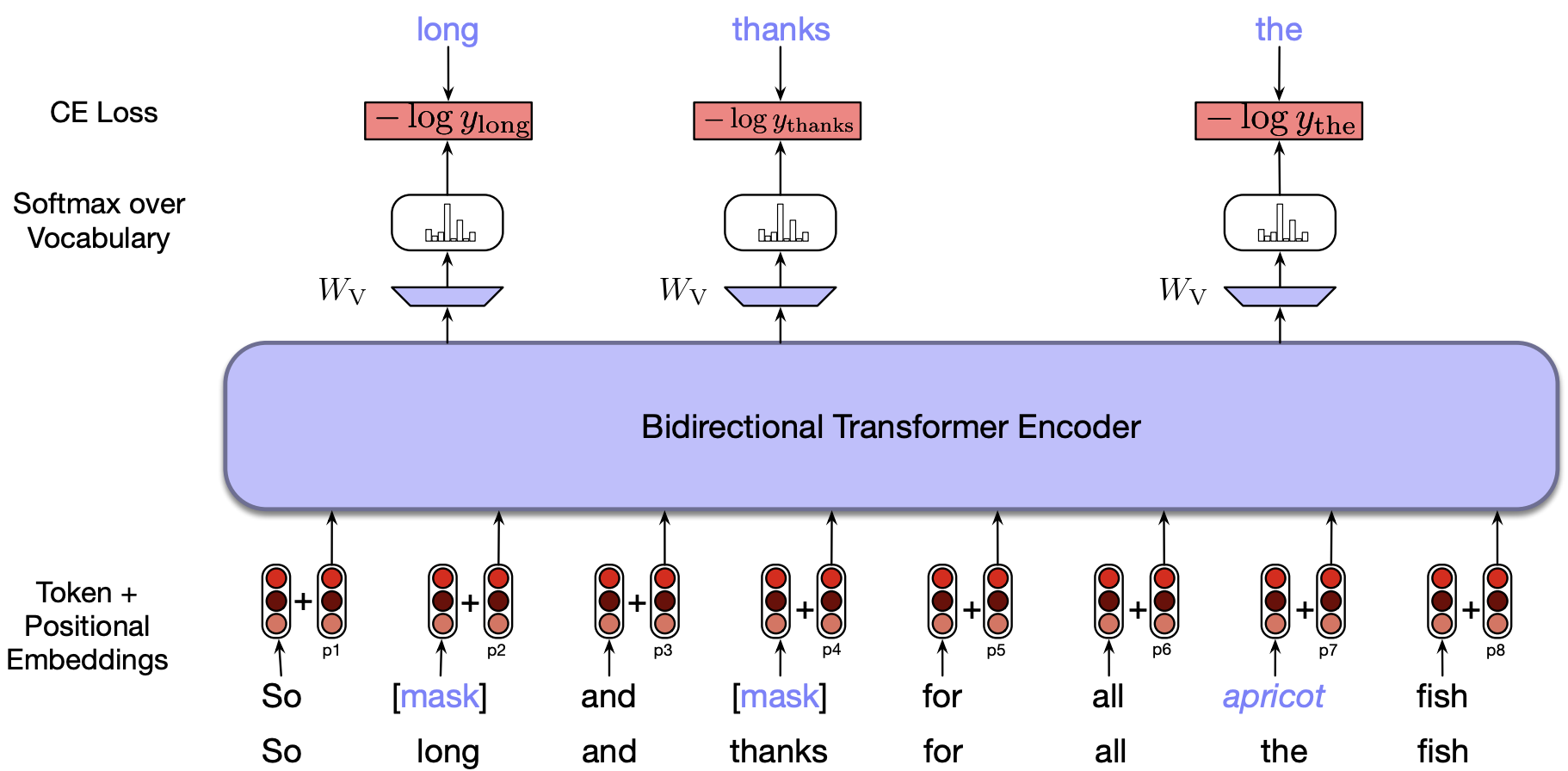

So instead of predicting the next word, the model learns to perform a fill-in-the-blank task (also referred to as cloze task).

I am studying science at UBC because I want to ___ as a data scientist.

The ___ in the exam where the fire alarm is ___ are really stressed.

Given an input sequence with one or more elements missing, the learning task is to predict the missing elements.

During training the model is deprived of one or more elements of an input sequence and the model predicts the probability distribution over all words in the vocabulary for each of these missing items.

Use cross-entropy loss for each of the model’s predictions to drive the learning process.

Masking

There are several ways to deprive the model of one or more elements of an input sequence during training.

Remove a token and replace it by a special token called

[MASK]and learn to recover the masked tokenCorrupt the input by replacing a token with a random token from the vocabulary and ask it to recover the original input

BERT used this approach of masked language modeling for training.

In BERT, \(15\%\) of the input tokens in a training sequence are sampled for learning. Of these

\(80\%\) are replaced with

[MASK]\(10\%\) are replaced with randomly selected tokens and

the remaining \(10\%\) are left unchanged.

Contextual embeddings

The representations created by masked language models are called contextual embeddings.

The methods like word2vec learned a single vector embedding for each unique word \(w\) in the vocabulary.

By contrast, the representations created by masked language have contextual information. Each word is represented by a different vector each time it appears in a different context.

Did we create contextual embeddings with causal language models?

BERT model parameters

The original bidirectional transformer encoder model (BERT) consisted of the following:

The corpus used in training BERT and other early transformer-based language models consisted of an 800 million word corpus of book texts called BooksCorpus and a 2.5 Billion word corpus derived from the English Wikipedia, for a combined size of 3.3 Billion words.

Hidden layers of size 768 (If you recall the sentence embeddings from DSCI 563 lab 1 were 768 dimensional.)

12 layers of transformer blocks with 12 multihead attention layers each!

The model has over 100M parameters.

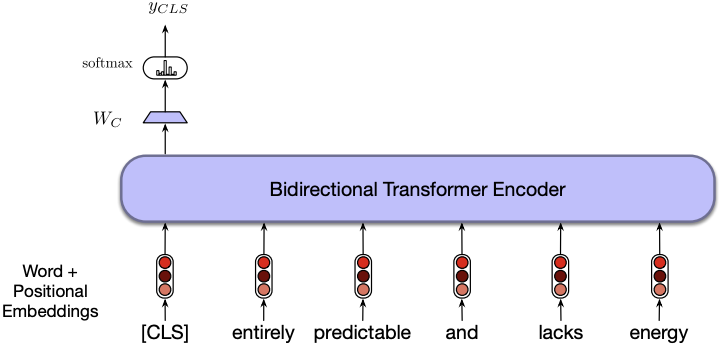

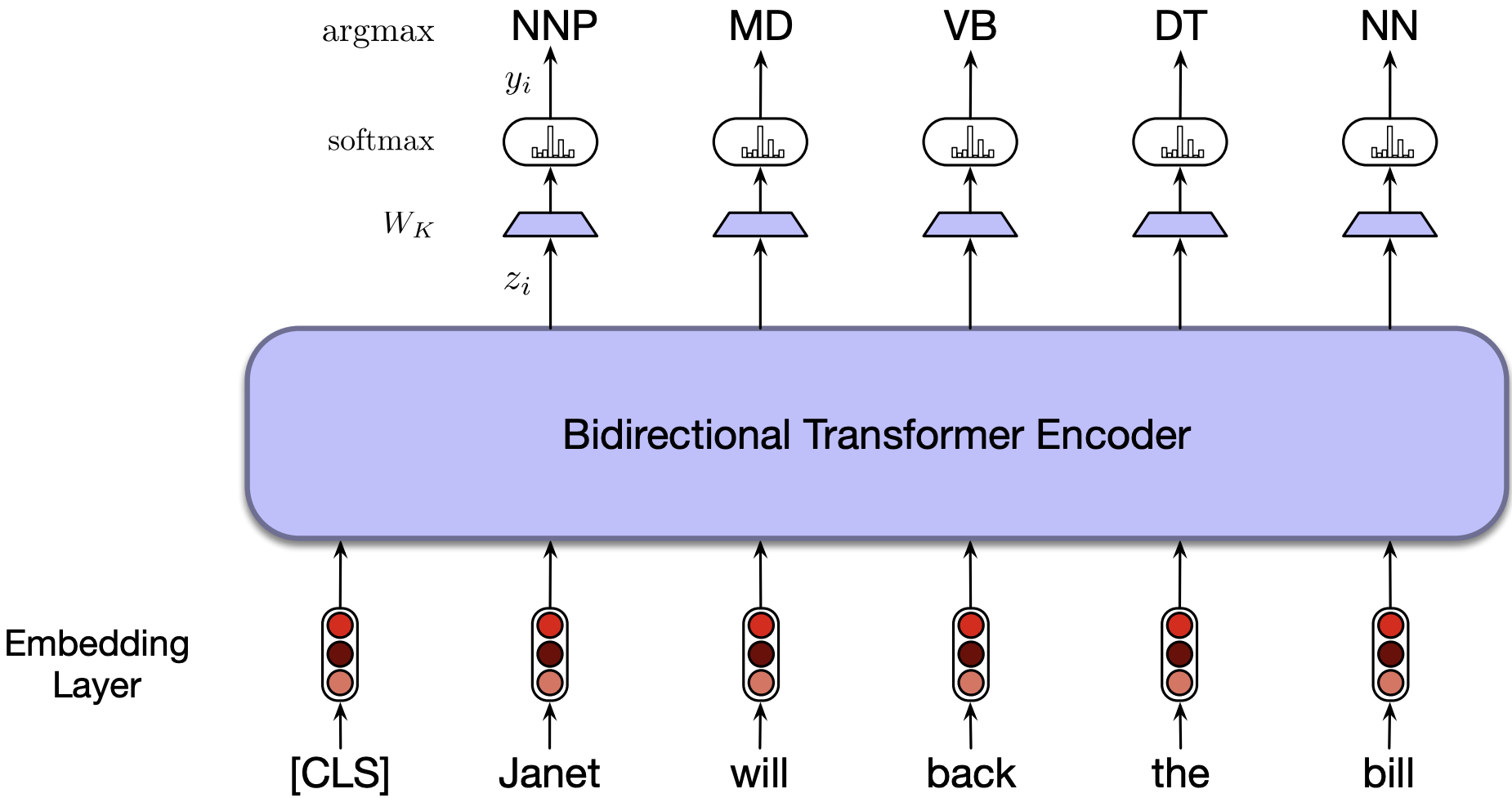

2.2 Transfer Learning through Fine-Tuning#

GPT and BERT extract generalizations from large amount of data that are useful for large number of downstream applications

How do we use these representations in our tasks?

Create interfaces from these models to downstream applications through a process called fine-tuning

Create applications on top of pre-trained models by adding a small set of application-specific parameters

Either freeze the training or only make minimal adjustments to the pre-trained language model parameters

Bidirectional sequence classification

Bidirectional sequence labeling

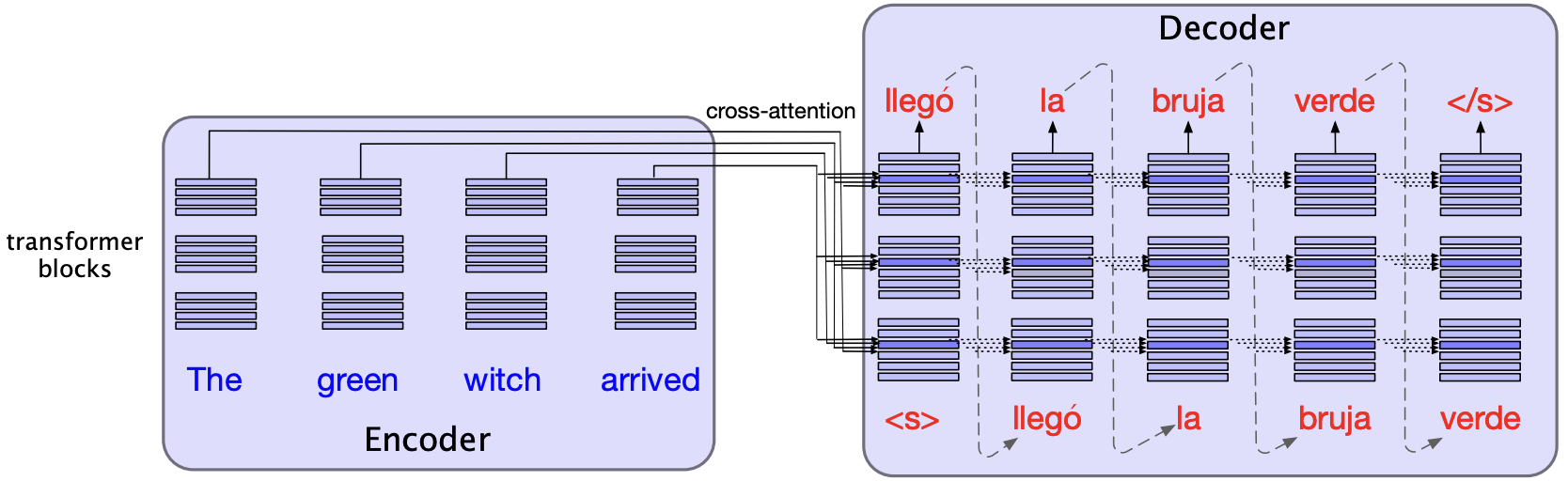

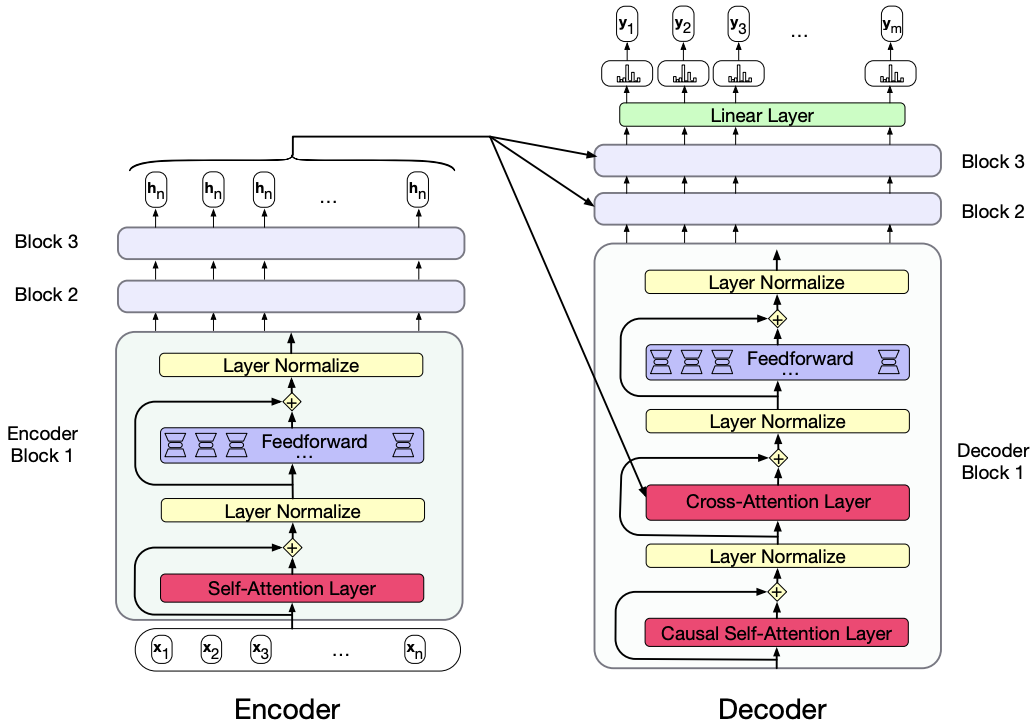

3. Encoder-decoder architecture (high-level)#

There are tasks such as machine translation or text summarization, where a combination of encoder and decoder architecture is beneficial.

For instance, when translating from English to Spanish, it would be useful to get contextual representations of the English sentence and then autoregressively generate the Spanish sentence.

In the encoder-decoder transformer architecture, the encoder uses the transformer blocks we saw in the previous lecture.

The decoder uses a more powerful block with an extra cross-attention layer that can attend to all the encoder words.

Interim summary

The table below summarizes main differences between the three types of language models.

Feature |

Decoder-only (e.g., GPT-3) |

Encoder-only (e.g., BERT, RoBERTa) |

Encoder-decoder (e.g., T5, BART) |

|---|---|---|---|

Contextual Embedding Direction |

Unidirectional |

Bidirectional |

Bidirectional |

Output Computation Based on |

Information earlier in the context |

Entire context (bidirectional) |

Encoded input context |

Text Generation |

Can naturally generate text completion |

Cannot directly generate text |

Can generate outputs naturally |

Example |

MDS Cohort 8 is the ___ |

MDS Cohort 8 is the best! → positive |

Input: Translate to Mandarin: MDS Cohort 8 is the best! Output: MDS 第八期是最棒的! |

Usage |

Recursive prediction over the sequence |

Used for classification tasks, sequence labeling taks and many other tasks |

Used for tasks requiring transformations of input (e.g., translation, summarization) |

Textual Context Embeddings |

Produces unidirectional contextual embeddings and token distributions |

Compute bidirectional contextual embeddings |

Compute bidirectional contextual embeddings in the encoder part and unidirectional embeddings in the decoder part |

Sequence Processing |

Given a prompt \(X_{1:i}\), produces embeddings for \(X_{i+1}\) to \(X_{L}\) |

Contextual embeddings are used for analysis, not sequential generation |

Encode input sequence, then decode to output sequence |

This is just a high-level introduction of common transformer architectures.

There are many things related to transformers which we have not covered. Refer to the linked resources from lecture 7 if you want to learn more.

In particular, go through these chapters from Jurafsky & Martin book: Chapter 10, Chapter 11, Chapter 13

The Illustrated Transformer is an excellent resource.

Transformers are not only for NLP. They have been successfully applied in many other domains often with state-of-the-art results. For example,

Break#

4. Implementing transformer blocks with PyTorch#

To implement transformer models using PyTorch, you’ll find the following classes particularly useful:

MultiheadAttention: This class is crucial for allowing the model to jointly attend to information from different representation subspaces at different positions.

For encoder-only architectures, use

TransformerEncoderLayer, which combines a self-attention mechanism with a feedforward neural network.For decoder-only and encoder-decoder architectures, the

TransformerDecoderLayeris appropriate. This layer includes self-attention, cross-attention (for encoder-decoder structures), and feedforward neural network components.TransformerDecoder which stacks

Ndecoder layers.

Check out this demo of recipe generation which demonstrates:

Tokenization of text data

Construction of a PyTorch Dataset

Definition of a transformer-based model architecture

Implementation of a training loop for text generation

5. 🤗 Transformers library#

The Hugging Face Transformers library is a popular open-source Python library for working with transformer models.

It provides a wide range of pre-trained transformer models which have achieved top performance in many state-of-the-art NLP tasks.

It provides

an easy-to-use API that allows using these pre-trained models off-the-shelf for tasks such as text classification, question answering, named entity recognition, or machine translation.

an easy-to-use API that allows developers to fine-tune pre-trained transformer models on their own NLP tasks.

It also includes utilities for tokenization, model inference, and training and other useful features for model visualization, model comparison, and model sharing via the Hugging Face model hub.

It supports various deep learning frameworks such as PyTorch and TensorFlow and provides a unified inferface to working with transformer models across these frameworks.

Excellent documentation and very useful tool for NLP practioners and researchers

Installation

First, install the library if it’s not already in your course environment. On the command line, activate the course environment and install the library. Here you will find installation instructions.

> conda activate 575

> pip install transformers

5.1 🤗 Transformers pipelines#

Many NLP applications, such as sentiment analysis or text summarization, benefit from pre-trained models that work effectively out-of-the-box on diverse datasets without requiring additional training.

An easiest way to get started with using pre-trained transformer models is using pipelines which abstracts many things away from the user.

🤗 Transformers pipelines encapsulate the essential components needed for different tasks in a user-friendly interface.

Let’s create a sentiment-analysis pipeline using distilbert-base-uncased-finetuned-sst-2-english model.

from transformers import pipeline, AutoModelForTokenClassification, AutoTokenizer

# Sentiment analysis pipeline

analyzer = pipeline("sentiment-analysis", model='distilbert-base-uncased-finetuned-sst-2-english')

analyzer(["MDS cohort 8 is the best!",

"I am happy that you will be graduating soon :).",

"But I am also sad that I won't be able to see you as much."])

[{'label': 'POSITIVE', 'score': 0.9998494386672974},

{'label': 'POSITIVE', 'score': 0.9998409748077393},

{'label': 'NEGATIVE', 'score': 0.9981827735900879}]

5.2 Model interpretation#

One appealing aspect of transformer models is the ability to examine and interpret the attention weights, which can provide insights into how the model is processing input data. Let’s explore this by using the transformers_interpret tool to interpret the classification results.

You’ll have to install the tool in the course environment.

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model_name = "distilbert-base-uncased-finetuned-sst-2-english"

model = AutoModelForSequenceClassification.from_pretrained(model_name)

tokenizer = AutoTokenizer.from_pretrained(model_name)

# With both the model and tokenizer initialized we are now able to get explanations on an example text.

from transformers_interpret import SequenceClassificationExplainer

cls_explainer = SequenceClassificationExplainer(

model,

tokenizer)

word_attributions = cls_explainer("I am happy that you will be graduating soon :)")

word_attributions

[('[CLS]', 0.0),

('i', 0.020945378112158998),

('am', 0.1985904452770377),

('happy', 0.9176834067631326),

('that', -0.25655077198710985),

('you', 0.08392903515004298),

('will', 0.08156900919862792),

('be', 0.05088697685229797),

('graduating', 0.05627688259729468),

('soon', 0.16000869429218062),

(':', -0.083720360205643),

(')', 0.009795582263717779),

('[SEP]', 0.0)]

cls_explainer.visualize("distilbert_viz.html")

| True Label | Predicted Label | Attribution Label | Attribution Score | Word Importance |

|---|---|---|---|---|

| [CLS] i am happy that you will be graduating soon : ) [SEP] | ||||

| True Label | Predicted Label | Attribution Label | Attribution Score | Word Importance |

|---|---|---|---|---|

| [CLS] i am happy that you will be graduating soon : ) [SEP] | ||||

from transformers import AutoTokenizer, AutoModel, utils

utils.logging.set_verbosity_error() # Suppress standard warnings

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModel.from_pretrained(model_name, output_attentions=True)

inputs = tokenizer.encode("I am happy that you will be graduating soon :).", return_tensors='pt')

outputs = model(inputs)

attention = outputs[-1] # Output includes attention weights when output_attentions=True

tokens = tokenizer.convert_ids_to_tokens(inputs[0])

from bertviz import head_view

head_view(attention, tokens)

5.3 Zero-shot classification with pretrained transformer models#

The sentiment-analysis pipeline mentioned earlier is suitable for off-the-shelf sentiment analysis. But what if our aim is not sentiment analysis, but rather emotion classification?

Suppose our goal is emotion classification, where we want to assign emotion labels like “joy”, “anger”, “fear”, and “surprise” to text. For this task, we will utilize the emotion dataset loaded below, which contains English Twitter messages annotated with six basic emotions: anger, fear, joy, love, sadness, and surprise. For more detailed information please refer to the paper.

You could either do fine-tuning of a pre-trained model or use zero-shot classification.

from datasets import load_dataset

dataset = load_dataset("dair-ai/emotion")

/Users/kvarada/miniconda3/envs/575/lib/python3.12/site-packages/datasets/load.py:1461: FutureWarning: The repository for dair-ai/emotion contains custom code which must be executed to correctly load the dataset. You can inspect the repository content at https://hf.co/datasets/dair-ai/emotion

You can avoid this message in future by passing the argument `trust_remote_code=True`.

Passing `trust_remote_code=True` will be mandatory to load this dataset from the next major release of `datasets`.

warnings.warn(

dataset["train"][10]

{'text': 'i feel like i have to make the suffering i m seeing mean something',

'label': 0}

dataset["train"]["text"][10]

'i feel like i have to make the suffering i m seeing mean something'

dataset["train"]["label"][10]

0

Yin et al. proposed that pre-trained Natural Language Inference (NLI) models can be used for zero-shot classifiers. Natural Language Inference (NLI), also known as textual entailment or simply inference, is a fundamental task in NLP that involves determining the logical relationship between two given pieces of text, typically referred to as the premise and the hypothesis. Here is an example:

Premise: “All MDS students worked diligently on their assignment.”

Hypothesis: “Most students in MDS received an A on the assignment.”

In this case, based on reasoning abilities, a human would likely conclude the hypothesis above as a reasonable inference from the premise. A machine learning model trained on NLI data is designed to recognize such logical relationship and classify the pair as “entailment” or “contradiction”.

Yin et al.’s method works for zero-shot-classification by posing the sequence to be classified as the NLI premise. A hypothesis is created from each candidate label. For example, if we want to evaluate whether a sequence belongs to the class “joy”, we could construct a hypothesis of “This text expresses joy.” The probabilities for entailment and contradiction are then converted to label probabilities.

Let’s classifying emotions with zero-shot-classification pipeline and facebook/bart-large-mnli model. In other words, get predictions from the pretrained model without any fine-tuning.

Refer to the documentation.

from transformers import AutoTokenizer

from transformers import pipeline

import torch

#Load the pretrained model

model_name = "facebook/bart-large-mnli"

classifier = pipeline('zero-shot-classification', model=model_name)

exs = dataset["test"]["text"][:10]

candidate_labels = ["sadness", "joy", "love","anger", "fear", "surprise"]

outputs = classifier(exs, candidate_labels)

pd.DataFrame(outputs)

| sequence | labels | scores | |

|---|---|---|---|

| 0 | im feeling rather rotten so im not very ambitious right now | [sadness, anger, surprise, fear, joy, love] | [0.7367963194847107, 0.10041721910238266, 0.09770283848047256, 0.05880146473646164, 0.004266373347491026, 0.0020157054532319307] |

| 1 | im updating my blog because i feel shitty | [sadness, surprise, anger, fear, joy, love] | [0.742975652217865, 0.13775935769081116, 0.05828309431672096, 0.054218094795942307, 0.005098145455121994, 0.0016656097723171115] |

| 2 | i never make her separate from me because i don t ever want her to feel like i m ashamed with her | [love, sadness, surprise, fear, anger, joy] | [0.3153635263442993, 0.22490312159061432, 0.19025255739688873, 0.1365167796611786, 0.06732949614524841, 0.06563455611467361] |

| 3 | i left with my bouquet of red and yellow tulips under my arm feeling slightly more optimistic than when i arrived | [surprise, joy, love, sadness, fear, anger] | [0.42182061076164246, 0.33366987109184265, 0.21740469336509705, 0.010598981752991676, 0.009764240123331547, 0.0067415558733046055] |

| 4 | i was feeling a little vain when i did this one | [surprise, anger, fear, love, joy, sadness] | [0.5639422535896301, 0.17000217735767365, 0.08645083010196686, 0.07528447359800339, 0.06986634433269501, 0.034453898668289185] |

| 5 | i cant walk into a shop anywhere where i do not feel uncomfortable | [surprise, fear, sadness, anger, joy, love] | [0.3703344762325287, 0.36559322476387024, 0.14264947175979614, 0.09872687608003616, 0.016457417979836464, 0.006238470319658518] |

| 6 | i felt anger when at the end of a telephone call | [anger, surprise, fear, sadness, joy, love] | [0.9760519862174988, 0.012534412555396557, 0.0036342781968414783, 0.00323868403211236, 0.0029335564468055964, 0.0016070229467004538] |

| 7 | i explain why i clung to a relationship with a boy who was in many ways immature and uncommitted despite the excitement i should have been feeling for getting accepted into the masters program at the university of virginia | [surprise, joy, love, sadness, fear, anger] | [0.43820154666900635, 0.23223182559013367, 0.12985974550247192, 0.0756307914853096, 0.06867080181837082, 0.05540527030825615] |

| 8 | i like to have the same breathless feeling as a reader eager to see what will happen next | [surprise, joy, love, fear, anger, sadness] | [0.7675779461860657, 0.13846923410892487, 0.03162868320941925, 0.029056446626782417, 0.02683253027498722, 0.006435177754610777] |

| 9 | i jest i feel grumpy tired and pre menstrual which i probably am but then again its only been a week and im about as fit as a walrus on vacation for the summer | [surprise, sadness, anger, fear, joy, love] | [0.7340186834335327, 0.11860275268554688, 0.07453767955303192, 0.0580662377178669, 0.010977287776768208, 0.003797301324084401] |

5.4 Prompts#

Up until recently, language models were only a component of a large system such as speech recognition system or machine translation system.

Now they are becoming more capable of being a standalone system.

Language models are capable of conditional generation. So they are capable of generating completion given a prompt.

$\(\text{prompt} \rightarrow \text{completion}\)$This simple interface opens up lets us use language models for a variety of tasks by just changing the prompt.

Let’s try a couple of prompts with the T5 encoder decoder language model.

The following examples are based on the documentation here.

import torch

from transformers import AutoTokenizer, AutoModelWithLMHead

tokenizer = AutoTokenizer.from_pretrained('t5-base')

model = AutoModelWithLMHead.from_pretrained('t5-base', return_dict=True)

/Users/kvarada/miniconda3/envs/575/lib/python3.12/site-packages/transformers/models/auto/modeling_auto.py:1595: FutureWarning: The class `AutoModelWithLMHead` is deprecated and will be removed in a future version. Please use `AutoModelForCausalLM` for causal language models, `AutoModelForMaskedLM` for masked language models and `AutoModelForSeq2SeqLM` for encoder-decoder models.

warnings.warn(

sequence = ('''

A transformer is a deep learning model that adopts the mechanism of self-attention,

differentially weighting the significance of each part of the input data.

It is used primarily in the fields of natural language processing (NLP) and computer vision (CV).

Like recurrent neural networks (RNNs), transformers are designed to process sequential input data,

such as natural language, with applications towards tasks such as translation and text summarization.

However, unlike RNNs, transformers process the entire input all at once.

The attention mechanism provides context for any position in the input sequence.

For example, if the input data is a natural language sentence,

the transformer does not have to process one word at a time.

This allows for more parallelization than RNNs and therefore reduces training times.

Transformers were introduced in 2017 by a team at Google Brain and are increasingly the model of choice

for NLP problems, replacing RNN models such as long short-term memory (LSTM).

The additional training parallelization allows training on larger datasets.

This led to the development of pretrained systems such as BERT (Bidirectional Encoder Representations from Transformers)

and GPT (Generative Pre-trained Transformer), which were trained with large language datasets,

such as the Wikipedia Corpus and Common Crawl, and can be fine-tuned for specific tasks.

Before transformers, most state-of-the-art NLP systems relied on gated RNNs,

such as LSTMs and gated recurrent units (GRUs), with added attention mechanisms.

Transformers also make use of attention mechanisms but, unlike RNNs, do not have a recurrent structure.

This means that provided with enough training data, attention mechanisms alone can match the performance

of RNNs with attention.

Gated RNNs process tokens sequentially, maintaining a state vector that contains

a representation of the data seen prior to the current token. To process the

nth token, the model combines the state representing the sentence up to token n-1 with the information

of the new token to create a new state, representing the sentence up to token n.

Theoretically, the information from one token can propagate arbitrarily far down the sequence,

if at every point the state continues to encode contextual information about the token.

In practice this mechanism is flawed: the vanishing gradient problem leaves the model's state at

the end of a long sentence without precise, extractable information about preceding tokens.

The dependency of token computations on the results of previous token computations also makes it hard

to parallelize computation on modern deep-learning hardware. This can make the training of RNNs inefficient.

These problems were addressed by attention mechanisms. Attention mechanisms let a model draw

from the state at any preceding point along the sequence. The attention layer can access

all previous states and weigh them according to a learned measure of relevance, providing

relevant information about far-away tokens.

A clear example of the value of attention is in language translation, where context is essential

to assign the meaning of a word in a sentence. In an English-to-French translation system,

the first word of the French output most probably depends heavily on the first few words of the English input.

However, in a classic LSTM model, in order to produce the first word of the French output, the model

is given only the state vector after processing the last English word. Theoretically, this vector can encode

information about the whole English sentence, giving the model all the necessary knowledge.

In practice, this information is often poorly preserved by the LSTM.

An attention mechanism can be added to address this problem: the decoder is given access to the state vectors of every English input word,

not just the last, and can learn attention weights that dictate how much to attend to each English input state vector.

''')

prompt = "summarize: "

inputs = tokenizer.encode(prompt + sequence, return_tensors="pt", max_length=512, truncation=True)

summary_ids = model.generate(inputs, max_length=150, min_length=80, length_penalty=5., num_beams=2)

summary_ids

tensor([[ 0, 3, 9, 19903, 19, 3, 9, 1659, 1036, 825,

24, 4693, 7, 8, 8557, 13, 1044, 18, 25615, 3,

5, 9770, 3, 60, 14907, 24228, 5275, 41, 14151, 567,

7, 201, 19903, 7, 433, 8, 1297, 3785, 66, 44,

728, 3, 5, 8, 1388, 8557, 795, 2625, 21, 136,

1102, 16, 8, 3785, 5932, 3, 5, 19903, 7, 130,

3665, 16, 1233, 57, 3, 9, 372, 44, 10283, 2241,

3, 5, 8, 1388, 8557, 19, 1126, 12, 24, 13,

3, 9, 3, 60, 14907, 24228, 1229, 41, 14151, 567,

61, 1]])

tokenizer.decode(summary_ids[0])

'<pad> a transformer is a deep learning model that adopts the mechanism of self-attention. unlike recurrent neural networks (RNNs), transformers process the entire input all at once. the attention mechanism provides context for any position in the input sequence. transformers were introduced in 2017 by a team at google brain. the attention mechanism is similar to that of a recurrent neural network (RNN)</s>'

Let’s try translation with the same model.

input_ids = tokenizer("translate English to German: The house is wonderful.", return_tensors="pt").input_ids

output = model.generate(input_ids)

output

tensor([[ 0, 644, 4598, 229, 19250, 5, 1]])

tokenizer.decode(output[0])

'<pad> Das Haus ist wunderbar.</s>'

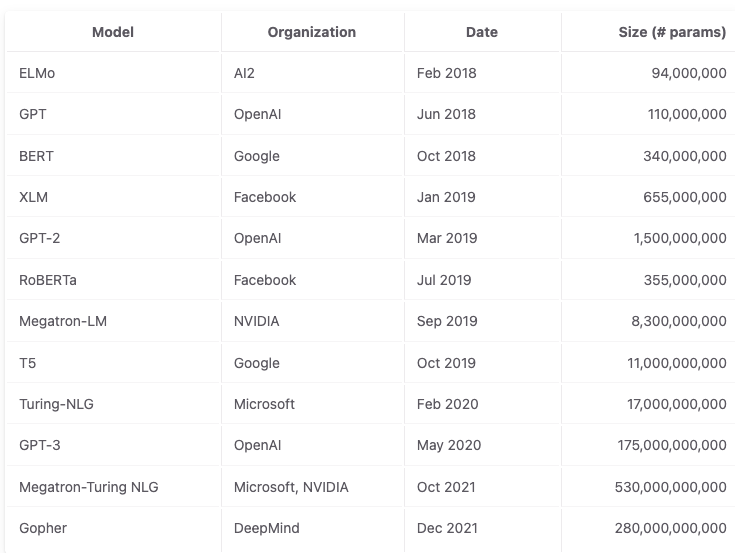

Increase in size of language models#

The model sizes have increased by an order of 500x over the last 4 years.

Harms of large language models#

While these models are super powerful and useful, be mindful of the harms caused by these models. Some of the harms as summarized here are:

misinformation

performance disparties

social biases and stereotypes

toxicity

security and privacy risks

copyright and legal protections

environmental impact

centralization of power

An important component of large language models that we haven’t yet discussed is Reinforcement Learning with Human Feedback (RLHF). Vincent will cover this topic in the upcoming Capstone seminar series. 🎉🎉

Summary and wrap up#

Week 1 ✅#

Markov models, language models, text generation

Applications of Markov models#

Week 2 ✅#

Hidden Markov models, speech recognition, POS tagging

Week 3 ✅#

Topic modeling (Latent Dirichlet Allocation (LDA)), organizing documents

Introduction to Recurrent Neural Networks (RNNs)

Week 4 ✅#

Transformers

Final remarks#

That’s all! I had fun teaching you this complex material. I very much appreciate your support, patience, and great questions ♥️!

It has been a challenging year but we all tried to make the best out of it. I wish you every success in your job search. Stay in touch!

Time for course evaluations#

I would love to hear your thoughts on this course. When you get a chance, it’ll be great if you fill in the evaluation survey for this course on Canvas.

The evaluation closing date is: April 26th, 2024