Lecture 6: Hyperparameter Optimization and Optimization Bias#

UBC Master of Data Science program, 2023-24

Instructor: Varada Kolhatkar

Lecture plan#

Hyperparameter optimization: Big picture

Grid search

Break

Randomized search

Optimization bias (summary)

iClicker

Imports, Announcements, and LO#

Imports#

import os

import sys

sys.path.append("code/.")

import matplotlib.pyplot as plt

import mglearn

import numpy as np

import pandas as pd

from plotting_functions import *

from sklearn.dummy import DummyClassifier

from sklearn.impute import SimpleImputer

from sklearn.model_selection import cross_val_score, cross_validate, train_test_split

from sklearn.pipeline import Pipeline, make_pipeline

from sklearn.preprocessing import OneHotEncoder, StandardScaler

from sklearn.svm import SVC

from sklearn.tree import DecisionTreeClassifier

from utils import *

%matplotlib inline

pd.set_option("display.max_colwidth", 200)

from sklearn import set_config

set_config(display="diagram")

Learning outcomes#

From this lecture, you will be able to

explain the need for hyperparameter optimization

carry out hyperparameter optimization using sklearn’s GridSearchCV and RandomizedSearchCV

explain different hyperparameters of GridSearchCV

explain the importance of selecting a good range for the values

explain optimization bias

identify and reason when to trust and not trust reported accuracies

Hyperparameter optimization motivation#

Motivation#

Remember that the fundamental goal of supervised machine learning is to generalize beyond what we see in the training examples.

We have been using data splitting and cross-validation to provide a framework to approximate generalization error.

With this framework, we can improve the model’s generalization performance by tuning model hyperparameters using cross-validation on the training set.

Hyperparameters: the problem#

In order to improve the generalization performance, finding the best values for the important hyperparameters of a model is necessary for almost all models and datasets.

Picking good hyperparameters is important because if we don’t do it, we might end up with an underfit or overfit model.

Some ways to pick hyperparameters:#

Manual optimization based on expert knowledge or heuristics

Data-driven or automated optimization

Manual hyperparameter optimization#

Advantage: we may have some intuition about what might work.

E.g., if I’m massively overfitting, try decreasing max_depth or C.

Disadvantages

it takes a lot of work

not reproducible

in very complicated cases, our intuition might be worse than a data-driven approach

Automated hyperparameter optimization#

Formulate hyperparameter optimization as one big search problem.

Often we have many hyperparameters of different types: categorical, integer, and continuous.

Often, the search space is quite big and systematic search for optimal values is infeasible.

In homework assignments, we have been carrying out hyperparameter search by exhaustively trying different possible combinations of the hyperparameters of interest.

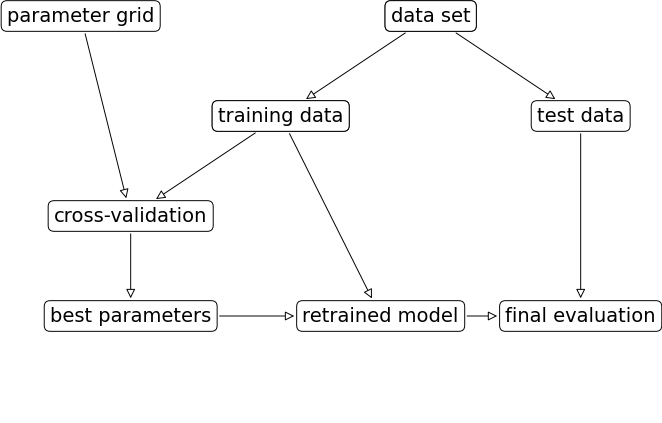

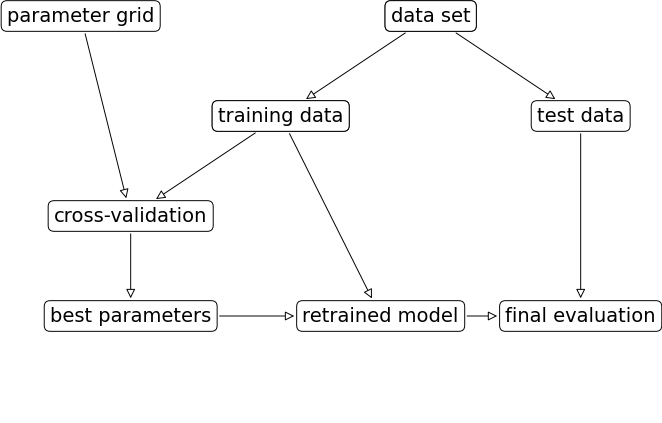

mglearn.plots.plot_grid_search_overview()

Let’s look at an example of tuning max_depth of the DecisionTreeClassifier on the Spotify dataset.

spotify_df = pd.read_csv("data/spotify.csv", index_col=0)

X_spotify = spotify_df.drop(columns=["target", "artist"])

y_spotify = spotify_df["target"]

X_spotify.head()


X_train, X_test, y_train, y_test = train_test_split(

X_spotify, y_spotify, test_size=0.2, random_state=123

)

numeric_feats = ['acousticness', 'danceability', 'energy',

'instrumentalness', 'liveness', 'loudness',

'speechiness', 'tempo', 'valence']

categorical_feats = ['time_signature', 'key']

passthrough_feats = ['mode']

text_feat = "song_title"

from sklearn.compose import make_column_transformer

from sklearn.feature_extraction.text import CountVectorizer

preprocessor = make_column_transformer(

(StandardScaler(), numeric_feats),

("passthrough", passthrough_feats),

(OneHotEncoder(handle_unknown = "ignore"), categorical_feats),

(CountVectorizer(max_features=100, stop_words="english"), text_feat)

)

svc_pipe = make_pipeline(preprocessor, SVC())  # pass an SVC instance, not the SVC class itself

best_score = 0
param_grid = {"max_depth": np.arange(1, 20, 2)}
results_dict = {"max_depth": [], "mean_cv_score": []}

for depth in param_grid["max_depth"]:  # for each value of max_depth, train a decision tree
    dt_pipe = make_pipeline(preprocessor, DecisionTreeClassifier(max_depth=depth))
    scores = cross_val_score(dt_pipe, X_train, y_train)  # perform 5-fold cross-validation
    mean_score = np.mean(scores)  # compute mean cross-validation accuracy
    if mean_score > best_score:  # if we got a better score, store the score and parameters
        best_score = mean_score
        best_params = {"max_depth": depth}
    results_dict["max_depth"].append(depth)
    results_dict["mean_cv_score"].append(mean_score)

best_params

{'max_depth': 5}

best_score

0.7309405226621543

Let’s try an SVM with an RBF kernel and tune C and gamma on the same dataset.

pipe_svm = make_pipeline(preprocessor, SVC()) # We need scaling for SVM RBF

pipe_svm.fit(X_train, y_train)

Pipeline(steps=[('columntransformer',

ColumnTransformer(transformers=[('standardscaler',

StandardScaler(),

['acousticness',

'danceability', 'energy',

'instrumentalness',

'liveness', 'loudness',

'speechiness', 'tempo',

'valence']),

('passthrough', 'passthrough',

['mode']),

('onehotencoder',

OneHotEncoder(handle_unknown='ignore'),

['time_signature', 'key']),

('countvectorizer',

CountVectorizer(max_features=100,

stop_words='english'),

'song_title')])),

('svc', SVC())])

Let’s try cross-validation with default hyperparameters of SVC.

scores = cross_validate(pipe_svm, X_train, y_train, return_train_score=True)

pd.DataFrame(scores).mean()

fit_time 0.049904

score_time 0.011824

test_score 0.734011

train_score 0.828891

dtype: float64

Now let’s try an exhaustive hyperparameter search using for loops. This is the pattern we have been following so far:

for gamma in [0.01, 1, 10, 100]:  # for some values of gamma
    for C in [0.01, 1, 10, 100]:  # for some values of C
        for fold in folds:
            fit on the training portion with the given C and gamma
            score on the validation portion
        compute the average score
pick the hyperparameter values that yield the best average score
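The pseudocode above can be sketched as runnable Python. This is a minimal, self-contained sketch under two assumptions: a synthetic dataset from make_classification stands in for the Spotify data so that it runs on its own, and the fold loop is made explicit with KFold (which cross_val_score otherwise hides).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import KFold
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for a training set (assumption: not the Spotify data)
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

folds = KFold(n_splits=5, shuffle=True, random_state=0)
best_score, best_params = -np.inf, None

for gamma in [0.01, 1, 10, 100]:  # for some values of gamma
    for C in [0.01, 1, 10, 100]:  # for some values of C
        fold_scores = []
        for train_idx, valid_idx in folds.split(X):
            # Fit on the training portion of this fold with the given C and gamma
            model = make_pipeline(StandardScaler(), SVC(C=C, gamma=gamma))
            model.fit(X[train_idx], y[train_idx])
            # Score on the validation portion of this fold
            fold_scores.append(model.score(X[valid_idx], y[valid_idx]))
        mean_score = np.mean(fold_scores)  # average over the 5 folds
        if mean_score > best_score:  # keep the best combination seen so far
            best_score, best_params = mean_score, {"C": C, "gamma": gamma}

print(best_params, round(best_score, 3))
```

One difference from the lecture code: for a classifier, cross_val_score uses stratified folds without shuffling by default, whereas this sketch uses shuffled, unstratified KFold, so the exact scores will differ.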

best_score = 0
param_grid = {
    "C": [0.001, 0.01, 0.1, 1, 10, 100],
    "gamma": [0.001, 0.01, 0.1, 1, 10, 100],
}
results_dict = {"C": [], "gamma": [], "mean_cv_score": []}

for gamma in param_grid["gamma"]:
    for C in param_grid["C"]:  # for each combination of parameters, train an SVC
        pipe_svm = make_pipeline(preprocessor, SVC(gamma=gamma, C=C))
        scores = cross_val_score(pipe_svm, X_train, y_train)  # perform cross-validation
        mean_score = np.mean(scores)  # compute mean cross-validation accuracy
        if mean_score > best_score:  # if we got a better score, store the score and parameters
            best_score = mean_score
            best_parameters = {"C": C, "gamma": gamma}
        results_dict["C"].append(C)
        results_dict["gamma"].append(gamma)
        results_dict["mean_cv_score"].append(mean_score)

best_parameters

{'C': 1, 'gamma': 0.1}

best_score

0.7352614272253524

df = pd.DataFrame(results_dict)

df.sort_values(by="mean_cv_score", ascending=False).head(10)

| | C | gamma | mean_cv_score |

|---|---|---|---|

| 15 | 1.0 | 0.100 | 0.735261 |

| 16 | 10.0 | 0.100 | 0.722249 |

| 11 | 100.0 | 0.010 | 0.716657 |

| 10 | 10.0 | 0.010 | 0.716655 |

| 5 | 100.0 | 0.001 | 0.705511 |

| 14 | 0.1 | 0.100 | 0.701173 |

| 9 | 1.0 | 0.010 | 0.691877 |

| 17 | 100.0 | 0.100 | 0.677601 |

| 4 | 10.0 | 0.001 | 0.673277 |

| 8 | 0.1 | 0.010 | 0.652828 |

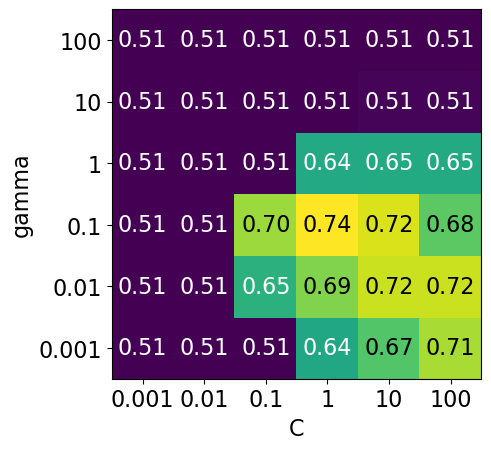

# plot the mean cross-validation scores: rows are gamma values, columns are C values
scores = np.array(df.mean_cv_score).reshape(6, 6)
my_heatmap(
    scores,
    xlabel="C",
    xticklabels=param_grid["C"],
    ylabel="gamma",
    yticklabels=param_grid["gamma"],
    cmap="viridis",
    fmt="%0.2f",
);

We have 6 possible values for C and 6 possible values for gamma. In 5-fold cross-validation, five accuracies are computed for each combination of parameter values.

So to evaluate the accuracy of the SVM using 6 values of C and 6 values of gamma with five-fold cross-validation, we need to train 36 * 5 = 180 models!

np.prod(list(map(len, param_grid.values())))

36
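The count above is just the product of the number of values per hyperparameter, multiplied by the number of folds. A minimal sketch of that arithmetic, reusing the same grid values (n_folds = 5 assumes the default of cross_val_score):

```python
import math

# Same grid of values as the loop above
param_grid = {
    "C": [0.001, 0.01, 0.1, 1, 10, 100],
    "gamma": [0.001, 0.01, 0.1, 1, 10, 100],
}
n_folds = 5  # default number of folds in cross_val_score

n_combinations = math.prod(len(values) for values in param_grid.values())
n_fits = n_combinations * n_folds
print(n_combinations, n_fits)  # 36 180
```

The cost grows multiplicatively: adding the six max_features values used in the GridSearchCV example gives 6 × 6 × 6 = 216 combinations, matching the 216 columns of cv_results_ shown later, and 1,080 cross-validation fits with 5 folds.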

Once we have optimized hyperparameters, we retrain a model on the full training set with these optimized hyperparameters.

pipe_svm = make_pipeline(preprocessor, SVC(**best_parameters))

pipe_svm.fit(

X_train, y_train

) # Retrain a model with optimized hyperparameters on the combined training and validation set

Pipeline(steps=[('columntransformer',

ColumnTransformer(transformers=[('standardscaler',

StandardScaler(),

['acousticness',

'danceability', 'energy',

'instrumentalness',

'liveness', 'loudness',

'speechiness', 'tempo',

'valence']),

('passthrough', 'passthrough',

['mode']),

('onehotencoder',

OneHotEncoder(handle_unknown='ignore'),

['time_signature', 'key']),

('countvectorizer',

CountVectorizer(max_features=100,

stop_words='english'),

'song_title')])),

('svc', SVC(C=1, gamma=0.1))])

Note

In a Python function call, a double asterisk (**) before a dictionary unpacks it into keyword arguments: the dictionary’s keys become the argument names and its values become the corresponding argument values. So here SVC(**best_parameters) is equivalent to SVC(C=1, gamma=0.1).
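A minimal sketch of ** unpacking; describe is a hypothetical helper used only to echo its arguments, and the dictionary mirrors the best_parameters found above:

```python
from sklearn.svm import SVC


def describe(C=1.0, gamma="scale"):
    """Hypothetical helper that just echoes its keyword arguments."""
    return f"C={C}, gamma={gamma}"


best_parameters = {"C": 1, "gamma": 0.1}

# These two calls are equivalent: ** unpacks the dict into keyword arguments
print(describe(**best_parameters))  # C=1, gamma=0.1
print(describe(C=1, gamma=0.1))     # C=1, gamma=0.1

# The same mechanism configures an estimator from a dict of hyperparameters
svc = SVC(**best_parameters)
print(svc.C, svc.gamma)  # 1 0.1
```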

Finally, we evaluate the performance of this model on the test set.

pipe_svm.score(X_test, y_test) # Final evaluation on the test data

0.75

This process is so common that there are some standard methods in scikit-learn where we can carry out all of this in a more compact way.

mglearn.plots.plot_grid_search_overview()

In this lecture, we will look at the two most commonly used automated optimization methods in scikit-learn:

Exhaustive grid search: sklearn.model_selection.GridSearchCV

Randomized search: sklearn.model_selection.RandomizedSearchCV

The “CV” stands for cross-validation; these methods have built-in cross-validation.

Exhaustive grid search: sklearn.model_selection.GridSearchCV#

For GridSearchCV we need

an instantiated model or a pipeline

a parameter grid: a user specifies a set of values for each hyperparameter

other optional arguments

The method considers the Cartesian product of these sets and evaluates each combination one by one.
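To make "product of the sets" concrete, here is a small sketch using scikit-learn’s ParameterGrid, which GridSearchCV uses to enumerate its candidates, and the same combinations built by hand with itertools.product. The grid values here are illustrative, not the ones from the lecture:

```python
from itertools import product

from sklearn.model_selection import ParameterGrid

# Illustrative grid (assumption: smaller than the lecture's grid)
param_grid = {
    "svc__C": [0.1, 1, 10],
    "svc__gamma": [0.01, 0.1],
}

# ParameterGrid yields one dict per combination: the Cartesian product
combos = list(ParameterGrid(param_grid))
print(len(combos))  # 6 = 3 * 2

# The same combinations built by hand
keys = list(param_grid)
manual = [dict(zip(keys, values)) for values in product(*param_grid.values())]


def canon(dicts):
    """Order-independent representation of a list of parameter dicts."""
    return sorted(tuple(sorted(d.items())) for d in dicts)


print(canon(combos) == canon(manual))  # True
```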

svc_pipe = make_pipeline(preprocessor, SVC())  # an instantiated pipeline: note SVC(), not the SVC class

svc_pipe

Pipeline(steps=[('columntransformer',
ColumnTransformer(transformers=[('standardscaler',
StandardScaler(),
['acousticness',
'danceability', 'energy',
'instrumentalness',
'liveness', 'loudness',
'speechiness', 'tempo',
'valence']),
('passthrough', 'passthrough',
['mode']),
('onehotencoder',
OneHotEncoder(handle_unknown='ignore'),
['time_signature', 'key']),
('countvectorizer',
CountVectorizer(max_features=100,
stop_words='english'),
'song_title')])),
('svc', SVC())])

from sklearn.model_selection import GridSearchCV

pipe_svm = make_pipeline(preprocessor, SVC())

param_grid = {

"columntransformer__countvectorizer__max_features": [100, 200, 400, 800, 1000, 2000],

"svc__gamma": [0.001, 0.01, 0.1, 1.0, 10, 100],

"svc__C": [0.001, 0.01, 0.1, 1.0, 10, 100],

}

# Create a grid search object

gs = GridSearchCV(pipe_svm, param_grid=param_grid, n_jobs=-1, return_train_score=True)

The GridSearchCV object above behaves like a classifier. We can call fit, predict or score on it.
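A self-contained sketch of the same workflow on a small scale. Assumptions: synthetic data from make_classification replaces the Spotify data, and a StandardScaler + SVC pipeline replaces the lecture’s preprocessor. It also shows where the "svc__" prefix in the grid keys comes from: make_pipeline names each step after its lowercased class name, and nested parameters are addressed as <step name>__<parameter>.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in data (assumption: not the Spotify dataset)
X, y = make_classification(n_samples=300, n_features=10, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2, random_state=123)

pipe = make_pipeline(StandardScaler(), SVC())
# Keys follow "<step name>__<parameter>"; valid keys are listed by get_params()
param_grid = {"svc__C": [0.1, 1, 10], "svc__gamma": [0.01, 0.1, 1]}
print("svc__C" in pipe.get_params())  # True

gs = GridSearchCV(pipe, param_grid=param_grid, cv=5)
gs.fit(X_tr, y_tr)  # 9 combinations x 5 folds, then a refit of the best pipeline

print(gs.best_params_)
print(gs.predict(X_te[:5]))  # predictions from the refitted best pipeline
print(gs.score(X_te, y_te))  # accuracy of that pipeline on held-out data
```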

# Carry out the search

gs.fit(X_train, y_train)

GridSearchCV(estimator=Pipeline(steps=[('columntransformer',

ColumnTransformer(transformers=[('standardscaler',

StandardScaler(),

['acousticness',

'danceability',

'energy',

'instrumentalness',

'liveness',

'loudness',

'speechiness',

'tempo',

'valence']),

('passthrough',

'passthrough',

['mode']),

('onehotencoder',

OneHotEncoder(handle_unknown='ignore'),

['time_signature',

'key']),

('countvectorizer',

CountVectorizer(max_features=100,

stop_words='english'),

'song_title')])),

('svc', SVC())]),

n_jobs=-1,

param_grid={'columntransformer__countvectorizer__max_features': [100,

200,

400,

800,

1000,

2000],

'svc__C': [0.001, 0.01, 0.1, 1.0, 10, 100],

'svc__gamma': [0.001, 0.01, 0.1, 1.0, 10, 100]},

return_train_score=True)

Fitting the GridSearchCV object searches for the best hyperparameter values and, by default (refit=True), retrains a model with those values on the full training set.

You can access the best score and the best hyperparameters using the best_score_ and best_params_ attributes, respectively.

# Get the best score

gs.best_score_

0.7395977155164125

# Get the best hyperparameter values

gs.best_params_

{'columntransformer__countvectorizer__max_features': 1000,

'svc__C': 1.0,

'svc__gamma': 0.1}

It is often helpful to visualize results of all cross-validation experiments.

You can access this information using the cv_results_ attribute of a fitted GridSearchCV object.
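One way to visualize the results is to turn cv_results_ into a DataFrame and pivot the mean validation score into a gamma × C table, similar to the heatmap built by hand earlier. A minimal sketch, assuming synthetic data and a bare SVC (no pipeline), so the parameter columns are named param_C and param_gamma:

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Synthetic stand-in data (assumption: not the Spotify dataset)
X, y = make_classification(n_samples=200, n_features=8, random_state=0)
gs = GridSearchCV(SVC(), {"C": [0.1, 1, 10], "gamma": [0.01, 0.1, 1]}, cv=5)
gs.fit(X, y)

results = pd.DataFrame(gs.cv_results_)  # one row per combination
# Rows: gamma values, columns: C values, cells: mean validation accuracy
table = results.pivot(index="param_gamma", columns="param_C", values="mean_test_score")
print(table.round(3))

# The top-ranked combinations
print(results.sort_values("rank_test_score")[["params", "mean_test_score"]].head(3))
```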

results = pd.DataFrame(gs.cv_results_)

results.T

| | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 206 | 207 | 208 | 209 | 210 | 211 | 212 | 213 | 214 | 215 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| mean_fit_time | 0.104123 | 0.091723 | 0.081871 | 0.089127 | 0.08145 | 0.082344 | 0.086312 | 0.079848 | 0.112528 | 0.096349 | ... | 0.129181 | 0.108881 | 0.11883 | 0.108802 | 0.096398 | 0.15581 | 0.125058 | 0.113329 | 0.110062 | 0.114328 |

| std_fit_time | 0.007768 | 0.01175 | 0.009403 | 0.006055 | 0.00732 | 0.013899 | 0.006945 | 0.006046 | 0.010098 | 0.014253 | ... | 0.013599 | 0.002556 | 0.003461 | 0.004804 | 0.009283 | 0.013123 | 0.003564 | 0.003017 | 0.01028 | 0.028482 |

| mean_score_time | 0.021796 | 0.023445 | 0.021901 | 0.022294 | 0.022494 | 0.027702 | 0.01979 | 0.024711 | 0.019548 | 0.022263 | ... | 0.023513 | 0.023242 | 0.023758 | 0.023175 | 0.017724 | 0.020237 | 0.022042 | 0.023271 | 0.023701 | 0.02129 |

| std_score_time | 0.006511 | 0.004352 | 0.010497 | 0.004802 | 0.001931 | 0.006765 | 0.002836 | 0.00635 | 0.00429 | 0.003825 | ... | 0.002489 | 0.000603 | 0.000759 | 0.003478 | 0.002546 | 0.001506 | 0.001134 | 0.000242 | 0.000516 | 0.002989 |

| param_columntransformer__countvectorizer__max_features | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | ... | 2000 | 2000 | 2000 | 2000 | 2000 | 2000 | 2000 | 2000 | 2000 | 2000 |

| param_svc__C | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.01 | 0.01 | 0.01 | 0.01 | ... | 10 | 10 | 10 | 10 | 100 | 100 | 100 | 100 | 100 | 100 |

| param_svc__gamma | 0.001 | 0.01 | 0.1 | 1.0 | 10 | 100 | 0.001 | 0.01 | 0.1 | 1.0 | ... | 0.1 | 1.0 | 10 | 100 | 0.001 | 0.01 | 0.1 | 1.0 | 10 | 100 |

| params | {'columntransformer__countvectorizer__max_features': 100, 'svc__C': 0.001, 'svc__gamma': 0.001} | {'columntransformer__countvectorizer__max_features': 100, 'svc__C': 0.001, 'svc__gamma': 0.01} | {'columntransformer__countvectorizer__max_features': 100, 'svc__C': 0.001, 'svc__gamma': 0.1} | {'columntransformer__countvectorizer__max_features': 100, 'svc__C': 0.001, 'svc__gamma': 1.0} | {'columntransformer__countvectorizer__max_features': 100, 'svc__C': 0.001, 'svc__gamma': 10} | {'columntransformer__countvectorizer__max_features': 100, 'svc__C': 0.001, 'svc__gamma': 100} | {'columntransformer__countvectorizer__max_features': 100, 'svc__C': 0.01, 'svc__gamma': 0.001} | {'columntransformer__countvectorizer__max_features': 100, 'svc__C': 0.01, 'svc__gamma': 0.01} | {'columntransformer__countvectorizer__max_features': 100, 'svc__C': 0.01, 'svc__gamma': 0.1} | {'columntransformer__countvectorizer__max_features': 100, 'svc__C': 0.01, 'svc__gamma': 1.0} | ... | {'columntransformer__countvectorizer__max_features': 2000, 'svc__C': 10, 'svc__gamma': 0.1} | {'columntransformer__countvectorizer__max_features': 2000, 'svc__C': 10, 'svc__gamma': 1.0} | {'columntransformer__countvectorizer__max_features': 2000, 'svc__C': 10, 'svc__gamma': 10} | {'columntransformer__countvectorizer__max_features': 2000, 'svc__C': 10, 'svc__gamma': 100} | {'columntransformer__countvectorizer__max_features': 2000, 'svc__C': 100, 'svc__gamma': 0.001} | {'columntransformer__countvectorizer__max_features': 2000, 'svc__C': 100, 'svc__gamma': 0.01} | {'columntransformer__countvectorizer__max_features': 2000, 'svc__C': 100, 'svc__gamma': 0.1} | {'columntransformer__countvectorizer__max_features': 2000, 'svc__C': 100, 'svc__gamma': 1.0} | {'columntransformer__countvectorizer__max_features': 2000, 'svc__C': 100, 'svc__gamma': 10} | {'columntransformer__countvectorizer__max_features': 2000, 'svc__C': 100, 'svc__gamma': 100} |

| split0_test_score | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | ... | 0.733746 | 0.616099 | 0.50774 | 0.504644 | 0.718266 | 0.718266 | 0.724458 | 0.616099 | 0.50774 | 0.504644 |

| split1_test_score | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | ... | 0.77709 | 0.625387 | 0.510836 | 0.510836 | 0.724458 | 0.739938 | 0.764706 | 0.625387 | 0.510836 | 0.510836 |

| split2_test_score | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | ... | 0.690402 | 0.606811 | 0.50774 | 0.50774 | 0.693498 | 0.705882 | 0.687307 | 0.606811 | 0.50774 | 0.50774 |

| split3_test_score | 0.506211 | 0.506211 | 0.506211 | 0.506211 | 0.506211 | 0.506211 | 0.506211 | 0.506211 | 0.506211 | 0.506211 | ... | 0.708075 | 0.618012 | 0.509317 | 0.509317 | 0.68323 | 0.704969 | 0.708075 | 0.618012 | 0.509317 | 0.509317 |

| split4_test_score | 0.509317 | 0.509317 | 0.509317 | 0.509317 | 0.509317 | 0.509317 | 0.509317 | 0.509317 | 0.509317 | 0.509317 | ... | 0.723602 | 0.645963 | 0.509317 | 0.509317 | 0.720497 | 0.717391 | 0.720497 | 0.645963 | 0.509317 | 0.509317 |

| mean_test_score | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | ... | 0.726583 | 0.622454 | 0.50899 | 0.508371 | 0.70799 | 0.717289 | 0.721008 | 0.622454 | 0.50899 | 0.508371 |

| std_test_score | 0.000982 | 0.000982 | 0.000982 | 0.000982 | 0.000982 | 0.000982 | 0.000982 | 0.000982 | 0.000982 | 0.000982 | ... | 0.029198 | 0.013161 | 0.001162 | 0.002105 | 0.01647 | 0.012616 | 0.025396 | 0.013161 | 0.001162 | 0.002105 |

| rank_test_score | 121 | 121 | 121 | 121 | 121 | 121 | 121 | 121 | 121 | 121 | ... | 9 | 81 | 91 | 97 | 28 | 22 | 18 | 81 | 91 | 97 |

| split0_train_score | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | ... | 1.0 | 1.0 | 1.0 | 1.0 | 0.828682 | 0.989147 | 1.0 | 1.0 | 1.0 | 1.0 |

| split1_train_score | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | ... | 0.999225 | 1.0 | 1.0 | 1.0 | 0.834109 | 0.989922 | 1.0 | 1.0 | 1.0 | 1.0 |

| split2_train_score | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | ... | 0.99845 | 0.999225 | 0.999225 | 0.999225 | 0.827907 | 0.987597 | 0.999225 | 0.999225 | 0.999225 | 0.999225 |

| split3_train_score | 0.508133 | 0.508133 | 0.508133 | 0.508133 | 0.508133 | 0.508133 | 0.508133 | 0.508133 | 0.508133 | 0.508133 | ... | 0.998451 | 0.999225 | 0.999225 | 0.999225 | 0.841208 | 0.989156 | 0.999225 | 0.999225 | 0.999225 | 0.999225 |

| split4_train_score | 0.507359 | 0.507359 | 0.507359 | 0.507359 | 0.507359 | 0.507359 | 0.507359 | 0.507359 | 0.507359 | 0.507359 | ... | 0.999225 | 0.999225 | 0.999225 | 0.999225 | 0.82804 | 0.988381 | 0.999225 | 0.999225 | 0.999225 | 0.999225 |

| mean_train_score | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | ... | 0.99907 | 0.999535 | 0.999535 | 0.999535 | 0.831989 | 0.988841 | 0.999535 | 0.999535 | 0.999535 | 0.999535 |

| std_train_score | 0.000245 | 0.000245 | 0.000245 | 0.000245 | 0.000245 | 0.000245 | 0.000245 | 0.000245 | 0.000245 | 0.000245 | ... | 0.00058 | 0.00038 | 0.00038 | 0.00038 | 0.005151 | 0.00079 | 0.00038 | 0.00038 | 0.00038 | 0.00038 |

23 rows × 216 columns

results = (

pd.DataFrame(gs.cv_results_).set_index("rank_test_score").sort_index()

)

display(results.T)

| rank_test_score | 1 | 2 | 3 | 4 | 5 | 5 | 7 | 8 | 9 | 10 | ... | 121 | 121 | 121 | 121 | 121 | 121 | 121 | 121 | 121 | 121 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| mean_fit_time | 0.085231 | 0.086313 | 0.079107 | 0.081699 | 0.07353 | 0.074386 | 0.120402 | 0.115253 | 0.129181 | 0.114582 | ... | 0.080985 | 0.098668 | 0.101037 | 0.08573 | 0.094247 | 0.092475 | 0.096127 | 0.09177 | 0.096797 | 0.104123 |

| std_fit_time | 0.006505 | 0.003525 | 0.009367 | 0.003672 | 0.005885 | 0.008361 | 0.008925 | 0.007712 | 0.013599 | 0.00214 | ... | 0.005678 | 0.006252 | 0.010225 | 0.003507 | 0.013336 | 0.002976 | 0.00951 | 0.005066 | 0.021648 | 0.007768 |

| mean_score_time | 0.019802 | 0.021118 | 0.017176 | 0.018267 | 0.019067 | 0.019813 | 0.020689 | 0.02284 | 0.023513 | 0.016811 | ... | 0.026743 | 0.024229 | 0.024143 | 0.021021 | 0.021096 | 0.026628 | 0.026533 | 0.021708 | 0.024202 | 0.021796 |

| std_score_time | 0.000516 | 0.000943 | 0.003443 | 0.002294 | 0.006397 | 0.008837 | 0.003677 | 0.005764 | 0.002489 | 0.002575 | ... | 0.0081 | 0.007111 | 0.00504 | 0.003719 | 0.002772 | 0.01301 | 0.006304 | 0.002954 | 0.002097 | 0.006511 |

| param_columntransformer__countvectorizer__max_features | 1000 | 2000 | 400 | 800 | 200 | 100 | 800 | 1000 | 2000 | 400 | ... | 1000 | 1000 | 1000 | 400 | 400 | 400 | 400 | 400 | 1000 | 100 |

| param_svc__C | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 10 | 10 | 10 | 10 | ... | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 |

| param_svc__gamma | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | ... | 0.1 | 0.01 | 0.001 | 0.001 | 0.01 | 0.1 | 1.0 | 10 | 100 | 0.001 |

| params | {'columntransformer__countvectorizer__max_features': 1000, 'svc__C': 1.0, 'svc__gamma': 0.1} | {'columntransformer__countvectorizer__max_features': 2000, 'svc__C': 1.0, 'svc__gamma': 0.1} | {'columntransformer__countvectorizer__max_features': 400, 'svc__C': 1.0, 'svc__gamma': 0.1} | {'columntransformer__countvectorizer__max_features': 800, 'svc__C': 1.0, 'svc__gamma': 0.1} | {'columntransformer__countvectorizer__max_features': 200, 'svc__C': 1.0, 'svc__gamma': 0.1} | {'columntransformer__countvectorizer__max_features': 100, 'svc__C': 1.0, 'svc__gamma': 0.1} | {'columntransformer__countvectorizer__max_features': 800, 'svc__C': 10, 'svc__gamma': 0.1} | {'columntransformer__countvectorizer__max_features': 1000, 'svc__C': 10, 'svc__gamma': 0.1} | {'columntransformer__countvectorizer__max_features': 2000, 'svc__C': 10, 'svc__gamma': 0.1} | {'columntransformer__countvectorizer__max_features': 400, 'svc__C': 10, 'svc__gamma': 0.1} | ... | {'columntransformer__countvectorizer__max_features': 1000, 'svc__C': 0.001, 'svc__gamma': 0.1} | {'columntransformer__countvectorizer__max_features': 1000, 'svc__C': 0.001, 'svc__gamma': 0.01} | {'columntransformer__countvectorizer__max_features': 1000, 'svc__C': 0.001, 'svc__gamma': 0.001} | {'columntransformer__countvectorizer__max_features': 400, 'svc__C': 0.001, 'svc__gamma': 0.001} | {'columntransformer__countvectorizer__max_features': 400, 'svc__C': 0.001, 'svc__gamma': 0.01} | {'columntransformer__countvectorizer__max_features': 400, 'svc__C': 0.001, 'svc__gamma': 0.1} | {'columntransformer__countvectorizer__max_features': 400, 'svc__C': 0.001, 'svc__gamma': 1.0} | {'columntransformer__countvectorizer__max_features': 400, 'svc__C': 0.001, 'svc__gamma': 10} | {'columntransformer__countvectorizer__max_features': 1000, 'svc__C': 0.001, 'svc__gamma': 100} | {'columntransformer__countvectorizer__max_features': 100, 'svc__C': 0.001, 'svc__gamma': 0.001} |

| split0_test_score | 0.764706 | 0.767802 | 0.764706 | 0.76161 | 0.758514 | 0.76161 | 0.727554 | 0.718266 | 0.733746 | 0.739938 | ... | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 |

| split1_test_score | 0.767802 | 0.770898 | 0.764706 | 0.76161 | 0.758514 | 0.755418 | 0.77709 | 0.783282 | 0.77709 | 0.783282 | ... | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 |

| split2_test_score | 0.71517 | 0.708978 | 0.708978 | 0.712074 | 0.712074 | 0.712074 | 0.690402 | 0.708978 | 0.690402 | 0.693498 | ... | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 | 0.50774 |

| split3_test_score | 0.717391 | 0.717391 | 0.714286 | 0.720497 | 0.717391 | 0.714286 | 0.729814 | 0.717391 | 0.708075 | 0.714286 | ... | 0.506211 | 0.506211 | 0.506211 | 0.506211 | 0.506211 | 0.506211 | 0.506211 | 0.506211 | 0.506211 | 0.506211 |

| split4_test_score | 0.732919 | 0.729814 | 0.729814 | 0.723602 | 0.729814 | 0.732919 | 0.714286 | 0.708075 | 0.723602 | 0.701863 | ... | 0.509317 | 0.509317 | 0.509317 | 0.509317 | 0.509317 | 0.509317 | 0.509317 | 0.509317 | 0.509317 | 0.509317 |

| mean_test_score | 0.739598 | 0.738977 | 0.736498 | 0.735879 | 0.735261 | 0.735261 | 0.727829 | 0.727198 | 0.726583 | 0.726573 | ... | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 |

| std_test_score | 0.022629 | 0.025689 | 0.024028 | 0.021345 | 0.019839 | 0.020414 | 0.028337 | 0.028351 | 0.029198 | 0.032404 | ... | 0.000982 | 0.000982 | 0.000982 | 0.000982 | 0.000982 | 0.000982 | 0.000982 | 0.000982 | 0.000982 | 0.000982 |

| split0_train_score | 0.889147 | 0.903101 | 0.881395 | 0.886047 | 0.872093 | 0.856589 | 0.993023 | 0.996899 | 1.0 | 0.986047 | ... | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 |

| split1_train_score | 0.877519 | 0.895349 | 0.858915 | 0.873643 | 0.847287 | 0.83876 | 0.993023 | 0.994574 | 0.999225 | 0.987597 | ... | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 |

| split2_train_score | 0.888372 | 0.897674 | 0.87907 | 0.887597 | 0.85969 | 0.849612 | 0.994574 | 0.994574 | 0.99845 | 0.989922 | ... | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 | 0.507752 |

| split3_train_score | 0.884586 | 0.902401 | 0.869094 | 0.879938 | 0.859799 | 0.852053 | 0.989156 | 0.992254 | 0.998451 | 0.982184 | ... | 0.508133 | 0.508133 | 0.508133 | 0.508133 | 0.508133 | 0.508133 | 0.508133 | 0.508133 | 0.508133 | 0.508133 |

| split4_train_score | 0.876065 | 0.891557 | 0.861348 | 0.874516 | 0.850503 | 0.841983 | 0.992254 | 0.993029 | 0.999225 | 0.985283 | ... | 0.507359 | 0.507359 | 0.507359 | 0.507359 | 0.507359 | 0.507359 | 0.507359 | 0.507359 | 0.507359 | 0.507359 |

| mean_train_score | 0.883138 | 0.898016 | 0.869964 | 0.880348 | 0.857874 | 0.847799 | 0.992406 | 0.994266 | 0.99907 | 0.986207 | ... | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 |

| std_train_score | 0.005426 | 0.004337 | 0.009063 | 0.00573 | 0.008667 | 0.006545 | 0.001792 | 0.001594 | 0.00058 | 0.002561 | ... | 0.000245 | 0.000245 | 0.000245 | 0.000245 | 0.000245 | 0.000245 | 0.000245 | 0.000245 | 0.000245 | 0.000245 |

22 rows × 216 columns

Let’s only look at the most relevant rows.

pd.DataFrame(gs.cv_results_)[

[

"mean_test_score",

"param_columntransformer__countvectorizer__max_features",

"param_svc__gamma",

"param_svc__C",

"mean_fit_time",

"rank_test_score",

]

].set_index("rank_test_score").sort_index().T

| rank_test_score | 1 | 2 | 3 | 4 | 5 | 5 | 7 | 8 | 9 | 10 | ... | 121 | 121 | 121 | 121 | 121 | 121 | 121 | 121 | 121 | 121 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| mean_test_score | 0.739598 | 0.738977 | 0.736498 | 0.735879 | 0.735261 | 0.735261 | 0.727829 | 0.727198 | 0.726583 | 0.726573 | ... | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 |

| param_columntransformer__countvectorizer__max_features | 1000 | 2000 | 400 | 800 | 200 | 100 | 800 | 1000 | 2000 | 400 | ... | 1000 | 1000 | 1000 | 400 | 400 | 400 | 400 | 400 | 1000 | 100 |

| param_svc__gamma | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | ... | 0.1 | 0.01 | 0.001 | 0.001 | 0.01 | 0.1 | 1.0 | 10 | 100 | 0.001 |

| param_svc__C | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 10 | 10 | 10 | 10 | ... | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 |

| mean_fit_time | 0.085231 | 0.086313 | 0.079107 | 0.081699 | 0.07353 | 0.074386 | 0.120402 | 0.115253 | 0.129181 | 0.114582 | ... | 0.080985 | 0.098668 | 0.101037 | 0.08573 | 0.094247 | 0.092475 | 0.096127 | 0.09177 | 0.096797 | 0.104123 |

5 rows × 216 columns

Besides searching for the best hyperparameter values, `GridSearchCV` also fits a new model on the whole training set using the parameters that yielded the best results (this is the default behaviour, `refit=True`). So we can conveniently call `score` on the test set with a fitted `GridSearchCV` object.

gs.best_score_

0.7395977155164125

# Score the refit best model on the test set

gs.score(X_test, y_test)

0.7574257425742574

Why are best_score_ and the score above different?
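One way to start on this question: `best_score_` is the mean cross-validation score of the best candidate, measured on validation folds carved out of the training set. `score` instead evaluates the refit `best_estimator_` on data the search never saw. The sketch below checks both facts. It uses a small synthetic dataset from `make_classification` as a stand-in for the lecture's Spotify data, and the grid values are arbitrary illustrations.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for the lecture's training/test split
X, y = make_classification(n_samples=300, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

pipe = make_pipeline(StandardScaler(), SVC())
param_grid = {"svc__C": [0.1, 1.0, 10], "svc__gamma": [0.01, 0.1, 1.0]}
gs = GridSearchCV(pipe, param_grid, cv=5)  # refit=True is the default
gs.fit(X_train, y_train)

# best_score_ is the mean validation score of the best candidate over the 5 folds
cv_best = gs.cv_results_["mean_test_score"][gs.best_index_]
print(gs.best_score_, cv_best)

# score() delegates to best_estimator_, the pipeline refit on all of X_train
test_score = gs.score(X_test, y_test)
print(test_score, gs.best_estimator_.score(X_test, y_test))
```

The two numbers in each printed pair agree. The gap between `best_score_` and `test_score` comes from evaluating on different data.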

n_jobs=-1

Note the `n_jobs=-1` above. Hyperparameter optimization can be done in parallel: each configuration is cross-validated independently of the others, so these fits can run at the same time.

This is very useful when scaling up to large numbers of machines in the cloud.

Setting `n_jobs=-1` means that you want to use all available CPU cores for the task.

The __ syntax

Above: we have a nesting of transformers.

We can access the parameters of the “inner” objects by using __ to go “deeper”:

`svc__gamma`: the `gamma` of the `svc` step of the pipeline

`svc__C`: the `C` of the `svc` step of the pipeline

`columntransformer__countvectorizer__max_features`: the `max_features` hyperparameter of the `CountVectorizer` inside the column transformer (`preprocessor`)
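The double-underscore names are the keys returned by `get_params()`, and `set_params()` accepts the same keys. Below is a minimal sketch with a reduced, hypothetical preprocessor (one numeric column `tempo` and the text column `song_title`) standing in for the lecture's full `preprocessor`. `make_pipeline` and `make_column_transformer` name each step after its lowercased class name, which is where `columntransformer`, `countvectorizer`, and `svc` come from.

```python
from sklearn.compose import make_column_transformer
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Reduced stand-in for the lecture's preprocessor (not fit here)
preprocessor = make_column_transformer(
    (StandardScaler(), ["tempo"]),
    (CountVectorizer(max_features=100, stop_words="english"), "song_title"),
)
pipe = make_pipeline(preprocessor, SVC())

# Nested parameters are addressed with "<step>__<inner step>__<parameter>"
params = pipe.get_params()
print(params["columntransformer__countvectorizer__max_features"])  # 100

# The same names work with set_params, which is what the search objects use internally
pipe.set_params(columntransformer__countvectorizer__max_features=500, svc__C=10)
print(pipe.get_params()["columntransformer__countvectorizer__max_features"])  # 500
```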

pipe_svm

Pipeline(steps=[('columntransformer',

ColumnTransformer(transformers=[('standardscaler',

StandardScaler(),

['acousticness',

'danceability', 'energy',

'instrumentalness',

'liveness', 'loudness',

'speechiness', 'tempo',

'valence']),

('passthrough', 'passthrough',

['mode']),

('onehotencoder',

OneHotEncoder(handle_unknown='ignore'),

['time_signature', 'key']),

('countvectorizer',

CountVectorizer(max_features=100,

stop_words='english'),

'song_title')])),

('svc', SVC())])


Range of C

Note the exponential range for `C`. This is quite common: an exponential (log-scale) range lets you explore a wide range of values efficiently. There is no point trying \(C=\{1,2,3\ldots,100\}\) because \(C=1,2,3\) are too similar to each other.

Often we’re trying to find an order of magnitude, e.g. \(C=\{0.01,0.1,1,10,100\}\).

We can also write that as \(C=\{10^{-2},10^{-1},10^0,10^1,10^2\}\).

Or, in other words, \(C\) values to try are \(10^n\) for \(n=-2,-1,0,1,2\) which is basically what we have above.
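In NumPy, the same set of values can be written in a few equivalent ways. A quick sketch:

```python
import numpy as np

c_list = [0.01, 0.1, 1, 10, 100]
c_pow = 10.0 ** np.arange(-2, 3)  # 10^n for n = -2, -1, 0, 1, 2
c_log = np.logspace(-2, 2, 5)     # 5 values whose exponents are evenly spaced from -2 to 2

print(c_pow)
print(np.allclose(c_list, c_pow), np.allclose(c_pow, c_log))  # True True
```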

Visualizing the parameter grid as a heatmap

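from sklearn.model_selection import GridSearchCV

def display_heatmap(param_grid, pipe, X_train, y_train):
    grid_search = GridSearchCV(
        pipe, param_grid, cv=5, n_jobs=-1, return_train_score=True
    )
    grid_search.fit(X_train, y_train)
    results = pd.DataFrame(grid_search.cv_results_)
    # Candidates are ordered with svc__C varying slowest and svc__gamma fastest,
    # so rows of the reshaped matrix correspond to C and columns to gamma
    scores = np.array(results.mean_test_score).reshape(
        len(param_grid["svc__C"]), len(param_grid["svc__gamma"])
    )
    # plot the mean cross-validation scores
    my_heatmap(
        scores,
        xlabel="gamma",
        xticklabels=param_grid["svc__gamma"],
        ylabel="C",
        yticklabels=param_grid["svc__C"],
        cmap="viridis",
    )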
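The reshape in `display_heatmap` relies on the order of the candidates in `cv_results_`. `GridSearchCV` enumerates candidates with `ParameterGrid`, which sorts the parameter names and varies the last one fastest. Because `"svc__C"` sorts before `"svc__gamma"`, `C` changes slowest. The sketch below checks this on a small illustrative grid, without fitting anything.

```python
import numpy as np
from sklearn.model_selection import ParameterGrid

param_grid = {"svc__gamma": [0.01, 0.1, 1.0], "svc__C": [1, 10]}
candidates = list(ParameterGrid(param_grid))

# Reshape the candidate values the same way display_heatmap reshapes the scores
C_of = np.array([c["svc__C"] for c in candidates]).reshape(2, 3)
gamma_of = np.array([c["svc__gamma"] for c in candidates]).reshape(2, 3)
print(C_of)      # each row holds a single C value
print(gamma_of)  # each column holds a single gamma value
```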

Note that the range we pick for the parameters plays an important role in hyperparameter optimization.

For example, consider the following grid and the corresponding results.

param_grid1 = {

"svc__gamma": 10.0**np.arange(-3, 3, 1),

"svc__C": 10.0**np.arange(-3, 3, 1)

}

display_heatmap(param_grid1, pipe_svm, X_train, y_train)

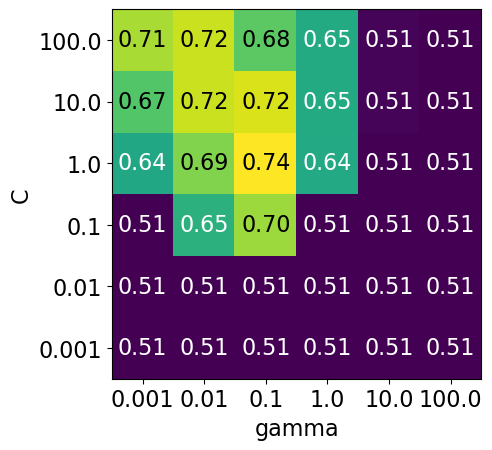

Each point in the heat map corresponds to one run of cross-validation with a particular hyperparameter setting.

Colour encodes cross-validation accuracy.

Lighter colour means high accuracy

Darker colour means low accuracy

SVC is quite sensitive to hyperparameter settings.

Adjusting hyperparameters can change the accuracy from 0.51 to 0.74!

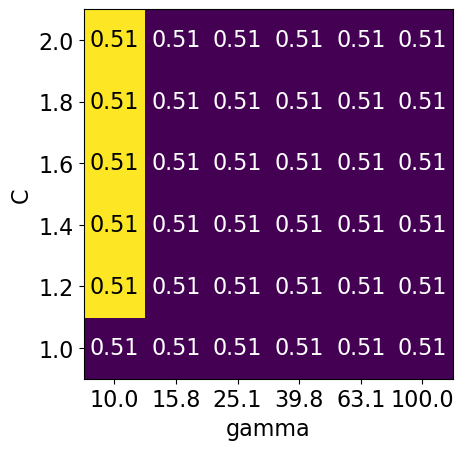

Bad range for hyperparameters

np.logspace(1, 2, 6)

array([ 10. , 15.84893192, 25.11886432, 39.81071706,

63.09573445, 100. ])

np.linspace(1, 2, 6)

array([1. , 1.2, 1.4, 1.6, 1.8, 2. ])

param_grid2 = {"svc__gamma": np.round(np.logspace(1, 2, 6), 1), "svc__C": np.linspace(1, 2, 6)}

display_heatmap(param_grid2, pipe_svm, X_train, y_train)

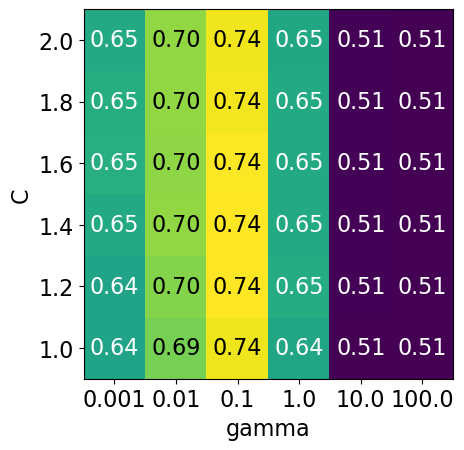
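One way to see why this grid is poorly chosen is to check how many orders of magnitude each axis covers. The quick NumPy sketch below reuses the two ranges from `param_grid2`, leaving out the rounding.

```python
import numpy as np

gamma_values = np.logspace(1, 2, 6)  # exponents evenly spaced from 1 to 2
C_values = np.linspace(1, 2, 6)      # values evenly spaced from 1 to 2

# logspace(a, b, n) equals 10 ** linspace(a, b, n): the exponents are evenly spaced
print(np.allclose(gamma_values, 10 ** np.linspace(1, 2, 6)))  # True

# Ratio of largest to smallest value on each axis
print(gamma_values.max() / gamma_values.min())  # 10.0: a single order of magnitude
print(C_values.max() / C_values.min())          # 2.0: less than one order of magnitude
```

Both axes cover a narrow band. If the good region lies outside that band, no point on the heatmap can reach it.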

Different range for hyperparameters yields better results!

np.logspace(-3, 2, 6)

array([1.e-03, 1.e-02, 1.e-01, 1.e+00, 1.e+01, 1.e+02])

np.linspace(1, 2, 6)

array([1. , 1.2, 1.4, 1.6, 1.8, 2. ])

param_grid3 = {"svc__gamma": np.logspace(-3, 2, 6), "svc__C": np.linspace(1, 2, 6)}

display_heatmap(param_grid3, pipe_svm, X_train, y_train)

It seems like we are getting even better cross-validation results with C = 2.0 and gamma = 0.1.

How about exploring different values of C close to 2.0?

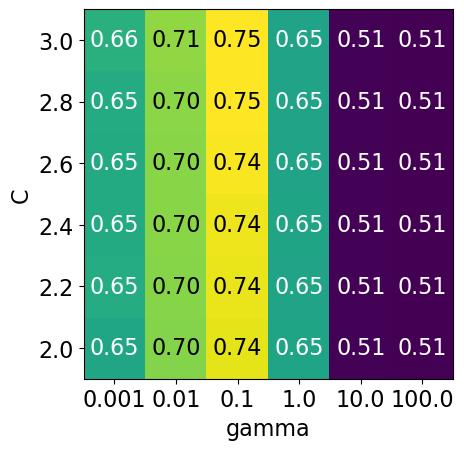

param_grid4 = {"svc__gamma": np.logspace(-3, 2, 6), "svc__C": np.linspace(2, 3, 6)}

display_heatmap(param_grid4, pipe_svm, X_train, y_train)

That’s good! We are finding some more options for C where the accuracy is 0.75.

The tricky part is that we do not know in advance which range of hyperparameters will work best for a given problem, model, and dataset.

Note

`GridSearchCV` allows `param_grid` to be a list of dictionaries. This is useful because some hyperparameters are applicable only to certain models or settings.

For example, in the context of SVC, C and gamma are applicable when the kernel is rbf whereas only C is applicable for kernel="linear".
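Here is a minimal sketch of such a list-of-dicts grid for the pipeline's `svc` step. The values are illustrative. `ParameterGrid` is the class `GridSearchCV` uses to expand the grid, so counting its length shows how many candidates the search would evaluate.

```python
from sklearn.model_selection import ParameterGrid

# gamma only matters for the rbf kernel, so it appears only in the first dict
param_grid = [
    {"svc__kernel": ["rbf"], "svc__C": [0.1, 1, 10], "svc__gamma": [0.01, 0.1, 1]},
    {"svc__kernel": ["linear"], "svc__C": [0.1, 1, 10]},
]
n_candidates = len(ParameterGrid(param_grid))
print(n_candidates)  # 3 * 3 + 3 = 12 candidates
```

Passing this list as `param_grid` to `GridSearchCV` avoids wasting fits on `gamma` values for the linear kernel.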

Problems with exhaustive grid search

The required number of models to evaluate grows exponentially with the dimensionality of the configuration space.

Example: Suppose you have

5 hyperparameters

10 different values for each hyperparameter

You’ll be evaluating \(10^5=100,000\) models! That is, you’ll be calling `cross_validate` 100,000 times!

Exhaustive search may become infeasible fairly quickly.
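The arithmetic for the example above can be checked with `ParameterGrid`. The hyperparameter names `hp0` to `hp4` are hypothetical placeholders. `len()` multiplies the list lengths rather than enumerating the configurations, so the check itself is cheap.

```python
from sklearn.model_selection import ParameterGrid

# Hypothetical example: 5 hyperparameters (hp0..hp4), 10 candidate values each
big_grid = {f"hp{i}": list(range(10)) for i in range(5)}

n_candidates = len(ParameterGrid(big_grid))
n_folds = 5  # assumed 5-fold cross-validation
print(n_candidates)            # 100000
print(n_candidates * n_folds)  # 500000 model fits
```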

Other options?

Randomized hyperparameter search

Randomized hyperparameter optimization

Samples configurations at random until a certain budget (e.g., time or a fixed number of iterations) is exhausted.
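Under the hood, `RandomizedSearchCV` draws its candidates with `ParameterSampler`. The sketch below runs that sampling step on its own, using the `gamma` and `C` lists from this lecture. When every value is a list, scikit-learn samples without replacement, so no configuration is repeated.

```python
from sklearn.model_selection import ParameterSampler

param_grid = {
    "svc__gamma": [0.001, 0.01, 0.1, 1.0, 10, 100],
    "svc__C": [0.001, 0.01, 0.1, 1.0, 10, 100],
}
# Draw 10 of the 36 possible configurations at random
samples = list(ParameterSampler(param_grid, n_iter=10, random_state=123))
for s in samples:
    print(s)
```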

from sklearn.model_selection import RandomizedSearchCV

param_grid = {

"columntransformer__countvectorizer__max_features": [100, 200, 400, 800, 1000, 2000],

"svc__gamma": [0.001, 0.01, 0.1, 1.0, 10, 100],

"svc__C": [0.001, 0.01, 0.1, 1.0, 10, 100]

}

print("Grid size: %d" % (np.prod(list(map(len, param_grid.values())))))

param_grid

Grid size: 216

{'columntransformer__countvectorizer__max_features': [100,

200,

400,

800,

1000,

2000],

'svc__gamma': [0.001, 0.01, 0.1, 1.0, 10, 100],

'svc__C': [0.001, 0.01, 0.1, 1.0, 10, 100]}

svc_pipe

Pipeline(steps=[('columntransformer',

ColumnTransformer(transformers=[('standardscaler',

StandardScaler(),

['acousticness',

'danceability', 'energy',

'instrumentalness',

'liveness', 'loudness',

'speechiness', 'tempo',

'valence']),

('passthrough', 'passthrough',

['mode']),

('onehotencoder',

OneHotEncoder(handle_unknown='ignore'),

['time_signature', 'key']),

('countvectorizer',

CountVectorizer(max_features=100,

stop_words='english'),

'song_title')])),

('abcmeta', <class 'sklearn.svm._classes.SVC'>)])


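# Rebuild the pipeline with an SVC instance, SVC(). The svc_pipe displayed above was built
# with the class SVC itself rather than an instance (hence the 'abcmeta' step name)
svc_pipe = make_pipeline(preprocessor, SVC())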

# Create a random search object

random_search = RandomizedSearchCV(svc_pipe, param_distributions=param_grid, n_iter=100, n_jobs=-1, return_train_score=True)

# Carry out the search

random_search.fit(X_train, y_train)

RandomizedSearchCV(estimator=Pipeline(steps=[('columntransformer',

ColumnTransformer(transformers=[('standardscaler',

StandardScaler(),

['acousticness',

'danceability',

'energy',

'instrumentalness',

'liveness',

'loudness',

'speechiness',

'tempo',

'valence']),

('passthrough',

'passthrough',

['mode']),

('onehotencoder',

OneHotEncoder(handle_unknown='ignore'),

['time_signature',

'key']),

('countvectorizer',

CountVectorizer(max_features=100,

stop_words='english'),

'song_title')])),

('svc', SVC())]),

n_iter=100, n_jobs=-1,

param_distributions={'columntransformer__countvectorizer__max_features': [100,

200,

400,

800,

1000,

2000],

'svc__C': [0.001, 0.01, 0.1, 1.0, 10,

100],

'svc__gamma': [0.001, 0.01, 0.1, 1.0,

10, 100]},

return_train_score=True)


pd.DataFrame(random_search.cv_results_)[

[

"mean_test_score",

"param_columntransformer__countvectorizer__max_features",

"param_svc__gamma",

"param_svc__C",

"mean_fit_time",

"rank_test_score",

]

].set_index("rank_test_score").sort_index().T

| rank_test_score | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | ... | 56 | 56 | 56 | 56 | 56 | 56 | 56 | 56 | 56 | 56 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| mean_test_score | 0.735879 | 0.735261 | 0.727198 | 0.726583 | 0.726573 | 0.723481 | 0.721008 | 0.720393 | 0.716049 | 0.705511 | ... | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 | 0.50775 |

| param_columntransformer__countvectorizer__max_features | 800 | 100 | 1000 | 2000 | 400 | 1000 | 2000 | 800 | 1000 | 100 | ... | 200 | 2000 | 400 | 100 | 200 | 200 | 100 | 200 | 1000 | 2000 |

| param_svc__gamma | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.01 | 0.1 | 0.01 | 0.01 | 0.001 | ... | 0.001 | 1.0 | 0.001 | 0.001 | 0.01 | 1.0 | 1.0 | 10 | 0.1 | 100 |

| param_svc__C | 1.0 | 1.0 | 10 | 10 | 10 | 10 | 100 | 100 | 100 | 100 | ... | 0.1 | 0.001 | 0.001 | 0.01 | 0.01 | 0.1 | 0.1 | 0.1 | 0.001 | 0.1 |

| mean_fit_time | 0.079094 | 0.073734 | 0.121584 | 0.130087 | 0.111461 | 0.082629 | 0.134377 | 0.141937 | 0.152505 | 0.072613 | ... | 0.086507 | 0.107527 | 0.099628 | 0.081122 | 0.0996 | 0.087107 | 0.084221 | 0.099165 | 0.098598 | 0.102008 |

5 rows × 100 columns

n_iter

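Note the `n_iter` argument; we didn’t need this for `GridSearchCV`. A larger `n_iter` will take longer, but it will do more searching. Remember that you still need to multiply by the number of folds: with `n_iter=100` and the default 5-fold cross-validation, the search above fits 500 models, plus one final refit on the whole training set.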

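The searches above do not set `random_state`, so the sampled configurations (and therefore the results) can change from run to run. Pass, e.g., `random_state=123` to `RandomizedSearchCV` if you want reproducible results; you don’t have to do it.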
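The sketch below makes the fit count and the effect of `random_state` concrete. It uses a small synthetic dataset and a bare `SVC` (assumptions made for speed, not the lecture's pipeline). `n_splits_` is the number of cross-validation folds the fitted search used.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import RandomizedSearchCV
from sklearn.svm import SVC

# Synthetic stand-in data
X, y = make_classification(n_samples=200, n_features=6, random_state=0)
param_grid = {"C": [0.01, 0.1, 1.0, 10, 100], "gamma": [0.001, 0.01, 0.1, 1.0, 10]}

search = RandomizedSearchCV(SVC(), param_grid, n_iter=8, cv=3, random_state=123)
search.fit(X, y)

n_candidates = len(search.cv_results_["params"])
n_fits = n_candidates * search.n_splits_  # plus one final refit on all of X
print(n_candidates, search.n_splits_, n_fits)  # 8 3 24

# With the same random_state, a second search samples the same configurations
search2 = RandomizedSearchCV(SVC(), param_grid, n_iter=8, cv=3, random_state=123).fit(X, y)
print(search.cv_results_["params"] == search2.cv_results_["params"])  # True
```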

Passing probability distributions to random search

Another approach we can take is to provide probability distributions from which to draw:

from scipy.stats import expon, lognorm, loguniform, randint, uniform, norm

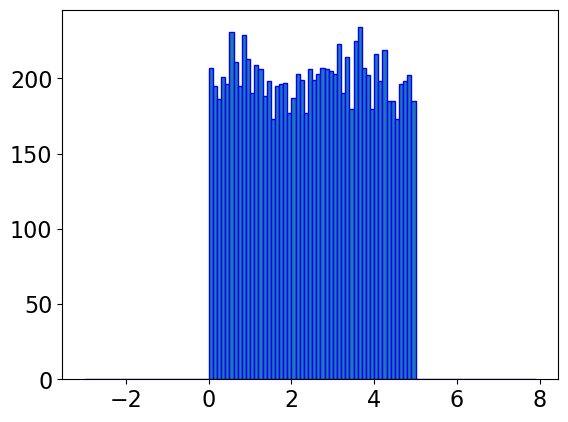

np.random.seed(123)

y = uniform.rvs(0, 5, 10000)

bin = np.arange(-3,8,0.1)

plt.hist(y, bins=bin, edgecolor='blue')

plt.show()

# Sample values

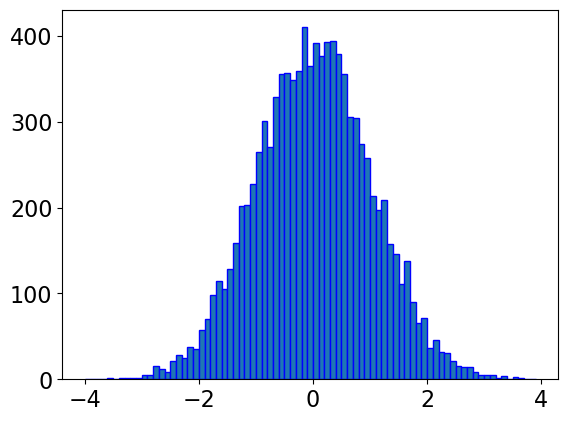

y = norm.rvs(0, 1, 10000)

#creating bin

bin = np.arange(-4,4,0.1)

plt.hist(y, bins=bin, edgecolor='blue')

plt.show()

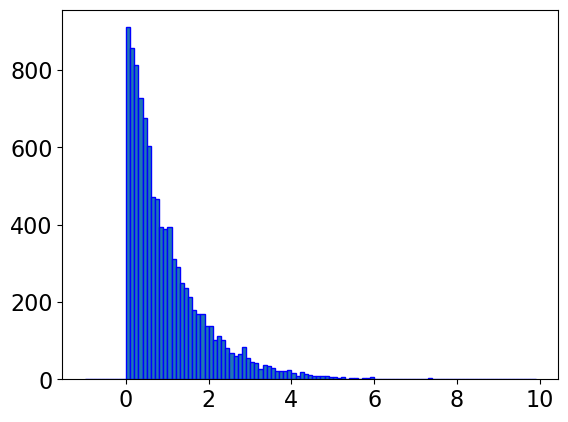

y = expon.rvs(0, 1, 10000)

#creating bin

bin = np.arange(-1,10,0.1)

plt.hist(y, bins=bin, edgecolor='blue')

plt.show()

# Sample values

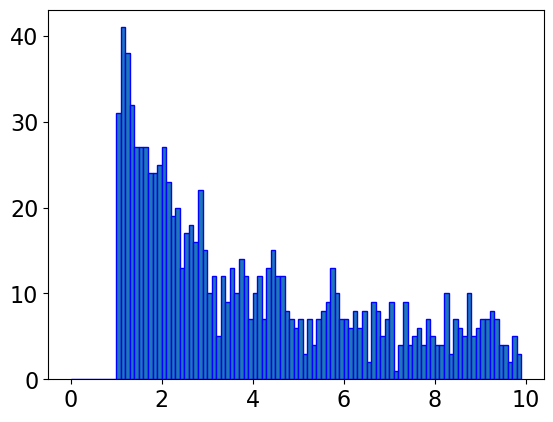

y = loguniform.rvs(10**0, 10**10, size=10000)

# Creating bins

bin = np.arange(0, 10, 0.1)

plt.hist(y, bins=bin, edgecolor='blue')

plt.show()
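For each hyperparameter, `RandomizedSearchCV` accepts either a list or any object with an `rvs` method, such as the frozen `scipy.stats` distributions plotted above. Here is a minimal sketch of the sampling step with `ParameterSampler`. The keys `max_features` and `C` are illustrative placeholders; the sampler does not check them against any estimator.

```python
from scipy.stats import loguniform, randint
from sklearn.model_selection import ParameterSampler

param_dist = {
    "max_features": randint(100, 2000),  # integers 100, 101, ..., 1999
    "C": loguniform(1e-3, 1e3),          # continuous; log10(C) is uniform on [-3, 3]
}
samples = list(ParameterSampler(param_dist, n_iter=5, random_state=0))
for s in samples:
    print(s)
```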

pipe_svm

Pipeline(steps=[('columntransformer',

ColumnTransformer(transformers=[('standardscaler',

StandardScaler(),

['acousticness',

'danceability', 'energy',

'instrumentalness',

'liveness', 'loudness',

'speechiness', 'tempo',

'valence']),

('passthrough', 'passthrough',

['mode']),

('onehotencoder',

OneHotEncoder(handle_unknown='ignore'),

['time_signature', 'key']),

('countvectorizer',

CountVectorizer(max_features=100,

stop_words='english'),

'song_title')])),

('svc', SVC())])


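param_dist = {
    # randint(100, 2000) draws integers from 100 to 1999
    "columntransformer__countvectorizer__max_features": randint(100, 2000),
    # uniform(loc, scale) draws from [loc, loc + scale], here [0.1, 10000.1];
    # the commented-out loguniform(1e-3, 1e3) would spread samples across orders of magnitude
    "svc__C": uniform(0.1, 1e4),  # loguniform(1e-3, 1e3),
    # loguniform(a, b) draws values whose logarithm is uniform between log(a) and log(b)
    "svc__gamma": loguniform(1e-5, 1e3),
}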

# Create a random search object

random_search = RandomizedSearchCV(pipe_svm,

param_distributions = param_dist,

n_iter=100,

n_jobs=-1,

return_train_score=True)

# Carry out the search

random_search.fit(X_train, y_train)

RandomizedSearchCV(estimator=Pipeline(steps=[('columntransformer',

ColumnTransformer(transformers=[('standardscaler',

StandardScaler(),

['acousticness',

'danceability',

'energy',

'instrumentalness',

'liveness',

'loudness',

'speechiness',

'tempo',

'valence']),

('passthrough',

'passthrough',

['mode']),

('onehotencoder',

OneHotEncoder(handle_unknown='ignore'),

['time_...

n_iter=100, n_jobs=-1,

param_distributions={'columntransformer__countvectorizer__max_features': <scipy.stats._distn_infrastructure.rv_discrete_frozen object at 0x16cf830d0>,

'svc__C': <scipy.stats._distn_infrastructure.rv_continuous_frozen object at 0x16cfe5d80>,

'svc__gamma': <scipy.stats._distn_infrastructure.rv_continuous_frozen object at 0x16d06d210>},

return_train_score=True)


random_search.best_score_

0.7365017402842143

pd.DataFrame(random_search.cv_results_)[

[

"mean_test_score",

"param_columntransformer__countvectorizer__max_features",

"param_svc__gamma",

"param_svc__C",

"mean_fit_time",

"rank_test_score",

]

].set_index("rank_test_score").sort_index().T

| rank_test_score | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | ... | 75 | 92 | 92 | 92 | 92 | 92 | 92 | 92 | 92 | 92 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| mean_test_score | 0.736502 | 0.734023 | 0.734021 | 0.730923 | 0.729073 | 0.727833 | 0.7272 | 0.723485 | 0.722229 | 0.719768 | ... | 0.508371 | 0.50713 | 0.50713 | 0.50713 | 0.50713 | 0.50713 | 0.50713 | 0.50713 | 0.50713 | 0.50713 |

| param_columntransformer__countvectorizer__max_features | 1867 | 1777 | 1573 | 1679 | 1540 | 704 | 1154 | 1978 | 852 | 697 | ... | 1220 | 1654 | 1197 | 1121 | 1840 | 683 | 248 | 783 | 1021 | 420 |

| param_svc__gamma | 0.243703 | 0.163957 | 0.283171 | 0.152632 | 0.107849 | 0.005368 | 0.286017 | 0.075067 | 0.219963 | 0.006266 | ... | 125.406498 | 851.479852 | 470.320897 | 455.214521 | 314.226182 | 823.099655 | 816.947532 | 617.220235 | 477.259078 | 306.849615 |

| param_svc__C | 706.965536 | 3981.026871 | 7674.924228 | 5230.093183 | 2157.539624 | 88.074488 | 1263.418408 | 2897.463534 | 9263.875195 | 141.009303 | ... | 1828.605658 | 7513.25611 | 8890.680001 | 3655.859611 | 7508.068976 | 1517.826919 | 6971.054315 | 4225.625574 | 2443.80652 | 3266.37956 |

| mean_fit_time | 0.115109 | 0.120184 | 0.1211 | 0.118286 | 0.12453 | 0.100538 | 0.109602 | 0.12987 | 0.118243 | 0.127968 | ... | 0.124883 | 0.111305 | 0.106129 | 0.106697 | 0.109048 | 0.110257 | 0.096447 | 0.121339 | 0.105305 | 0.106083 |

5 rows × 100 columns

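This is a bit fancy. What’s nice is that by choosing the distribution you control where the search concentrates its samples. For example, with `uniform(0.1, 1e4)` most sampled `C` values in the table above are in the hundreds or thousands, whereas a log-uniform distribution would spread the samples evenly across orders of magnitude.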
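A quick way to see the difference is to draw many samples from both distributions for `C` that appear in `param_dist` (the one used and the commented-out alternative). Then count how often each one proposes a value below 1. With the uniform distribution this happens with probability of only about 0.00009. With the log-uniform distribution on \([10^{-3}, 10^{3}]\), it happens about half the time.

```python
from scipy.stats import loguniform, uniform

n = 10_000
# Distribution used for svc__C above: uniform on [0.1, 10000.1]
c_uniform = uniform(0.1, 1e4).rvs(size=n, random_state=0)
# Commented-out alternative: log-uniform between 1e-3 and 1e3
c_loguniform = loguniform(1e-3, 1e3).rvs(size=n, random_state=0)

# Fraction of sampled C values below 1
frac_uniform = (c_uniform < 1).mean()
frac_loguniform = (c_loguniform < 1).mean()
print(frac_uniform, frac_loguniform)
```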

Advantages of RandomizedSearchCV

Faster than `GridSearchCV`: the cost is set by `n_iter`, not by the size of the grid. Adding parameters that do not influence the performance does not reduce efficiency.

Works better when some parameters are more important than others.

In general, I recommend using `RandomizedSearchCV` rather than `GridSearchCV`.

Advantages of RandomizedSearchCV

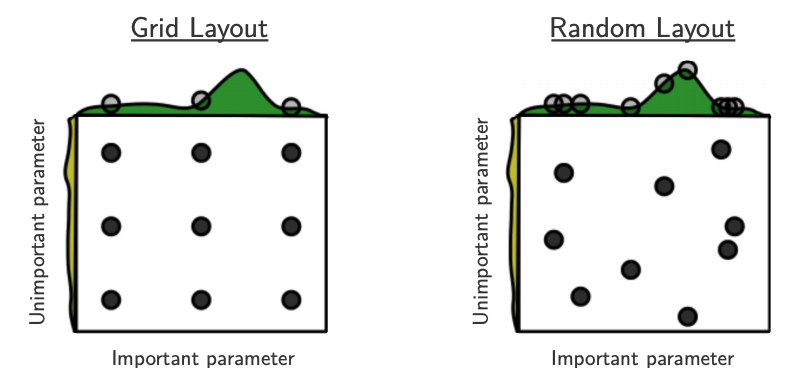

Source: Bergstra and Bengio, Random Search for Hyper-Parameter Optimization, JMLR 2012.

The yellow on the left shows how your scores are going to change when you vary the unimportant hyperparameter.

The green on the top shows how your scores are going to change when you vary the important hyperparameter.

You don’t know in advance which hyperparameters are important for your problem.

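In the left figure (grid search), the 9 trials try only 3 distinct values of the important parameter. As far as that parameter is concerned, 6 of the 9 trials are wasted, because they differ only in the unimportant one.

In the right figure (random search), each of the 9 trials tries a different value of the important parameter, so all 9 are useful.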
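The counting argument behind the figure can be reproduced in a few lines of NumPy. In this sketch both hyperparameters are rescaled to \([0, 1]\), and column 0 plays the role of the important parameter. These are illustrative choices, not the figure's actual axes.

```python
import numpy as np

rng = np.random.default_rng(0)

# 3 x 3 grid over (important, unimportant)
levels = np.linspace(0, 1, 3)
grid = np.array([(a, b) for a in levels for b in levels])
# 9 random points in the same square
random_pts = rng.uniform(0, 1, size=(9, 2))

# Number of distinct values of the important parameter each design tries
n_grid = len(np.unique(grid[:, 0]))
n_random = len(np.unique(random_pts[:, 0]))
print(n_grid, n_random)  # 3 9
```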

(Optional) Searching for optimal parameters with successive halving

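Successive halving is an iterative selection process. At the first iteration, all candidates (the parameter combinations) are evaluated with a small amount of resources (e.g., a small amount of training data). Only the best-performing candidates move on to the next iteration, where they are evaluated with more resources. With `factor=2`, roughly half of the candidates survive each iteration, and the resources per candidate double.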

Check out successive halving with grid search (`HalvingGridSearchCV`) and random search (`HalvingRandomSearchCV`).
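To see the schedule a halving search follows, you can inspect the `n_candidates_` and `n_resources_` attributes of a fitted search. The sketch below assumes a small synthetic dataset and a bare `SVC` instead of the lecture's pipeline, and the distributions for `C` and `gamma` are illustrative. The halving estimators are still experimental, so the `enable_halving_search_cv` import must come first.

```python
from scipy.stats import loguniform
from sklearn.datasets import make_classification
from sklearn.experimental import enable_halving_search_cv  # noqa: F401
from sklearn.model_selection import HalvingRandomSearchCV
from sklearn.svm import SVC

# Synthetic stand-in data
X, y = make_classification(n_samples=300, n_features=6, random_state=0)
param_dist = {"C": loguniform(1e-3, 1e3), "gamma": loguniform(1e-4, 1e1)}

rsh = HalvingRandomSearchCV(SVC(), param_dist, factor=2, random_state=123)
rsh.fit(X, y)

# Each iteration keeps about 1/factor of the candidates and gives each one
# factor times more training samples
for it, (n_cand, n_res) in enumerate(zip(rsh.n_candidates_, rsh.n_resources_)):
    print(f"iter {it}: {n_cand} candidates, {n_res} samples each")
```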

from sklearn.experimental import enable_halving_search_cv # noqa

from sklearn.model_selection import HalvingRandomSearchCV

rsh = HalvingRandomSearchCV(

estimator=pipe_svm, param_distributions=param_dist, factor=2, random_state=123

)

rsh.fit(X_train, y_train)

HalvingRandomSearchCV(estimator=Pipeline(steps=[('columntransformer',

ColumnTransformer(transformers=[('standardscaler',

StandardScaler(),

['acousticness',

'danceability',

'energy',

'instrumentalness',

'liveness',

'loudness',

'speechiness',

'tempo',

'valence']),

('passthrough',

'passthrough',

['mode']),

('onehotencoder',

OneHotEncoder(handle_unknown='ignore'),

['ti...

('svc', SVC())]),

factor=2,

param_distributions={'columntransformer__countvectorizer__max_features': <scipy.stats._distn_infrastructure.rv_discrete_frozen object at 0x16cf830d0>,

'svc__C': <scipy.stats._distn_infrastructure.rv_continuous_frozen object at 0x16cfe5d80>,

'svc__gamma': <scipy.stats._distn_infrastructure.rv_continuous_frozen object at 0x16d06d210>},

random_state=123)


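results = pd.DataFrame(rsh.cv_results_)

# The params column holds dicts, which are unhashable, so convert them to strings before de-duplicating

results["params_str"] = results.params.apply(str)

# Keep one row per (hyperparameter combination, successive-halving iteration)

results.drop_duplicates(subset=("params_str", "iter"), inplace=True)

results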

| | iter | n_resources | mean_fit_time | std_fit_time | mean_score_time | std_score_time | param_columntransformer__countvectorizer__max_features | param_svc__C | param_svc__gamma | params | ... | std_test_score | rank_test_score | split0_train_score | split1_train_score | split2_train_score | split3_train_score | split4_train_score | mean_train_score | std_train_score | params_str |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 20 | 0.005094 | 0.000645 | 0.002420 | 0.000206 | 1634 | 7129.653205 | 0.026777 | {'columntransformer__countvectorizer__max_features': 1634, 'svc__C': 7129.653205232272, 'svc__gamma': 0.02677733855112973} | ... | 0.333333 | 111 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | {'columntransformer__countvectorizer__max_features': 1634, 'svc__C': 7129.653205232272, 'svc__gamma': 0.02677733855112973} |

| 1 | 0 | 20 | 0.004428 | 0.000334 | 0.002270 | 0.000221 | 1222 | 5513.247691 | 5.698385 | {'columntransformer__countvectorizer__max_features': 1222, 'svc__C': 5513.247690828913, 'svc__gamma': 5.698384608345687} | ... | 0.367423 | 22 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | {'columntransformer__countvectorizer__max_features': 1222, 'svc__C': 5513.247690828913, 'svc__gamma': 5.698384608345687} |

| 2 | 0 | 20 | 0.004418 | 0.000327 | 0.002301 | 0.000242 | 1247 | 7800.377619 | 0.019382 | {'columntransformer__countvectorizer__max_features': 1247, 'svc__C': 7800.377619120792, 'svc__gamma': 0.019381838999846482} | ... | 0.333333 | 111 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | {'columntransformer__countvectorizer__max_features': 1247, 'svc__C': 7800.377619120792, 'svc__gamma': 0.019381838999846482} |

| 3 | 0 | 20 | 0.004321 | 0.000275 | 0.002245 | 0.000221 | 213 | 4809.419015 | 0.013707 | {'columntransformer__countvectorizer__max_features': 213, 'svc__C': 4809.41901484361, 'svc__gamma': 0.013706928443177698} | ... | 0.359784 | 144 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | {'columntransformer__countvectorizer__max_features': 213, 'svc__C': 4809.41901484361, 'svc__gamma': 0.013706928443177698} |

| 4 | 0 | 20 | 0.004311 | 0.000281 | 0.002264 | 0.000219 | 1042 | 6273.270093 | 0.003919 | {'columntransformer__countvectorizer__max_features': 1042, 'svc__C': 6273.270093376167, 'svc__gamma': 0.003919287722401839} | ... | 0.406202 | 97 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | {'columntransformer__countvectorizer__max_features': 1042, 'svc__C': 6273.270093376167, 'svc__gamma': 0.003919287722401839} |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 155 | 5 | 640 | 0.018783 | 0.000256 | 0.004562 | 0.000115 | 729 | 4943.40065 | 0.092454 | {'columntransformer__countvectorizer__max_features': 729, 'svc__C': 4943.400649628005, 'svc__gamma': 0.09245358900622544} | ... | 0.051925 | 10 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | {'columntransformer__countvectorizer__max_features': 729, 'svc__C': 4943.400649628005, 'svc__gamma': 0.09245358900622544} |

| 156 | 5 | 640 | 0.017977 | 0.000112 | 0.004740 | 0.000092 | 1383 | 2705.246646 | 0.173979 | {'columntransformer__countvectorizer__max_features': 1383, 'svc__C': 2705.246646103936, 'svc__gamma': 0.1739787032867638} | ... | 0.046116 | 9 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | {'columntransformer__countvectorizer__max_features': 1383, 'svc__C': 2705.246646103936, 'svc__gamma': 0.1739787032867638} |

| 157 | 5 | 640 | 0.017635 | 0.000059 | 0.004559 | 0.000089 | 440 | 5315.613738 | 0.17973 | {'columntransformer__countvectorizer__max_features': 440, 'svc__C': 5315.613738418384, 'svc__gamma': 0.17973005068132514} | ... | 0.043509 | 12 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | {'columntransformer__countvectorizer__max_features': 440, 'svc__C': 5315.613738418384, 'svc__gamma': 0.17973005068132514} |

| 158 | 6 | 1280 | 0.060138 | 0.000486 | 0.009153 | 0.000165 | 729 | 4943.40065 | 0.092454 | {'columntransformer__countvectorizer__max_features': 729, 'svc__C': 4943.400649628005, 'svc__gamma': 0.09245358900622544} | ... | 0.010715 | 19 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | {'columntransformer__countvectorizer__max_features': 729, 'svc__C': 4943.400649628005, 'svc__gamma': 0.09245358900622544} |

| 159 | 6 | 1280 | 0.054526 | 0.000155 | 0.010938 | 0.000228 | 1383 | 2705.246646 | 0.173979 | {'columntransformer__countvectorizer__max_features': 1383, 'svc__C': 2705.246646103936, 'svc__gamma': 0.1739787032867638} | ... | 0.026620 | 11 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | {'columntransformer__countvectorizer__max_features': 1383, 'svc__C': 2705.246646103936, 'svc__gamma': 0.1739787032867638} |

160 rows × 26 columns
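The n_resources column follows the successive-halving schedule: with factor=2, each iteration doubles the number of training samples given to each candidate and keeps only the better half (rounded up) of the candidates. Below is a minimal sketch of that bookkeeping in plain Python. It assumes min_resources=20 (read off the first rows of the table) and 80 initial candidates. The 80 is an assumption, but it is consistent with the 160 rows above (80 + 40 + 20 + 10 + 5 + 3 + 2 = 160). The helper halving_schedule is hypothetical; it only mimics the arithmetic and fits no models. It also ignores the cap that the training-set size puts on the number of iterations.

```python
import math


def halving_schedule(n_candidates, min_resources, factor):
    """Hypothetical helper mimicking successive-halving bookkeeping (no models fitted).

    Assumes the number of iterations is limited only by the number of candidates,
    i.e. the training set is large enough for every iteration.
    """
    # Iterations needed to whittle the candidates down: 1 + floor(log_factor(n_candidates))
    n_iterations = 1
    while factor ** n_iterations <= n_candidates:
        n_iterations += 1

    schedule = []
    for i in range(n_iterations):
        # Resources (training samples) grow geometrically with the iteration number
        schedule.append((n_candidates, min_resources * factor ** i))
        # Keep the top ceil(n / factor) candidates for the next iteration
        n_candidates = math.ceil(n_candidates / factor)
    return schedule


schedule = halving_schedule(n_candidates=80, min_resources=20, factor=2)
for it, (n_cand, n_res) in enumerate(schedule):
    print(f"iter {it}: {n_cand} candidates x {n_res} samples")
print("total rows:", sum(n for n, _ in schedule))  # 160
```

Because the number of candidates halves while the number of samples doubles, the early iterations each cost roughly the same (80 × 20 = 40 × 40 = 1,600 candidate-samples), which is why successive halving can afford to start with many candidates.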

(Optional) Fancier methods

Both GridSearchCV and RandomizedSearchCV do each trial independently.

What if you could learn from your experience, e.g., learn that max_depth=3 is bad?

That could save time because you wouldn't try combinations involving max_depth=3 in the future.

We can do this with scikit-optimize, which is a completely different package from scikit-learn. It uses a technique called “model-based optimization”, and we’ll specifically use “Bayesian optimization”.

In short, it uses machine learning to predict what hyperparameters will be good.

Machine learning on machine learning!
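To make "learning from experience" concrete, here is a toy sketch of sequential model-based search in plain Python. It is not Bayesian optimization and not scikit-optimize's API. The surrogate model is deliberately crude: it predicts the score of the nearest value already tried, plus a small bonus for being far from it (exploration). The objective validation_score is a hypothetical stand-in for a cross-validation score that peaks at max_depth=6; in practice each call would run cross-validation. All function names here are illustrative.

```python
import random


def validation_score(max_depth):
    # Hypothetical stand-in for a cross-validation score; peaks at max_depth=6
    return 1.0 - 0.02 * (max_depth - 6) ** 2


def surrogate(candidate, tried):
    # Toy surrogate model: predict the score of the nearest tried value,
    # plus a small bonus for distance from it to encourage exploration
    nearest = min(tried, key=lambda t: abs(t - candidate))
    return tried[nearest] + 0.01 * abs(nearest - candidate)


def model_based_search(candidates, n_init=3, n_iter=5, seed=0):
    rng = random.Random(seed)
    # Start with a few random evaluations, as a random search would
    tried = {d: validation_score(d) for d in rng.sample(candidates, n_init)}
    for _ in range(n_iter):
        untried = [c for c in candidates if c not in tried]
        if not untried:
            break
        # Use what has been learned so far to pick the most promising next value
        next_value = max(untried, key=lambda c: surrogate(c, tried))
        tried[next_value] = validation_score(next_value)
    best = max(tried, key=tried.get)
    return best, tried


best, tried = model_based_search(list(range(1, 21)))
print("evaluated max_depth values:", sorted(tried))
print("best max_depth:", best, "score:", round(tried[best], 3))
```

A real Bayesian optimizer replaces this nearest-neighbour surrogate with a probabilistic model (for example, a Gaussian process) and an acquisition function, but the loop is the same idea: fit a model to past trials, then use it to choose the next trial.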

This is an active research area and there are sophisticated packages for this.

Here are some examples

❓❓ Questions for you

(iClicker) Exercise 6.1

iClicker cloud join link: https://join.iclicker.com/SNBF

Select all of the following statements which are TRUE.

(A) If you get best results at the edges of your parameter grid, it might be a good idea to adjust the range of values in your parameter grid.

(B) Grid search is guaranteed to find the best hyperparameter values.

(C) It is possible to get different hyperparameters in different runs of RandomizedSearchCV.

V’s Solutions!

A, C

Questions for class discussion (hyperparameter optimization)

Suppose you have 10 hyperparameters, each with 4 possible values. If you run GridSearchCV with this parameter grid, how many cross-validation experiments will be carried out?

Suppose you have 10 hyperparameters and each takes 4 values. If you run RandomizedSearchCV with this parameter grid with n_iter=20, how many cross-validation experiments will be carried out?
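The counts behind these two questions can be checked with a few lines of plain Python. The sketch assumes scikit-learn's default 5-fold cross-validation (cv=5) to also count individual model fits, and it ignores the single extra refit on the whole training set that both search classes perform when refit=True (the default). The hyperparameter names hp0 to hp9 are placeholders.

```python
import math
from itertools import product

# 10 hypothetical hyperparameters, each with 4 candidate values
param_grid = {f"hp{i}": [1, 2, 3, 4] for i in range(10)}
n_folds = 5  # scikit-learn's default cv=5

# GridSearchCV tries every combination: 4 * 4 * ... * 4 = 4**10
n_grid = math.prod(len(values) for values in param_grid.values())

# Sanity check by enumerating the grid explicitly (about a million tuples)
assert n_grid == sum(1 for _ in product(*param_grid.values()))

# RandomizedSearchCV only tries n_iter sampled combinations
n_iter = 20
n_random = n_iter

print(f"GridSearchCV: {n_grid:,} CV experiments, {n_grid * n_folds:,} fits")
print(f"RandomizedSearchCV: {n_random} CV experiments, {n_random * n_folds} fits")
# GridSearchCV: 1,048,576 CV experiments, 5,242,880 fits
# RandomizedSearchCV: 20 CV experiments, 100 fits
```

The grid size grows exponentially with the number of hyperparameters, while the cost of RandomizedSearchCV is fixed by n_iter regardless of how many hyperparameters are searched.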

Optimization bias/Overfitting of the validation set

Overfitting of the validation error

Why do we need to evaluate the model on the test set in the end?

Why not just use cross-validation on the whole dataset?

While carrying out hyperparameter optimization, we usually try many possibilities.

If our dataset is small and our validation set is hit too many times, we suffer from optimization bias, i.e., overfitting the validation set.

Optimization bias of parameter learning

Overfitting of the training error

An example:

During training, we could search over tons of different decision trees.

So we can get “lucky” and find a tree with low training error by chance.

Optimization bias of hyperparameter learning

Overfitting of the validation error

An example:

Here, we might optimize the validation error over 1000 values of max_depth.

One of the 1000 trees might have low validation error by chance.
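A small simulation shows this effect without any real data. Assume, hypothetically, 1000 "models" that are pure coin flips: each is right on any example with probability 0.5, so every one of them has a true accuracy of 50%. Picking the best of them on a 50-example validation set gives an optimistic score, while scoring the selected model on a larger, fresh test set brings it back to about 50%. This is why we keep the test set aside until the very end. The sizes and the seed are arbitrary choices for illustration.

```python
import random


def simulate_optimization_bias(n_models=1000, n_val=50, n_test=1000, seed=123):
    rng = random.Random(seed)

    def coin_flip_accuracy(n_examples):
        # A hypothetical model that is right on each example with probability 0.5
        return sum(rng.random() < 0.5 for _ in range(n_examples)) / n_examples

    # "Hyperparameter optimization": score each model on n_val validation examples
    val_scores = [coin_flip_accuracy(n_val) for _ in range(n_models)]
    best_val = max(val_scores)

    # The winner is still a coin flip, so on fresh test data it scores about 0.5
    test_score = coin_flip_accuracy(n_test)
    return best_val, test_score


best_val, test_score = simulate_optimization_bias()
print(f"best validation accuracy: {best_val:.2f}")
print(f"test accuracy of the selected model: {test_score:.2f}")
```

Increasing n_val or decreasing n_models shrinks the gap between the two numbers, matching the point above that small validation sets that are hit many times suffer the most from optimization bias.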

(Optional) Example 1: Optimization bias

Consider a multiple-choice (a,b,c,d) “test” with 10 questions:

If you choose answers randomly, expected grade is 25% (no bias).

If you fill out two tests randomly and pick the best, expected grade is 33%.

Optimization bias of ~8%.

If you take the best among 10 random tests, expected grade is ~47%.