Course Information

UBC Master of Data Science program, 2024-25

Imports

import os
import sys

import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns

# Make the course's local helper module (../code/plotting_functions.py) importable
sys.path.append(os.path.join(os.path.abspath(".."), "code"))

from plotting_functions import *
from sklearn import datasets


Learning outcomes

From this lecture, students are expected to be able to:

Explain what unsupervised learning is.

Explain the difference between supervised and unsupervised learning.

Name some example applications of unsupervised learning.

Types of machine learning

Recall the typical learning problems we discussed in 571.

Supervised learning (Gmail spam filtering)

Training a model from input data and its corresponding targets to predict targets for new examples. (571, 572, 573)

Unsupervised learning (this course) (Google News)

Training a model to find patterns in a dataset, typically an unlabeled dataset.

Reinforcement learning (AlphaGo)

A family of algorithms for finding suitable actions to take in a given situation in order to maximize a reward.

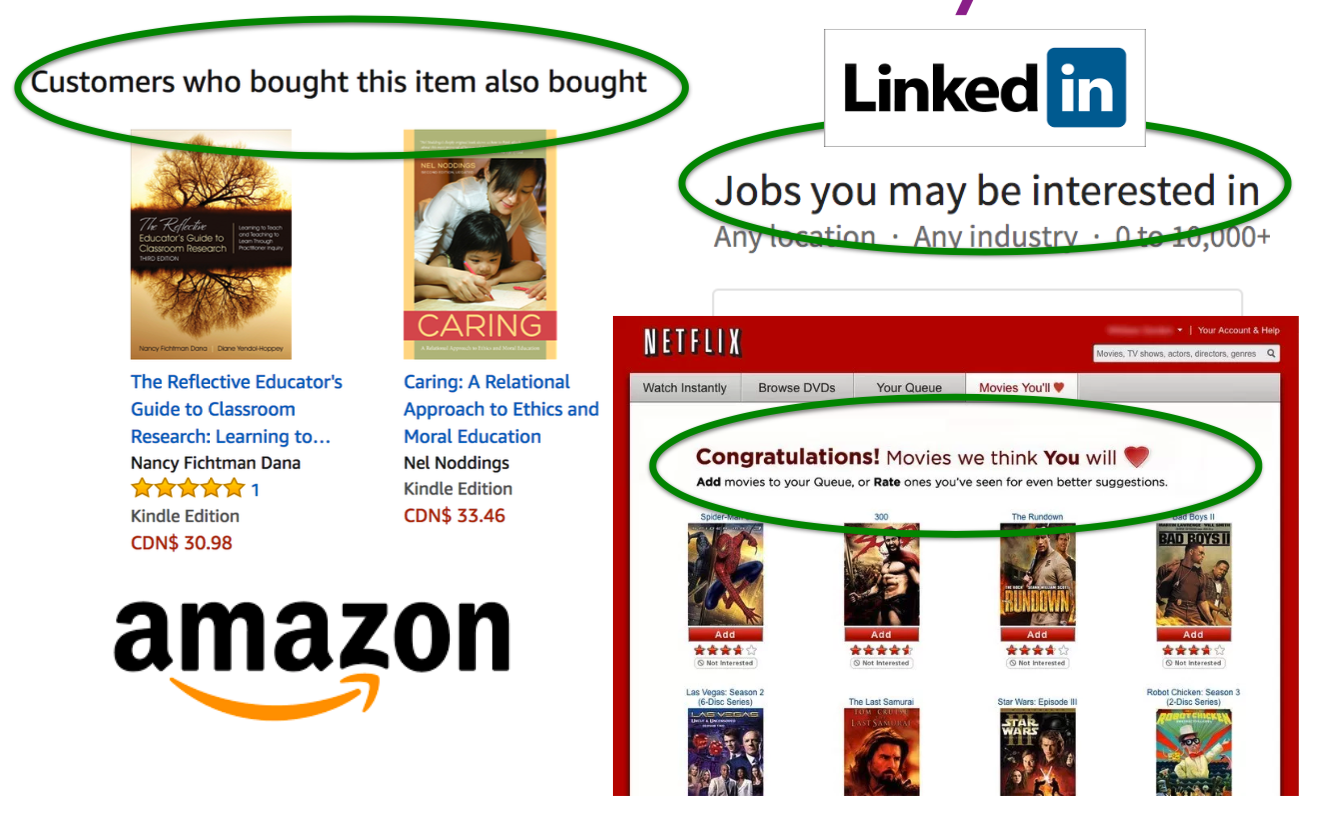

Recommendation systems (Amazon item recommendation system)

Predict the “rating” or “preference” a user would give to an item.

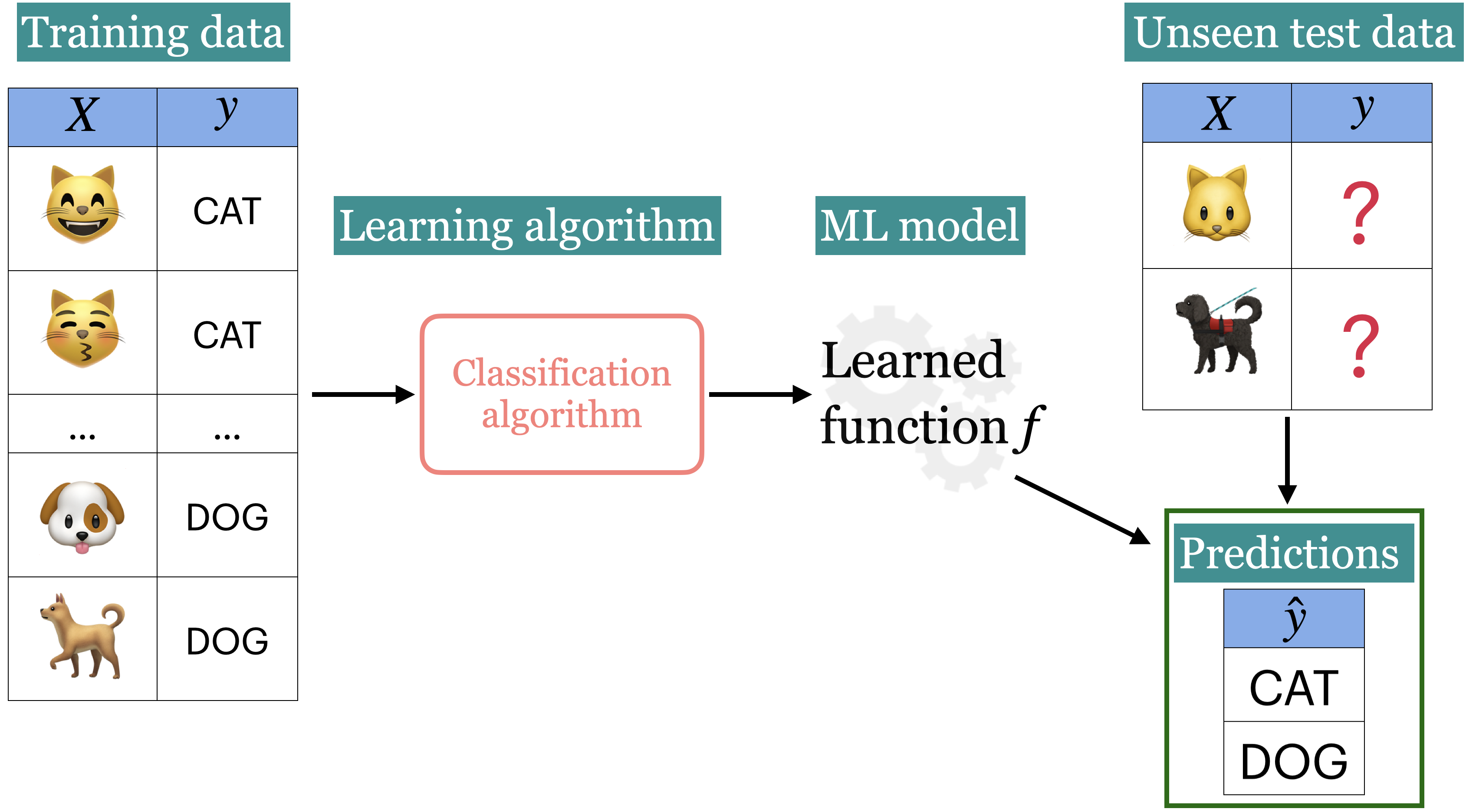
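To make "predict the rating a user would give to an item" concrete, here is a minimal sketch on a tiny, made-up ratings matrix (all numbers are hypothetical). It uses one of the simplest possible baselines: fill each missing rating with that item's average observed rating. Real recommender systems usually go well beyond this.

```python
import numpy as np

# Hypothetical ratings matrix: rows are users, columns are items.
# np.nan marks ratings we have not observed (the ones we want to predict).
ratings = np.array([
    [5.0, 3.0, np.nan],
    [4.0, np.nan, 2.0],
    [np.nan, 2.0, 1.0],
])

# Baseline: the mean observed rating of each item (column), ignoring NaNs
item_means = np.nanmean(ratings, axis=0)

# Keep observed ratings; replace each missing one with its item's mean
predicted = np.where(np.isnan(ratings), item_means, ratings)
print(predicted)
```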

Supervised learning

Training data comprises a set of observations (\(X\)) and their corresponding targets (\(y\)).

We wish to find a model function \(f\) that relates \(X\) to \(y\).

We then use that model function to predict the targets of new examples.

We have been working with this setup so far.
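A minimal sketch of this setup with scikit-learn, assuming a k-nearest-neighbours classifier as the model (any classifier would do): `fit` receives both `X` and `y`, and `predict` is then called on examples the model did not see during training.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Observations X and their targets y
X, y = load_iris(return_X_y=True)

# Hold out some examples to play the role of "new examples"
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

model = KNeighborsClassifier(n_neighbors=5)
model.fit(X_train, y_train)      # learn f from X and y
y_pred = model.predict(X_test)   # predict targets for new examples
print(model.score(X_test, y_test))
```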

Unsupervised learning

Training data consists of observations (\(X\)) without any corresponding targets.

Unsupervised learning could be used to group similar things together in \(X\) or to find underlying structure in the data.
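For contrast with the supervised setting, here is a minimal clustering sketch on the iris petal measurements, assuming k-means with three clusters. Note that `fit_predict` only receives `X`; the cluster ids it returns are arbitrary integers, not flower names.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris

# Petal length and width only; the targets are never used
X = load_iris().data[:, 2:4]

kmeans = KMeans(n_clusters=3, n_init=10, random_state=42)
cluster_ids = kmeans.fit_predict(X)  # no y passed

print(cluster_ids[:10])
print(kmeans.cluster_centers_)
```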

Can we learn without targets?

Yes, but the learning will be focused on finding the underlying structures of the inputs themselves (rather than finding the function \(f\) between input and output like we did in supervised learning models).

Examples:

Clustering

Dimensionality reduction
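The second example, dimensionality reduction, can be sketched with principal component analysis (PCA), again without using any targets. Here the four iris measurements are compressed to two numbers per flower; `explained_variance_ratio_` reports the fraction of the original variance each new dimension retains.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

# All four measurements, no targets
X = load_iris().data  # shape (150, 4)

pca = PCA(n_components=2)
X_2d = pca.fit_transform(X)  # shape (150, 2)

print(X_2d.shape)
print(pca.explained_variance_ratio_)
```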

Labeled vs. Unlabeled data

If you have access to labeled training data, you’re in the “supervised” setting.

You know what to do in that case from 571, 572, 573.

Unfortunately, getting a large amount of labeled training data is often time-consuming and expensive.

Annotated data can also become “stale” after a while in cases such as fraud detection, where fraud patterns keep changing.

Can you still make sense of the data even though you do not have the labels?

Yes! At least to a certain extent!

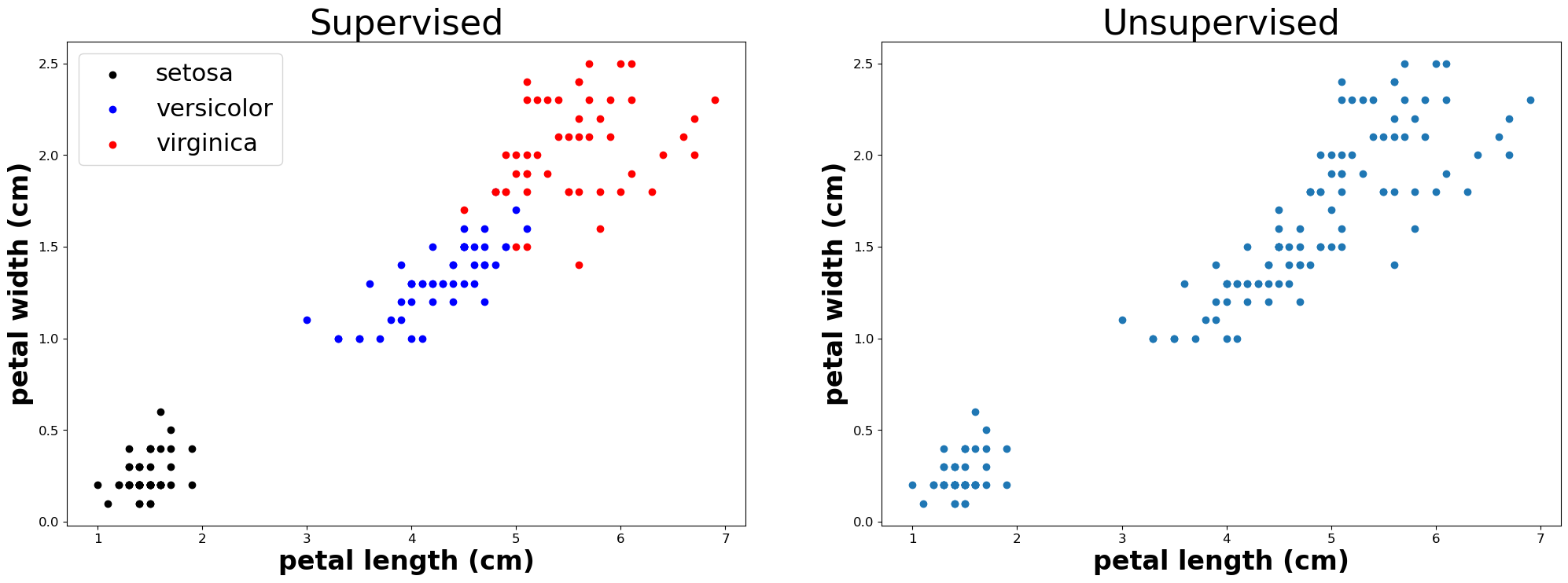

Example: Supervised vs unsupervised learning

In supervised learning, we are given features \(X\) and target \(y\).


In unsupervised learning, we are only given features \(X\).


An example with an sklearn toy dataset

## Iris dataset
iris = datasets.load_iris()  # loading the iris dataset
features = iris.data[:, 2:4]  # only consider two features (petal length and width) for visualization
labels = iris.target_names[iris.target]  # get the targets, in this case the types of the Iris flower
iris_df = pd.DataFrame(features, columns=iris.feature_names[2:])
iris_df

| | petal length (cm) | petal width (cm) |
|---|---|---|
| 0 | 1.4 | 0.2 |
| 1 | 1.4 | 0.2 |
| 2 | 1.3 | 0.2 |
| 3 | 1.5 | 0.2 |
| 4 | 1.4 | 0.2 |
| ... | ... | ... |
| 145 | 5.2 | 2.3 |
| 146 | 5.0 | 1.9 |
| 147 | 5.2 | 2.0 |
| 148 | 5.4 | 2.3 |
| 149 | 5.1 | 1.8 |

150 rows × 2 columns

np.unique(labels)

array(['setosa', 'versicolor', 'virginica'], dtype='<U10')

iris_df["target"] = labels

plot_sup_x_unsup(iris_df, 8, 8)

In the case of supervised learning, we’re given \(X\) and \(y\) (shown with different colours in the plot above).

In case of unsupervised learning, we’re only given \(X\) and the goal is to identify the underlying structure in data.
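`plot_sup_x_unsup` comes from the course's local `plotting_functions` module, which is not reproduced here. For readers without that module, the following is a rough stand-in under that assumption: a hypothetical `plot_sup_vs_unsup` function (not the course helper, and with a different signature) that draws the same points twice, coloured by target on the left and uncoloured on the right.

```python
import matplotlib.pyplot as plt
import pandas as pd
from sklearn.datasets import load_iris


def plot_sup_vs_unsup(df, x_col, y_col, target_col="target"):
    """Hypothetical stand-in for the course helper: two side-by-side scatter plots."""
    fig, (ax_sup, ax_unsup) = plt.subplots(1, 2, figsize=(10, 4), sharex=True, sharey=True)

    # Left panel: supervised view, one colour per target class
    for label, group in df.groupby(target_col):
        ax_sup.scatter(group[x_col], group[y_col], label=label, s=15)
    ax_sup.set_title("Supervised: X and y")
    ax_sup.legend()

    # Right panel: unsupervised view, the same points with no labels
    ax_unsup.scatter(df[x_col], df[y_col], color="gray", s=15)
    ax_unsup.set_title("Unsupervised: X only")

    for ax in (ax_sup, ax_unsup):
        ax.set_xlabel(x_col)
    ax_sup.set_ylabel(y_col)
    return fig


iris = load_iris()
iris_df = pd.DataFrame(iris.data[:, 2:4], columns=iris.feature_names[2:])
iris_df["target"] = iris.target_names[iris.target]
fig = plot_sup_vs_unsup(iris_df, "petal length (cm)", "petal width (cm)")
```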

Unsupervised learning applications

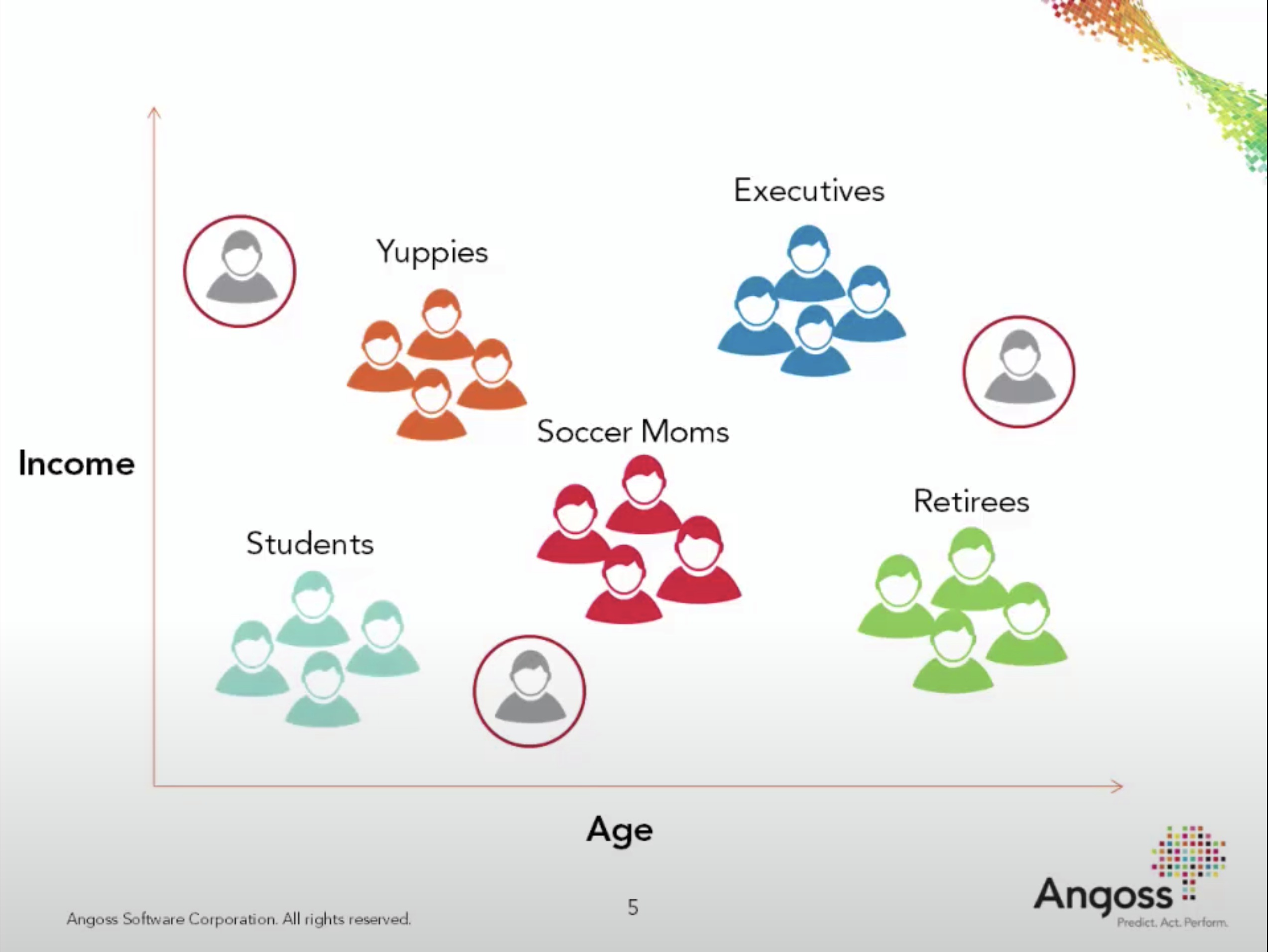

Example 1: Customer segmentation

Understand the landscape of the market.

Example 2: Document clustering

Grouping articles on different topics from different news sources. For example, Google News.
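A toy version of document clustering, assuming TF-IDF features and k-means (one common combination, not necessarily what Google News uses). The four headlines are made up: two are about finance and two about football, and the words they share only overlap within a topic, so the hope is that k-means puts each topic in its own cluster.

```python
from sklearn.cluster import KMeans
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical headlines from two topics
headlines = [
    "Stock market prices rise on strong earnings",
    "Stock market prices fall on weak earnings",
    "Football team wins the cup final",
    "Football team loses the cup final",
]

# Represent each headline as a (sparse) TF-IDF vector, one row per headline
vectorizer = TfidfVectorizer()
X_text = vectorizer.fit_transform(headlines)

# Group the headlines into two clusters without using any topic labels
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0)
topic_ids = kmeans.fit_predict(X_text)
print(topic_ids)
```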

Example 3: Recommender systems

What should we recommend to the user, so that they buy another product?

Example 4: Example projects from Capstone proposals

Here are some projects from Capstone proposals which would involve unsupervised learning.

Creating (figurative) ecommerce shopping aisles with ML

Life Decision Support: Choose your best career path

…

If you want to build a machine learning model to cluster such images, how would you represent them?
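One simple answer is sketched below under the assumption of small grayscale images stored as NumPy arrays (the random pixel values are placeholders, not real images): flatten each image into a vector of pixel intensities, so that each image becomes one row of a feature matrix.

```python
import numpy as np

# Placeholder batch of 5 grayscale images, each 28 x 28 pixels, values in [0, 255]
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(5, 28, 28))

# Flatten each image into a 784-dimensional vector and scale pixels to [0, 1];
# each row of X_images is then one example
X_images = images.reshape(len(images), -1) / 255.0
print(X_images.shape)  # (5, 784)
```

Flattening ignores which pixels are neighbours, so two images that look alike can still end up far apart in this space, which is one reason to look for better representations.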

Imagine that we also have ratings data for food items from a large number of users. Can you exploit the power of community to recommend to a given user the food items they are likely to consume?
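A minimal sketch of the "power of community" idea, assuming user-based collaborative filtering with cosine similarity on a tiny, made-up ratings matrix: predict a user's rating for an item as a similarity-weighted average of the ratings other users gave that item.

```python
import numpy as np

# Hypothetical user x food-item ratings; 0 means "not rated"
ratings = np.array([
    [5, 4, 0, 1],  # user 0: we want a prediction for item 2
    [5, 5, 4, 1],  # user 1: tastes similar to user 0
    [1, 0, 5, 5],  # user 2: different tastes
])


def cosine_similarity_rows(R):
    """Cosine similarity between every pair of rows of R."""
    norms = np.linalg.norm(R, axis=1, keepdims=True)
    unit = R / norms
    return unit @ unit.T


sim = cosine_similarity_rows(ratings.astype(float))

target_user, target_item = 0, 2
# Other users who actually rated the target item
others = [u for u in range(len(ratings))
          if u != target_user and ratings[u, target_item] > 0]

# Similarity-weighted average of their ratings for the item
weights = sim[target_user, others]
pred = weights @ ratings[others, target_item] / weights.sum()
print(round(pred, 2))
```

Treating unrated entries as 0 inside the cosine similarity is a simplification; it keeps the sketch short, but it biases the similarities.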

Course roadmap

In this course we’ll try to answer these questions. In particular, here is the roadmap of the course.

Week 1

Clustering (How to group unlabeled data?)

Week 2 and week 3

Dimensionality reduction (How to represent the data?)

Word embeddings

Week 4

Recommender systems (How to exploit the power of community to recommend relevant products, services, items to customers they are likely to consume?)

Framework and tools

sklearn, PyTorch

I will be using matplotlib, plotly, and seaborn for plotting simply because I am not very comfortable with Altair. The plotting is mainly for demonstrating the concepts, and you are not expected to learn these libraries for this class. You are free to use the libraries of your choice in labs, but you are encouraged to use Altair.

Attributions

The material of this course is built on the material developed by amazing instructors who have taught this course before. In particular, many thanks to Mike Gelbart, Rodolfo Lourenzutti, and Giulio Valentino Dalla Riva.